Any casual reader of trivia will be aware that I care about my privacy and that I go to some lengths to maintain that privacy in the face of concerted attempts by ISPs, corporations, government agencies and others to subvert it. In particular I use personally managed OpenVPN servers at various locations to tunnel my network activity and thus mask it from my ISP’s surveillance. I also use Tor (over those same VPNs), I use XMPP (and latterly Signal) for my messaging and my mobile ‘phone is resolutely non-google because I use LineageOS’s version of Android (though that still has holes – it is difficult to be completely free of google if you use an OS developed by them). Unfortunately my email is still largely unprotected, but I treat that medium as if it were correspondence by postcard and simply accept the risks inherent in its use. I like encryption, and I particularly like strong encryption which offers forward secrecy (as is provided by TLS, and unlike that offered by PGP) and will therefore use encryption wherever possible to protect my own and my family members’ usage of the ‘net.

Back in June of last year, I wrote about the problems caused by relying on third party DNS resolvers and how I decided to use my own unbound servers instead. I now run unbound on several of my VMs (in particular at my OpenVPN endpoints) and point my internal caching dnsmasq resolvers to those external recursive resolvers. This minimises my exposure to external DNS surveillance, but of course since my DNS requests themselves are in clear, any observer on the network path(s) between my internal networks and my external unbound servers (or between those servers and the root servers or other authoritative domain servers) would still be able to snoop. My DNS requests from my internal servers /should/ be protected by the OpenVPN tunnels to my unbound servers, but only if I can guarantee that those requests actually go to the servers I expect (and not to one of the others I have specified in my dnsmasq resolver lists). I attempt to mitigate this possibility on my internal network by using OpenVPN’s server configuration options

push "redirect-gateway def1"

and

push "dhcp-option DNS 123.123.123.123"

but my network architecture (see below) and my iptables rules on my external servers started to make this complicated and (potentially) unreliable. Simplicity is easier to maintain, and usually safer. Certainly I am less likely to make a configuration mistake if I keep things simple.
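To illustrate what "in clear" means for a DNS request, here is a minimal sketch in Python which builds an ordinary query in the RFC 1035 wire format and then reads the name back out of the raw bytes, much as an on-path observer could. The function names (`build_query`, `visible_labels`) and the example hostname are assumptions for illustration only; nothing here is taken from my actual configuration.

```python
import struct


def build_query(name, qtype=1, query_id=0x1234):
    """Build a plain (unencrypted) DNS query in RFC 1035 wire format."""
    # Header: ID, flags (0x0100 = recursion desired), QDCOUNT=1, other counts 0
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # Question name: each label is prefixed by its length, ended by a zero byte
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE (1 = A record) followed by QCLASS (1 = IN)
    return header + qname + struct.pack("!HH", qtype, 1)


def visible_labels(packet):
    """Recover the queried name from the raw bytes of a query."""
    labels = []
    pos = 12  # the question section starts after the 12-byte header
    while packet[pos] != 0:
        length = packet[pos]
        labels.append(packet[pos + 1:pos + 1 + length].decode("ascii"))
        pos += 1 + length
    return labels


packet = build_query("www.example.com")
print(packet.hex())
print(".".join(visible_labels(packet)))
```

The second line printed is the hostname, recovered without any key or secret. That is all an observer needs unless the packet travels inside a tunnel or the DNS transport itself is encrypted.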

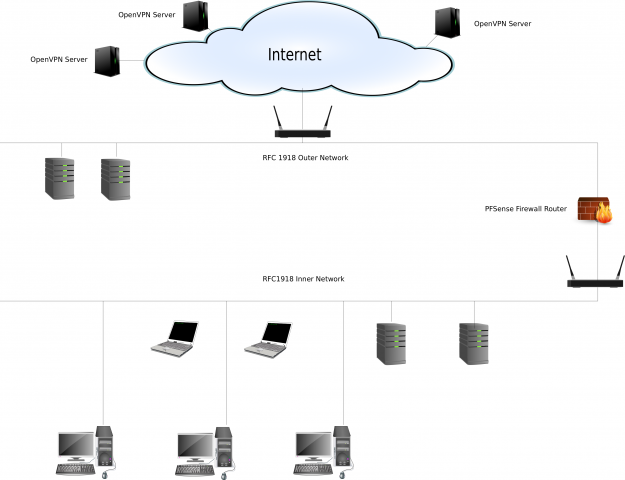

Part of my problem stems from the fact that I have two separate internal networks (well, three if you count my guest WiFi net) each with their own security policy stance and their own dnsmasq resolvers. Worse, the external network (deliberately and consciously) does not use my OpenVPN tunnels and my mobile devices could (and often do) connect to either of the two networks. Since both my dnsmasq resolvers point to the same list of external unbound servers this necessarily complicates the iptables rules on my servers which have to allow inbound connections from both my external ISP provided IP address and all of my OpenVPN endpoints. When thinking through my connectivity I found that I could not always guarantee the route my DNS requests would take, or which unbound server would respond. All this is made worse by my tendency to swap VPN endpoints or add new ones as the whim (and price of VMs) takes me.

Yes, a boy needs a hobby, but not an unnecessarily complicated one…

On reflection I concluded that my maintenance task would be eased if I could rely on just one or two external resolvers and find some other way to protect my DNS requests from snooping. The obvious solution here would be encryption of all DNS requests leaving my local networks (I have to trust those or I am completely lost). But how?

At the time of writing, there are three separate mechanisms for encrypting DNS: DNSCrypt, DNS over HTTPS (DoH) and DNS over TLS (DoT).

The first of these has never been an internet standard (indeed it has never been offered as one), but it was implemented, and publicly offered, by OpenDNS back in 2011. Personally I would shy away from using any non-standard protocol, particularly one which was not widely adopted, and very particularly one which was offered by OpenDNS. That company (alongside others such as Quad9 and Cleanbrowsing) markets itself as offering “filtered” DNS. I don’t like that.

Of the other two, both have RFCs describing them as internet standards (DoH in RFC 8484 and DoT in RFCs 7858 and 8310), but there is some (fairly widespread) disagreement over which protocol is “best”. This ZDNET article from October last year gives a good exposition of the arguments. That article comes down heavily against DoH and in favour of DoT. In particular it says that:

- DoH doesn’t actually prevent ISPs user tracking

- DoH creates havoc in the enterprise sector

- DoH weakens cyber-security

- DoH helps criminals

- DoH shouldn’t be recommended to dissidents

- DoH centralizes DNS traffic at a few DoH resolvers

And concludes:

The TL;DR is that most experts think DoH is not good, and people should be focusing their efforts on implementing better ways to encrypt DNS traffic — such as DNS-over-TLS — rather than DoH.

When people like Paul Vixie describe DoH in terms such as:

Rfc 8484 is a cluster duck for internet security. Sorry to rain on your parade. The inmates have taken over the asylum.

and

DoH is an over the top bypass of enterprise and other private networks. But DNS is part of the control plane, and network operators must be able to monitor and filter it. Use DoT, never DoH.

and Richard Bejtlich of TaoSecurity says:

DoH is an unfortunate answer to a complicated problem. I personally prefer DoT (DNS over TLS). Putting an OS-level function like name resolution in the hands of an application via DoH is a bad idea. See what @paulvixie has been writing for the most informed commentary.

I tend to take the view that perhaps DoH may not be the best way forward and I should look at DoT solutions.

Interestingly though, Theodore Ts’o replied to Paul Vixie in the second quote above that:

Unfortunately, these days more often than not I consider network operators to be a malicious man-in-the-middle actor instead of a service provider. These days I’m more often going to use IPSEC to insulate myself from the network operator, but diversity of defenses is good. :-)

and I have a lot of sympathy with that view. For example, that is exactly why I wrap my own network activity in as much protective encryption as I can. I don’t trust my local ISP.

In another discussion in a reddit thread, Bill Woodcock (Executive Director of Packet Clearing House and Chair of the Board of Quad9) said:

[DNSCrypt] is not an IETF standard, so it’s not terribly widely implemented.

and

DNS-over-HTTPS is an ugly hack, to try to camouflage DNS queries as web queries.

and went on

DNS-over-TLS is an actual IETF standard, with a lot of interoperability work behind it. As a consequence of that, it’s the most widely supported in software, of the three options. DNS-over-TLS is the primary encryption method that Quad9 supports.

Whilst I might not like the way Quad9 handles its public DNS resolvers (and personally I wouldn’t use them), I can’t disagree with Woodcock’s conclusion.

My own view is that DoH looks very much like a bodge, and a possibly dangerous one at that. I’ve been a sysadmin in a corporate environment and I know that I would be very unhappy knowing that my users could bypass my local DNS resolvers at application level and mask their outgoing DNS requests as HTTPS web traffic. Indeed, when Mozilla announced its decision last year to include DoH within Firefox it caused some concern within both UK Central Government and the UK’s Internet Service Providers Association. Here I am slightly conflicted, however, because I can see exactly why that masking is attractive to the end user. For example, I sometimes run OpenVPN tunnels over TCP on port 443, rather than the default UDP on port 1194, for exactly the same reasons – camouflage and firewall bypass. And of course I use Tor. Mozilla reportedly bowed to UK pressure and did not activate (and still has not activated) DoH by default in UK versions of Firefox. But it is not terribly difficult to activate should you so wish.
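To show how little there is to the masking, here is a hedged sketch in Python of the GET form of a DoH request as described in RFC 8484: the binary DNS message is base64url encoded, the padding is stripped, and the result is carried in a `dns` query parameter of an ordinary HTTPS URL. The resolver URL `https://dns.example/dns-query` is a placeholder and the function name `doh_get_url` is my own; the message is the same `www.example.com` query that the RFC uses in its examples. No request is actually sent.

```python
import base64

# A type A query for "www.example.com" in DNS wire format, with the ID set to 0.
QUERY = bytes.fromhex(
    "0000 0100 0001 0000 0000 0000"             # header: ID, flags, counts
    "03 777777 07 6578616d706c65 03 636f6d 00"  # www . example . com
    "0001 0001"                                 # QTYPE A, QCLASS IN
)


def doh_get_url(resolver_url, message):
    """Return the URL of a DoH GET request carrying `message`."""
    # base64url encoding, with any trailing '=' padding removed
    encoded = base64.urlsafe_b64encode(message).rstrip(b"=").decode("ascii")
    return f"{resolver_url}?dns={encoded}"


# Placeholder resolver address, not a real service.
print(doh_get_url("https://dns.example/dns-query", QUERY))
```

Once that URL is fetched over TLS on port 443, a network operator sees only another HTTPS connection, which is exactly the property that pleases the end user and alarms the sysadmin.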

One of my main concerns over the use of a protocol which operates at the application layer, and ignores the network directives, is that those applications could, and probably would, come with a set of hard-coded DoH servers. Those servers could be hostile, or even if not directly hostile, they could be subverted by hostile entities for malicious purposes, or they could just fail. Mozilla itself hard-codes Cloudflare’s DoH servers into Firefox, but you could of course change that to any one or more of the servers on this list. The hard-coding of Cloudflare could cause Firefox users problems in future if that service were to fail. An article in the Register pointed to a failure in the F root server in February of this year caused by a faulty BGP advertisement connected with Cloudflare. As the Reg pointed out:

If a software bug in closed Cloudflare software can cause a root server to vanish an entire, significant piece of the internet then it is all too possible – in fact, likely – that at some point a similar issue will cause Firefox users to lose their secure DNS connections. And that could cause them to lose the internet altogether (it would still be there, but most users would have no idea what the cause was or how to get around it.)

Of course Mozilla isn’t the only browser provider to offer DoH. As the Register pointed out in November last year, both Google (with Chrome) and Microsoft (with Edge) are rolling out their own implementations. I’m with the Reg in being concerned about the centralisation of knowledge about DNS lookups this necessarily entails. If browsers (which after all are the critical application most used to access the web) all end up doing their DNS lookups by default to central servers controlled by a very few companies – and moreover companies which may have an inherent interest in monetising that information – then we will have lost a lot of the freedom, and privacy, that the proponents of the DoH protocol purport to support.

So DoT is the way to go for me. And I’ll cover how I did that in my next post.
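For comparison with DoH, the DoT transport itself is simple: RFC 7858 assigns it port 853, and each DNS message is sent over the TLS connection with the usual two-byte length prefix used by DNS over TCP. The following is a minimal protocol sketch in Python, not the setup described in the next post; the function names are my own and the address and hostname in the usage note are placeholders.

```python
import socket
import ssl
import struct

DOT_PORT = 853  # port assigned to DNS over TLS by RFC 7858


def frame(message):
    """Prefix a DNS message with its two-byte length, as DNS over TCP requires."""
    return struct.pack("!H", len(message)) + message


def read_exact(sock, count):
    """Read exactly `count` bytes from a socket, or raise if it closes early."""
    data = b""
    while len(data) < count:
        chunk = sock.recv(count - len(data))
        if not chunk:
            raise ConnectionError("connection closed before the full reply arrived")
        data += chunk
    return data


def dot_query(server_ip, server_name, message, timeout=5.0):
    """Send one wire-format DNS query over TLS and return the raw reply."""
    # The default context verifies the certificate chain and the hostname.
    context = ssl.create_default_context()
    with socket.create_connection((server_ip, DOT_PORT), timeout=timeout) as raw:
        with context.wrap_socket(raw, server_hostname=server_name) as tls:
            tls.sendall(frame(message))
            (length,) = struct.unpack("!H", read_exact(tls, 2))
            return read_exact(tls, length)
```

A call would look something like `dot_query("192.0.2.1", "resolver.example", query_bytes)`, where both the address and the name are stand-ins for a resolver you actually trust. Because DoT has its own well-known port, a network operator can see (and if necessary block) that encrypted DNS is in use, even though the queries themselves are hidden.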

4 comments


Hi. You say you don’t “like the way Quad9 handles its public DNS resolvers.” Can you elaborate, and suggest what you’d do differently? Thanks.

Author

Hi Bill

Thanks for the query. I think I have already answered this in an earlier post. See https://baldric.net/2019/06/26/one-unbound-and-you-are-free/. As that post explains, I expect a DNS server simply to answer my queries, and not to examine those queries before making a decision about how to reply. Quad9 markets its service as a “secure DNS”. Its own opening page at https://quad9.net/ has the banner “Search a domain to see if we are blocking it”, and the FAQ at https://www.quad9.net/faq/ says:

and

and

So, Quad9 actively interferes with DNS requests, blocks certain hosts by returning a spurious NXDOMAIN response and logs DNS requests. All of that may be perfectly acceptable to some people, particularly if they actively wish to opt in to that sort of filtering. I don’t.

Agreed, the FAQ also points users to the “unsecured” servers

but there is no mention of the logging policy for those servers and I like complete transparency.

Cheers

Mick

So, to summarize:

1) You think we should remove the malware blocking option, so people who want malware blocking can no longer have it.

2) You want us to further clarify that when we say we don’t log DNS requests, we mean that we don’t log DNS requests ever, under any circumstances.

I don’t have any problem with #2, though it could get tiresome if carried to the extreme. What’s your rationale for #1? If some people want malware blocking, why should they not be allowed to have it?

-Bill

Author

Hi Bill

Thanks for another comment.

1. No, I do not think that, and I did not say that. I said “All of that may be perfectly acceptable to some people, particularly if they actively wish to opt in to that sort of filtering. I don’t.” And as I have said in earlier posts, I’m a purist. I expect a DNS service simply to answer my queries, not to make decisions about whether those queries should be answered. If I were a network administrator or security officer charged with protecting a corporate network it is likely that I /would/ look at something like Quad9’s DNS offering to add to my protective policy options (together with whatever boundary devices such as application level proxies/filters, email spam/phishing checks etc. I had in place). I’m not, I am a private individual. But even if I were, I would want greater visibility of the checks (and subsequent blocks) which were being made on my behalf.

Call me paranoid if you like (some of my friends do) but I dislike any attempts by third parties to make decisions about my network usage. I particularly dislike the way it is becoming increasingly difficult to remain private, anonymous and untracked on the ‘net (and I have been a net user for a long time.) I recognise that your organisation has a better approach to privacy than does say google (it would be difficult to be worse), but you are still providing (and actively marketing) a service which lacks complete transparency. Don’t get me wrong, I actually think that your service may be a good thing for many net users – it’s just not right for me.

Here in the UK we have a quite draconian system of state imposed surveillance (and I say that advisedly). I don’t like that, and nor do I like the march of what Shoshana Zuboff calls “surveillance capitalism” so I do what I can to avoid it. It would therefore not make much sense for me to use a DNS resolving system which actively intervenes in my net usage, regardless of how well meant that intervention may be.

On 2) yes, I would welcome such clarity.

Thanks again for the response. It has made me look harder at what you offer and I appreciate that.

Mick

A couple more points just occurred to me. Firstly, notwithstanding my earlier expressed dislike of interception of DNS and re-direction to a website page owned by the DNS provider (a la Verisign in the early part of the 2000s) I actually think it would be a good idea if Quad9 did just that in cases of hits on the threat intelligence supplied domains. Whilst I still think in principle that such interception is a bad idea, I also think it much preferable (and certainly more transparent) that the user should get a page saying something like “Access to this domain has been blocked because it appears in our threat intelligence database. If you think this is incorrect, please contact us at….” than simply receiving an erroneous NXDOMAIN (which is probably also incomprehensible to the majority of users). Of course you would have to consider carefully how to implement that because not all DNS queries arise from web browsing.

Secondly, and because I really like standards, why not consider Quad9 drafting a new RFC on “Filtered DNS”? You are in a perfect position to influence any new standard and you could then point to it. At the moment, what you do actually abuses the existing RFCs.

Thirdly, a question if I may. I read in an article in ArsTechnica an interview with Adnan Baykal, GCA’s Chief Technical Advisor and Phil Rettinger, GCA’s president and chief operating officer, that Quad9 operates both a “white list” and a “gold list” of entire domains never to block, regardless of whether those domains might host malware. Examples of the gold list given were Microsoft, Google and Amazon (despite the fact that Microsoft’s Azure cloud, Amazon’s AWS and Google’s docs are known to be sources of malware). I can see why you would do that (not least because if you did block them you would have their lawyers all over you) but this begs a question about how you apply your policy blocks to domains not on either a white list or the gold list. If a domain appears in a feed from one of your threat intelligence partners (say “domain.com”) do you then return NXDOMAIN solely on the A record for that domain (and its subdomain “www.domain.com”) or do you return NXDOMAIN for all records in the domain? If the latter, then that would necessarily mean that no MX record would be returned and all mail from users of your service to that domain would fail, and worse, the owner of that domain would never know. That does not strike me as at all reasonable.

M