OpenVPN is a free, open source, general purpose VPN tool which allows users to build secure tunnels through insecure networks such as the internet. It is the ideal solution to a wide range of secure tunnelling requirements, but it is not always immediately obvious how it should be deployed in some circumstances.

Recently, a correspondent on the Anglia Linux User Group (ALUG) mailing list posed a question which at first sight seemed easy to answer. He wanted to connect from one internet connected system to another which was behind a NAT firewall (actually, it turned out to be behind two NAT firewalls, one of which he didn’t control, and therein lay the difficulty).

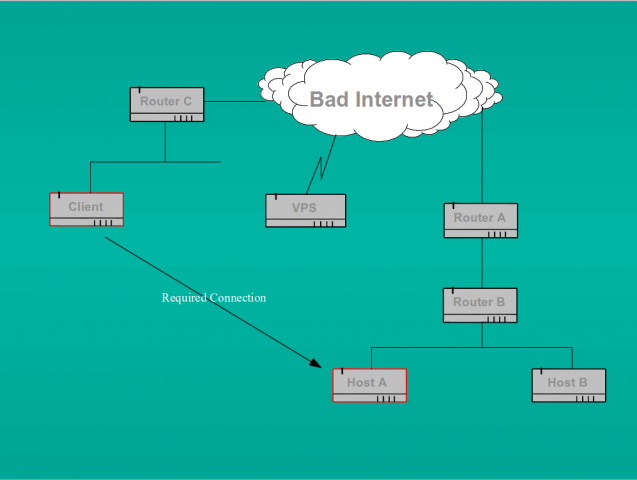

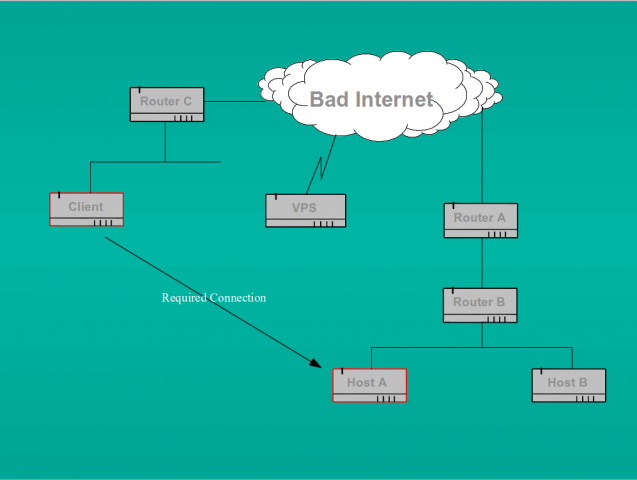

The scenario was something like this:

He wanted to connect from the system called “Client” in the network on the left to the secured system called “Host A” on the network on the right of the diagram. We can assume that both networks use RFC 1918 reserved addresses and that both are behind NAT firewalls (so routers A and C at the least are doing NAT).

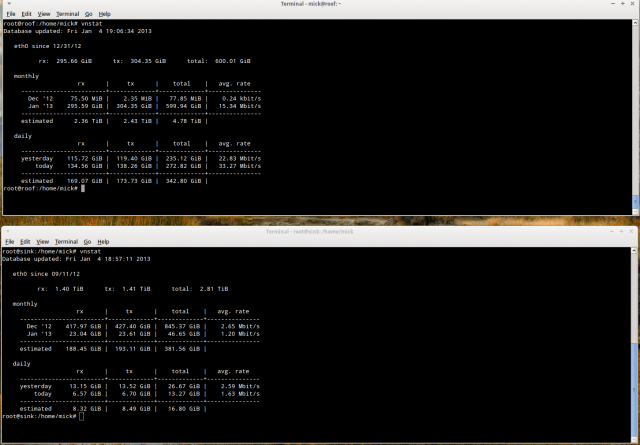

Ordinarily, this would be pretty straightforward. All we have to do is run an SSH daemon (or indeed openVPN) on Host A and set up port forwarding rules on routers A and B to forward the connection to the host. So long as we have appropriate firewall rules on both the host and the routers, and the SSH/OpenVPN daemons are well configured we can be reasonably confident that the inbound connection is secure (for some definition of “secure”). This is exactly the setup I use on my home network. I have an openVPN server running on one of my debian systems. When out and about I can connect to my home network securely over that VPN from my netbook.

However, as I noted above, the problem in this scenario is that the owner of Host A did not control the outermost NAT device (Router A) so could not set up the necessary rule. Here is where openVPN’s flexibility comes in. Given that both networks are internet connected, and can make outbound connections with little difficulty, all we need to do is set up an intermediary openVPN host somewhere on the net. A cheap VPS is the obvious solution here (and is the one I used). Here’s how to accomplish what we want:

- install openVPN server on the VPS;

- install openVPN client on Host A;

- set up the openVPN tunnel from Host A to the VPS;

- connect over SSH from Client to VPS;

- connect (using SSH again) over the openVPN tunnel from the VPS to Host A.

Using SSH over an already encrypted tunnel may seem like overkill, but it has the advantage that we can leverage the existing security mechanisms on Host A (and we really don’t want a telnet daemon listening there).

Installing openVPN

There is already a huge range of openVPN “HowTos” out there, so I won’t add to that list here. The very comprehensive official HowTo on the openVPN website lists all that you need to know to install and configure openVPN to meet a wide variety of needs, but it can be a bit daunting to newcomers. OpenVPN has a multitude of configuration options, so it is probably best to follow one of the smaller, distro-specific howtos instead. The two I have found most useful are for debian and arch. And of course Martin Brooks, one of my fellow LUGgers, has written quite a nice guide too. Note, however, that Martin’s configuration does not use client side certificates as I do here.

By way of example, the server and client configuration files I built when I was testing this setup are given below. Note that I used the PKI architectural model, not the simpler static keys approach. As the main howto points out, the static key approach doesn’t scale well, is not as secure as we’d like (keys must be stored in plain text on the server), but most importantly, it doesn’t give us perfect forward secrecy so any key compromise would result in complete disclosure of all previous encrypted sessions. Note also that I chose to change the keysize ($KEY_SIZE) in the file “vars” to 2048 from the default 1024. If you do this, the build of the CA certificate and server and client keys warns you that the build “may take a long time”. In fact, on a system with even quite limited resources, this only takes a minute or two. Of course, it should go without saying that the build process should be done on a system which is as secure as you can make it and which gives you a secure channel for passing keys around afterwards. There is little point in using a VPN for which the keys have been compromised. It is also worth ensuring that the root CA key (“ca.key”, used for signing certificates) is stored securely away from the server. So if you build the CA and server/client certificates on the server itself, make sure that you copy the CA key securely to another location and delete it from the server. It doesn’t need to be there. I chose /not/ to add passwords to the client certificates because the client I was testing from (emulating Host A) is already well secured (!). In reality, however, it is likely that you would wish to strengthen the security of the client by insisting on passwords.

server.conf on the VPS

# openvpn server conf file for VPS intermediary

# Which local IP address should OpenVPN listen on (this should be the public IP address of the VPS)

local XXX.XXX.XXX.XXX

# use the default port. Note that we firewall this on the VPS so that only the public IP address of

# the network hosting “Host A” (i.e. the public address of “Router A”) is allowed to connect. Yes, this exposes

# the VPN server to the rest of the network behind Router A, but it is a smaller set than the whole internet.

port 1194

# and use UDP as the transport because it is slightly more efficient than tcp, particularly when routing.

# I don’t see how tcp over tcp can be efficient.

proto udp

# and a tun device because we are routing not bridging (we could use a tap, but it is not necessary when routing)

dev tun

# key details. Note that here we built 2048 bit diffie hellman keys, not the default 1024 (edit $KEY_SIZE in the

# file “vars” before build)

ca /etc/openvpn/keys/ca.crt

dh /etc/openvpn/keys/dh2048.pem

cert /etc/openvpn/keys/vps-server-name.crt

key /etc/openvpn/keys/vps-server-name.key

# now use tls-auth HMAC signature to give us an additional level of security.

# Note that the parameter “0” here must be matched by “1” at the client end

tls-auth /etc/openvpn/keys/ta.key 0

# Configure server mode and supply a VPN subnet for OpenVPN to draw client addresses from.

# Our server will be on 172.16.10.1. Be careful here to choose an RFC1918 network which will not clash with

# that in use at the client end (or Host A in our scenario)

server 172.16.10.0 255.255.255.0

# Maintain a record of client virtual IP address associations in this file. If OpenVPN goes down or is restarted,

# reconnecting clients can be assigned the same virtual IP address from the pool that was previously assigned.

ifconfig-pool-persist ipp.txt

# We do /not/ push routes to the client because we are on a public network, not a reserved internal net.

# The default configuration file allows for this in the (example) stanzas below (commented out here)

# push "route 192.168.1.0 255.255.255.0"

# push "route 192.168.2.0 255.255.255.0"

# Nor do we tell the client (Host A) to redirect all its traffic through the VPN (as could be done). The purpose of this

# server is to allow us to reach a firewalled “client” on a protected network. These directives /would/ be useful if we

# wanted to use the VPS as a proxy to the outside world.

# push "redirect-gateway def1"

# push "dhcp-option DNS XXX.XXX.XXX.XXX"

# Nor do we want different clients to be able to see each other. So this remains commented out.

# client-to-client

# Check that both ends are up by “pinging” every 10 seconds. Assume that remote peer is down if no ping

# received during a 120 second time period.

keepalive 10 120

# The cryptographic cipher we are using. Blowfish is the default. We must, of course, use the same cipher at each end.

cipher BF-CBC

# Use compression over the link. Again, the client (Host A) must do the same.

comp-lzo

# We can usefully limit the number of allowed connections to 1 here.

max-clients 1

# Drop root privileges immediately and run as unprivileged user/group

user nobody

group nogroup

# Try to preserve some state across restarts.

persist-key

persist-tun

# keep a log of the status of connections. This can be particularly helpful during the testing stage. It can also

# be used to check the IP address of the far end client (Host A in our case). Look for lines like this:

#

# Virtual Address,Common Name,Real Address,Last Ref

# 172.16.10.6,client-name,XXX.XXX.XXX.XXX:nnnnn,Thu Oct 25 18:24:18 2012

#

# where “client-name” is the common name of the client certificate, XXX.XXX.XXX.XXX:nnnnn is the public IP address of the

# client (or Router A) and port number of the connection. This means that the actual client address we have a connection

# to is 172.16.10.6. We need this address when connecting from the VPS to Host A.

status /var/log/openvpn-status.log

# log our activity to this file in append mode

log-append /var/log/openvpn.log

# Set the appropriate level of log file verbosity

verb 3

# Silence repeating messages. At most 20 sequential messages of the same message category will be output to the log.

mute 20

# end of server configuration

Now the client end.

client.conf on Host A

# client side openvpn conf file

# we are a client

client

# and we are using a tun interface to match the server

dev tun

# and similarly tunneling over udp

proto udp

# the ip address (and port used) of the VPS server

remote XXX.XXX.XXX.XXX 1194

# if we specify the remote server by name, rather than by IP address as we have done here, then this

# directive can be useful since it tells the client to keep on trying to resolve the address.

# Not really necessary in our case, but harmless to leave it in.

resolv-retry infinite

# pick a local port number at random rather than bind to a specific port.

nobind

# drop all privileges

user nobody

group nogroup

# preserve state

persist-key

persist-tun

# stop warnings about duplicate packets

mute-replay-warnings

# now the SSL/TLS parameters. First the server then our client details.

ca /home/client/.openvpn/keys/server.ca.crt

cert /home/client/.openvpn/keys/client.crt

key /home/client/.openvpn/keys/client.key

# now add tls auth HMAC signature to give us additional security.

# Note that the parameter to ta.key is “1” to match the “0” at the server end.

tls-auth /home/client/.openvpn/keys/ta.key 1

# Verify the server certificate by checking that its key usage marks it as a server certificate.

remote-cert-tls server

# use the same crypto cipher as the server

cipher BF-CBC

# and also use compression over the link to match the server.

comp-lzo

# and keep local logs

status /var/log/openvpn-status.log

log-append /var/log/openvpn.log

verb 3

mute 20

# end
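The comments in the two files point out several settings that have to agree at both ends: the transport, the device type, the cipher, compression, and the tls-auth direction (0 on the server, 1 on the client). As a rough sketch, a small shell check along the following lines could catch a mismatch before the first connection attempt. The function names `directive` and `check_pair` are my own invention for illustration and are not part of openVPN.

```shell
#!/bin/sh
# Hypothetical helpers (not part of OpenVPN) for comparing two config files.

# Print the arguments of the first uncommented occurrence of directive $1
# in file $2. A bare flag such as comp-lzo prints "<set>"; an absent
# directive prints nothing.
directive() {
    awk -v d="$1" '
        $1 == d {
            $1 = ""
            sub(/^ +/, "")
            v = ($0 == "") ? "<set>" : $0
            print v
            exit
        }' "$2"
}

# Compare the directives that must match between server ($1) and client ($2).
check_pair() {
    server=$1
    client=$2
    rc=0
    for d in proto dev cipher comp-lzo; do
        s=$(directive "$d" "$server")
        c=$(directive "$d" "$client")
        if [ "$s" != "$c" ]; then
            echo "MISMATCH $d: server='$s' client='$c'"
            rc=1
        fi
    done
    # tls-auth takes "<keyfile> <direction>"; only the direction is compared.
    sdir=$(directive tls-auth "$server" | awk '{ print $2 }')
    cdir=$(directive tls-auth "$client" | awk '{ print $2 }')
    if [ "$sdir" != 0 ] || [ "$cdir" != 1 ]; then
        echo "MISMATCH tls-auth direction: server='$sdir' client='$cdir' (expected 0 and 1)"
        rc=1
    fi
    if [ "$rc" -eq 0 ]; then
        echo "OK"
    fi
    return "$rc"
}
```

With copies of both files to hand, `check_pair server.conf client.conf` prints “OK” or one line per disagreement. It only looks at the handful of directives used in this post, so treat it as a sanity check rather than a validator.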

Tunneling from Host A to the VPS.

Now that we have completed configuration files for both server and client, we can try setting up the tunnel from “Host A”. In order to do so, we must, of course, have openVPN installed on Host A and we must have copied the required keys and certificates to the directory specified in the client configuration file.

At the server end, we must ensure that we can forward packets over the tun interface to/from the eth0 interface. Check that /proc/sys/net/ipv4/ip_forward is set to “1”. If it is not, then (as root) do “echo 1 > /proc/sys/net/ipv4/ip_forward” and make the change permanent by uncommenting the line “net.ipv4.ip_forward=1” in /etc/sysctl.conf. We also need to ensure that neither the server nor the client blocks traffic over the VPN. According to the openVPN website, over 90% of all connectivity problems with openVPN are caused not by configuration problems in the tool itself, but by firewall rules.
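The forwarding check can be scripted if you prefer. What follows is only a sketch: the function name `forwarding_enabled` is made up for this post, and the optional path argument exists purely so that the check can be tried against a scratch file.

```shell
#!/bin/sh
# Succeed if IPv4 forwarding is enabled. The file to read is a parameter
# only so that the check can be tried against a scratch file.
forwarding_enabled() {
    [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}" 2>/dev/null)" = "1" ]
}

if forwarding_enabled; then
    echo "IPv4 forwarding is enabled"
else
    echo "IPv4 forwarding is off; as root run: sysctl -w net.ipv4.ip_forward=1"
fi
```

The “sysctl -w” form has the same effect as the echo into /proc and likewise only lasts until the next reboot. Once the line in /etc/sysctl.conf has been uncommented, “sysctl -p” (as root) re-reads that file without a reboot.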

If you have an iptables script on the server such as I use, then ensure that:

- the VPN port is open to connections from the public address of Host A (actually Router A in the diagram);

- you have modified the anti-spoof rules which would otherwise block traffic from RFC 1918 networks;

- you allow forwarding over the tun interface.

The last point can be covered if you add rules like:

$IPTABLES -A INPUT -i tun0 -j ACCEPT

$IPTABLES -A OUTPUT -o tun0 -j ACCEPT

$IPTABLES -A FORWARD -o tun0 -j ACCEPT

This would allow all traffic over the tunnel. Once we know it works, we can modify the rules to restrict traffic to only those addresses we trust. If you have a tight ruleset which only permits ICMP to/from the eth0 IP address on the VPS, then you may wish to modify that to allow it to/from the tun0 address as well or testing may be difficult.
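As an illustration of what “restrict traffic to only those addresses we trust” might look like on the VPS, here is a sketch which permits nothing over the tunnel except SSH from the VPS to Host A and the replies to it. It assumes that the broad tun0 rules above have been removed, that your default policies drop everything else, and that Host A has been given 172.16.10.6 as in my test. The function name is hypothetical. By default it is a dry run which simply prints the rules.

```shell
#!/bin/sh
# Dry run by default: each rule is printed rather than applied. To apply
# the rules for real, run as root with IPTABLES=iptables in the environment.
IPTABLES="${IPTABLES:-echo iptables}"
VPS_VPN=172.16.10.1      # tun0 address of the VPS (from server.conf)
HOSTA_VPN=172.16.10.6    # address handed to Host A (check the status log)

tunnel_ssh_rules() {
    # outbound SSH from the VPS to Host A over the tunnel
    $IPTABLES -A OUTPUT -o tun0 -p tcp -s "$VPS_VPN" -d "$HOSTA_VPN" --dport 22 -j ACCEPT
    # replies belonging to those already established connections
    $IPTABLES -A INPUT -i tun0 -p tcp -s "$HOSTA_VPN" -d "$VPS_VPN" --sport 22 -m state --state ESTABLISHED -j ACCEPT
}

tunnel_ssh_rules
```

Note that rules this tight will also stop the ping tests and any SSH connection initiated from Host A towards the VPS, so they are best applied only after testing is complete.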

Many “howtos” out there also suggest that you should add a NAT masquerade rule of the form:

$IPTABLES -t nat -A POSTROUTING -s 172.16.10.0/24 -o eth0 -j MASQUERADE

to allow traffic from the tunnel out to the wider network (or the internet if the server is so connected). We do not need to do that here because we are simply setting up a mechanism to allow connection through the VPS to Host A and we can use the VPN assigned addresses to do that.

Having modified the server end, we must make similarly appropriate modifications to any firewall rules at the client end before testing. Once that is complete, we can start openVPN at the server. I like to do this using the init.d run control script whilst I run a tail -f on the log file to watch progress. Watch for lines like “TUN/TAP device tun0 opened” and “Initialization Sequence Completed” for signs of success. We can then check that the tun interface is up with ifconfig, or ip addr. Given the configuration used in the server file above, we should see that the tun0 interface is up and has been assigned address 172.16.10.1.

Now to set up the tunnel from the client end, we can run (as root) “openvpn client.conf” in one terminal window and we can then check in another window that the tun0 interface is up and has been assigned an appropriate IP address. In our case that turns out to be 172.16.10.6. It should now be possible to ping 172.16.10.1 from the client and 172.16.10.6 from the server.
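If you would rather not read the address off the client, the status file on the VPS holds the same information, as noted in the comments in server.conf. The following is a sketch only: `vpn_addr_of` is a name I have made up, and it assumes the table layout shown in that comment (a header line starting “Virtual Address,” followed by one comma separated line per client).

```shell
#!/bin/sh
# Print the VPN address recorded for the client whose common name is $1.
# $2 is the status file; the default is the path set in server.conf.
vpn_addr_of() {
    awk -F, -v cn="$1" '
        /^Virtual Address,/  { in_table = 1; next }   # header of the address table
        in_table && NF < 4   { in_table = 0 }         # a shorter line ends the table
        in_table && $2 == cn { print $1 }
    ' "${2:-/var/log/openvpn-status.log}"
}

# Example (on the VPS, as a user who can read the log):
#   vpn_addr_of client-name
```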

Connecting over SSH from Client to VPS.

We should already be doing this! I am assuming that all configuration on the VPS has been done over an SSH connection from “Client” in the diagram. But the SSH daemon on the VPS may be configured in such a way that it will not permit connections over the VPN from “Host A”. Check (with netstat) that the daemon is listening on all network addresses rather than just the public IP address assigned to eth0. If it is not, then you will need to modify the /etc/ssh/sshd_config file to ensure that “ListenAddress” is set to “0.0.0.0”. And if you limit connections to the SSH daemon with something like tcpwrappers, then check that the /etc/hosts.allow and /etc/hosts.deny files will actually permit connections to the daemon listening on 172.16.10.1. Once we are convinced that the server SSH configuration is correct, we can try a connection from Host A to 172.16.10.1. If it all works, we can move on to configuring Host A.
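The netstat check can be reduced to a yes/no answer with a little awk. This is a sketch under the assumption that the local address appears in the fourth column, as it does in the output of “netstat -tln” on the Linux systems I have used. The function name is invented for the example.

```shell
#!/bin/sh
# Read `netstat -tln` output on stdin and succeed if something is listening
# on port 22 on either the wildcard address or the address given as $1.
listens_for_ssh() {
    awk -v want="$1:22" '
        $4 == "0.0.0.0:22" || $4 == want { found = 1 }
        END { exit !found }
    '
}

# Example:
#   netstat -tln | listens_for_ssh 172.16.10.1 && echo "sshd reachable over the VPN"
```

The same function serves on Host A later on if you pass it Host A's VPN address instead.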

Connect (using SSH again) over the openVPN tunnel from the VPS to Host A.

This is the crucial connection and is why we have gone to all this trouble in the first place. We must ensure that Host A is running an SSH daemon, configured to allow connections in over the tunnel from the server with address 172.16.10.1. So we need to make the same checks as we have undertaken on the VPS. Once we have this correctly configured we can connect over SSH from the server at 172.16.10.1 to Host A listening on 172.16.10.6.
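The two logins (Client to VPS, then VPS to Host A) can also be collapsed into a single command using the jump host option of OpenSSH. This is a variation on the method described above, not what I did at the time: it needs OpenSSH 7.3 or later on “Client”, the VPS must permit TCP forwarding, and authentication to Host A then happens from Client rather than from the VPS. The user and host names in the example are placeholders, and the wrapper function is hypothetical.

```shell
#!/bin/sh
# SSH can be overridden (for example SSH=echo) to print the arguments
# instead of opening a connection.
SSH="${SSH:-ssh}"

# Reach Host A in one step: ssh logs in to the VPS ($1, the jump host) and
# from there connects over the tunnel to Host A's VPN address ($2).
hop_to_host_a() {
    $SSH -J "$1" "$2"
}

# Example:
#   hop_to_host_a user@vps.example.org user@172.16.10.6
```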

Job done.

Addendum: 26 March 2013. For an alternative mechanism to achieve the same ends, see my later post describing ssh tunnelling.