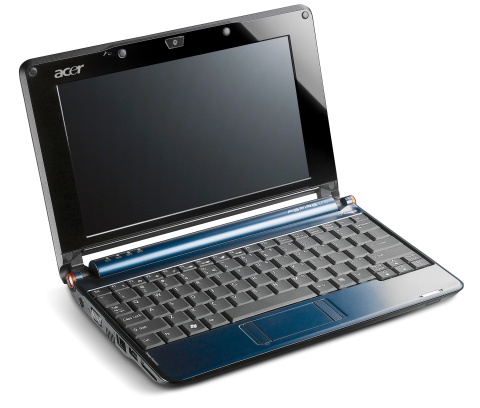

I mentioned in an earlier post that I had recently acquired an Acer Aspire One (AAO) netbook. I chose the AAO in preference to any other of the netbooks on the market for two reasons – firstly it looks a lot cooler than most of the competition (particularly in blue – see below), but secondly, and most importantly, the price was excellent. Apparently many people are buying the AAO with Linpus installed only to find that the machine is not compatible with Microsoft software – either that, or they are just not comfortable enough with an unfamiliar user interface to persevere. This is a shame. Linpus seems to have gained a reputation as “Fisher Price Computing” or “My First Computer”. In my view this is unfair. It does exactly what it says on the tin. The default Linpus install boots quickly, connects with both wired and wireless networks with ease and provides all that most users could want from a netbook – i.e. web browsing, email, chat and word processing.

Whatever the reasons for the returns, this means that there are a fair number of perfectly good AAOs coming back to Acer. Acer in turn is punting those machines back on to the market through various resellers as “refurbished”. Whilst I may be disappointed at the lack of engagement of the buying public with a perfectly usable linux based netbook, from a purely selfish viewpoint this means that I got hold of an excellent machine at well below the original market price. My machine is the AOA 150-Ab ZG5 model. This has the 1.6 GHz N270 atom processor, 1 GB DDR2 RAM and a 120 GB fixed disk. Not so very long ago a machine with that sort of specification (processor notwithstanding) would have been priced at close to £500. I got mine for under £190 including delivery. An astounding bargain.

To be frank, I didn’t really need a netbook, but I’m a gadget freak and I couldn’t resist the added functionality offered by the AAO over my Nokia N800 internet tablet. The addition of a usable keyboard, 120 Gig of storage, and a decent screen in a package weighing just over a kilo means that I can carry the AAO in circumstances where I wouldn’t bother with a conventional laptop. And whilst the N800 is really useful for casual browsing, the screen is too small for comfort, and ironically, I still prefer my PSP for watching movies on the move. So the N800 hasn’t had the usage I expected.

There are plenty of reviews of the AAO out there already, so I won’t add much here. This post is mainly about my experience in changing the default linux install for one I find more useful. As I said above, Linpus is perfectly usable, but the configuration built for the AAO is aimed at the casual user with no previous linux experience. Most linux users dump Linpus and install their preferred distro. Indeed, there is a very active community out there discussing (in typical reserved fashion) the pros and cons of a wide variety of distros. It is relatively simple to “unlock” the default Linpus installation to gain access to the full functionality offered by that distribution, but Linpus is based on a (fairly old) version of Fedora and I much prefer the debian way of doing things. So for me, it was simply a choice between debian itself, or one of the ubuntu based distros.

I run debian on my servers (and slugs) but ubuntu on my desktops and laptops so ubuntu seemed to be the obvious way to go. Some quick research led me to the debian AAO wiki which gives some excellent advice which is applicable to all debian (and hence ubuntu) based installations. Whilst this wiki is typically thorough in the debian way, it does make installation look difficult and the sections on memory stick usage, audio and the touchpad are not encouraging for the faint hearted. I was particularly disappointed at the advice to blacklist the memory stick modules because I actually want to use sony memory sticks (remember my PSP….).

The best resource I found, and the one that I eventually relied upon for a couple of different installations was the ubuntu community page. This page is being actively updated and now offers probably the best set of advice for anyone wishing to install an ubuntu derivative on any of the AAO models.

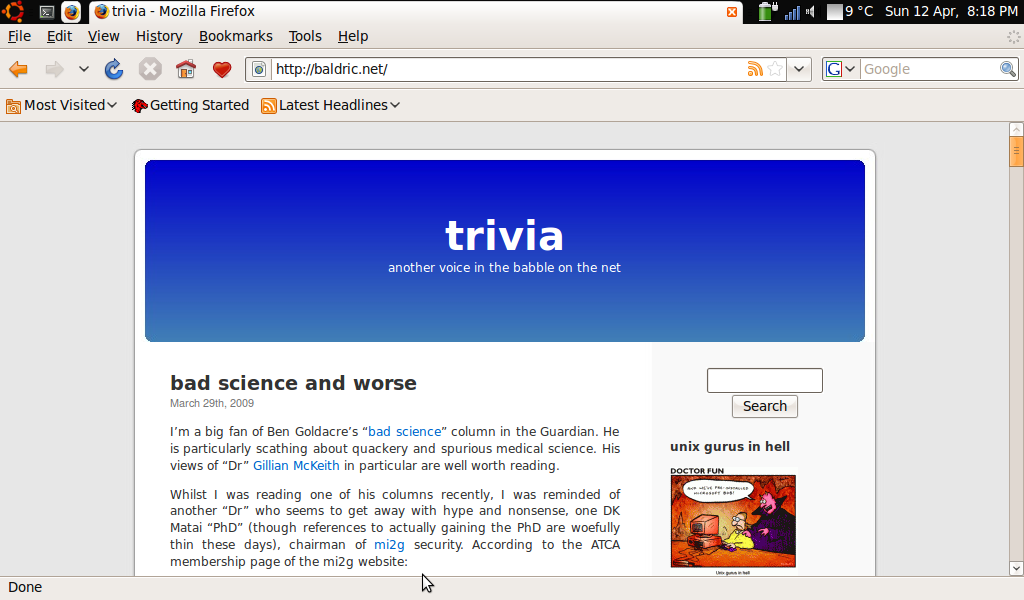

So, having played with Linpus for all of about two days, I dumped it in favour of xubuntu 8.10. I chose that distro because it uses the xfce window manager which is satisfactorily lightweight and fast on small machines (and because the default theme is blue and looks really cool on a blue AAO – see my screenshot below).

By following the advice on the ubuntu community help page for 8.10 installs, I managed to get a reasonably fast, functional and attractive desktop which made the most of the (admittedly cramped) AAO screen layout. I had some trouble with the default ath_pci wireless module (as is documented) so I opted for the madwifi drivers which worked perfectly. The only functions I failed to get working successfully remained the sony memory sticks in the RHS card reader (SD cards worked fine) and the internal microphone.

Further searching led me to the kuki linux site which gives as its objective “a fully working, out of the box replacement for Linpus on the AAO”. I like the objective, but not the distro. However, that distro uses a kernel compiled by “sickboy” which promised to offer full functionality. I tried that kernel with my xubuntu installation (with madwifi wireless drivers) and indeed everything worked – except the sony memory sticks. So I decided to see what else I could do.

By now, I had been using the xubuntu installation for about three or four weeks. However, a fresh visit to the ubuntu community site led me to consider testing jaunty in a “netbook remix” form. I had earlier dismissed this option at intrepid (8.10) because it looked too flaky and seemed like an afterthought rather than a well considered desktop build. I was pleasantly surprised at the look of the “live” installation of the beta of jaunty-nr so decided to give that a go for real. Jaunty comes with kernel 2.6.28-11 which is pretty much up to date and I guessed that playing with that might give me the complete distro I wanted. I was also quite taken with the desktop itself which makes the most of the limited AAO screen real estate by dispensing with traditional gnome panels and virtual desktops and offering a “tabbed” application layout akin to that used in maemo on the Nokia N800. So, following the instructions on the UNR wiki, I downloaded the latest daily snapshot from the cd-image site, and made a new USB install stick. (Note that it is worth using decent branded USB sticks here. I had no problem with a 4 Gig Kingston stick, but an unbranded freebie I tried was useless both here and in my earlier installations.) My newly installed desktop looked as below.

(Not blue, so not quite so cool, but hey.)

Note that the top panel only shows some limited information (battery and wireless connection status, time/date etc) whilst the left side of the screen shows menu options which would traditionally be given as drop down options from a toolbar and the right hand side of the screen is taken up by what would normally be called “places” (directories etc) on a standard ubuntu desktop. The centre of the screen gives the icons for the applications in the currently highlighted left side menu option. The overall effect is quite attractive and very easy to read. Selecting any application opens that application in full screen mode. Opening several applications leaves you with the latest application at the front and the others available as icons on the top panel. See my desktop below with firefox open as an example.

The completed installation with the default kernel does not allow for pci hotplugging and the RHS card reader doesn’t work. This is a retrograde step, but conversely, wireless worked properly with the atheros ath5k module (not the ath_pci module) in my snapshot. Earlier snapshots included a kernel in which the acer_wmi module had to be blacklisted because it conflicted with the ath5k wireless module. The fix for the pci hotplug problem involved passing “pciehp.pciehp_force=1” as an option to the kernel at boot time. Whilst this fixed the RHS card reader failure, I still couldn’t get the damned reader to recognise my memory sticks.
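After adding a boot option like this it is easy to lose track of whether the running kernel actually received it. The following is a minimal sketch, not part of the original setup: the function name has_kernel_param is purely illustrative, and it simply looks for an exact word match in /proc/cmdline (or in any file you pass as the second argument).

```shell
# has_kernel_param PARAM [CMDLINE_FILE]
# Hypothetical helper: succeeds if PARAM appears as a whole word on the
# kernel command line. CMDLINE_FILE defaults to /proc/cmdline, which
# holds the options the running kernel was booted with.
has_kernel_param() {
    param=$1
    file=${2:-/proc/cmdline}
    # Put each option on its own line, then look for an exact,
    # fixed-string, whole-line match.
    tr -s ' \t' '\n' < "$file" | grep -Fxq -- "$param"
}

# Example (run after rebooting with the option set):
#   has_kernel_param pciehp.pciehp_force=1 && echo "pciehp is being forced"
```

Matching whole words rather than substrings means that a stray “pciehp_force=1” without the module prefix is not mistaken for the real thing.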

So having found a distro I really like and can probably live with long term on my AAO, I need to address the remaining problems. Given the range of problems I was facing whichever distro I chose, I decided to bite the bullet and compile my own kernel. It was clear to me that the main problems with wireless were conflicting modules so it seemed obviously better to build a kernel with only the modules required rather than include modules which only had to be blacklisted. Similarly, the requirement to pass boot time parameters to the kernel meant that pci hotplug support and related code wasn’t modularised properly. It is pretty difficult to load and unload kernel modules which don’t exist.

It is some time since I last built a linux kernel (I think it was round about 1999 or 2000 on a redhat box) so I had to spend a fun few hours getting reacquainted with the necessary tools. Unfortunately there is a lot of old and conflicting advice around on the net about how best to do this nowadays – certainly the old “make”, “make modules_install”, “make install” routine doesn’t work these days. And mkinitrd seems long gone….. Unusually, the debian wiki site wasn’t as helpful as I expected but the ubuntu community came good again and the kernel compile advice is pretty good and reasonably up to date. Even here though, there are a couple of mistakes which gave me cause to stop and think. So, as an aide-memoire (largely for myself) I documented the steps I followed to get a kernel build for .deb installation images.

For my kernel build I took the latest available stable kernel from kernel.org (2.6.29.1) and used as a starting point the kernel config from jaunty-nr (i.e. the config for 2.6.28-11 as shipped by canonical). The standard ubuntu kernel is highly generic and, as I have found, hugely and unnecessarily bloated with unused options, debug code and unnecessary modules. (I may now rebuild the kernels on my standard desktops too.) To some extent this bloat is inevitable in a kernel which is aimed at a wide range of target architectures. Canonical have also done an excellent job of ensuring that practically any kernel module you could ask for is available should you plug in that weird USB device or install a PCI card giving some obscure capability that only 5% of users will ever need. But I want a kernel which works for a particular piece of hardware, and works well on that hardware. In particular I want my sony memory sticks to be available!

In configuring my kernel I took out all the obviously unnecessary code – stuff like support for specific hardware not in the AAO (AMD or VIA chips, parallel ports, Dell and Toshiba laptops etc. etc.), old and unnecessary networking code (apple, decnet, IPX etc. etc.) or network devices (token ring (!), ISDN etc. etc.). Sheesh, there is some cruft in there. More importantly I made sure that I built pci hotplug as a module (pciehp) and that the memory stick modules (mspro_block, jmb38x_ms and memstick) were built correctly. I also checked my config against sickboy’s (to whom I am indebted for some pointers when I was unsure of what to leave out). Sickboy has been quite brutal in excluding support for some devices I feel might be useful (largely in attached USB systems) so we differ. I feel that my kernel is still too large and could do with some pruning. But I’ve been conservative where I’m not sure of the impact of stripping out code.
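For anyone comparing configs, a quick way to confirm that those four drivers are set to build as modules is to grep the .config file for them. This is only a sketch: the function name check_kernel_config is made up for illustration, and the CONFIG_ option names are assumed from the 2.6.29-era Kconfig files (pciehp, memstick, mspro_block and jmb38x_ms respectively), so check them against your own tree before relying on it.

```shell
# check_kernel_config CONFIG_FILE
# Hypothetical helper: reports whether each option of interest is set
# to "m" (built as a module). Returns non-zero if any of them is not.
# Option names are assumptions based on the 2.6.29-era Kconfig files.
check_kernel_config() {
    config=$1
    status=0
    for opt in CONFIG_HOTPLUG_PCI_PCIE CONFIG_MEMSTICK \
               CONFIG_MSPRO_BLOCK CONFIG_MEMSTICK_JMICRON_38X; do
        # Anchor both ends so CONFIG_MEMSTICK does not match longer names.
        if grep -q "^${opt}=m\$" "$config"; then
            echo "$opt: module"
        else
            echo "$opt: NOT a module"
            status=1
        fi
    done
    return $status
}

# Example, from the top of the kernel source tree:
#   check_kernel_config .config
```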

My kernel now works and supports all the hardware in the AAO (well, my AAO as specified above). The only modifications required to get pci hotplug working are:

– add “pciehp” (without the quotes) to the end of /etc/modules to get the module to load.

– create a new file in /etc/modprobe.d (I called mine acer-aspire.conf) to include the line “options pciehp pciehp_force=1” (again without the quotes).
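The two changes above can be sketched as a small shell function. The function name enable_pciehp and the optional ROOT argument are illustrative additions (the prefix makes it possible to try the function on a scratch directory first); the file names and the lines written are the ones given above. On a real system it needs to be run as root.

```shell
# enable_pciehp [ROOT]
# Hypothetical helper: applies the two pciehp changes described above.
# ROOT is an optional directory prefix for a dry run; leave it empty to
# edit the live /etc (which requires root privileges).
enable_pciehp() {
    root=${1:-}
    modules_file="$root/etc/modules"
    conf_file="$root/etc/modprobe.d/acer-aspire.conf"
    line='options pciehp pciehp_force=1'

    mkdir -p "$root/etc/modprobe.d" || return 1

    # Append each line only if it is not already there, so the
    # function can safely be run more than once.
    grep -qx 'pciehp' "$modules_file" 2>/dev/null \
        || echo 'pciehp' >> "$modules_file"
    grep -qxF "$line" "$conf_file" 2>/dev/null \
        || echo "$line" >> "$conf_file"
}

# Example dry run against a scratch directory:
#   enable_pciehp /tmp/aao-test && cat /tmp/aao-test/etc/modules
```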

This means that both card readers work and are hot pluggable as are all the USB ports. Wireless works fine, and it restarts after hibernate/suspend. Audio and the webcam also both work fine (though the internal microphone is still crackly; I get better results from the external mike). In general I’m fairly happy with the kernel build. I have posted copies of the .deb kernel image and headers here along with my config if anyone wants to try it out. I’d be grateful for any and all feedback on how it works with various other AAO configs. You can email me about this at “acer[at]baldric.net”. I think the kernel should work on any AAO running debian lenny or an ubuntu distro of 8.04 or later vintage (but obviously I haven’t tested that fully). Potential users should note that both my kernel and sickboy’s omit support for IPv6 amongst other things. If you find you have a particular problem (say a missing kernel module when you plug in a USB gadget), please check the config file first. It is almost certain that I will have omitted module support for your okey-cokey 2000 USB widget.

To install the downloaded kernel package, either open it with the gdebi package installer or, from the command line, type:

dpkg -i /path/to/download/linux-image-2.6.29.1-baldric-0.7_2.6.29.1-baldric-0.7-10.00.Custom_i386.deb

(where /path/to/download/ obviously is the path to the directory containing the kernel package).

The package installer will modify your /boot/grub/menu.lst appropriately to allow you to select the new kernel. By default, your machine will boot into the new kernel so you may wish to modify the grub menu to allow you to choose which kernel to boot. I suggest you ensure that “##hiddenmenu” is actually commented out and that you set timeout to a minimum of 3 seconds to give you a chance to choose the appropriate kernel.
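If you would rather script the menu change than edit the file by hand, something along these lines should do it. This is a sketch under assumptions: the function name show_grub_menu is made up, and it expects a GRUB legacy menu.lst in which “hiddenmenu” and “timeout N” each sit on their own line, as in the stock ubuntu file. Take a backup copy of menu.lst before trying it.

```shell
# show_grub_menu MENU_LST [SECONDS]
# Hypothetical helper: comments out an active "hiddenmenu" line and
# sets the timeout (default 3 seconds) in a GRUB legacy menu.lst.
show_grub_menu() {
    file=$1
    secs=${2:-3}
    tmp=$(mktemp) || return 1
    # Only bare directive lines are touched; comment lines such as
    # "## hiddenmenu" or "## timeout sec" are left alone.
    if sed -e 's/^hiddenmenu[[:space:]]*$/#hiddenmenu/' \
           -e "s/^timeout[[:space:]][[:space:]]*[0-9][0-9]*[[:space:]]*\$/timeout $secs/" \
           "$file" > "$tmp"; then
        # Write back in place so ownership and permissions are kept.
        cat "$tmp" > "$file"
        rc=$?
    else
        rc=1
    fi
    rm -f "$tmp"
    return $rc
}

# Example (as root, after backing up the file):
#   cp /boot/grub/menu.lst /boot/grub/menu.lst.bak
#   show_grub_menu /boot/grub/menu.lst 3
```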

I have made every effort to ensure that the kernel works as described, but as always, caveat emptor. If it breaks, you get to keep the pieces. So you really need to ensure that you can boot back into a known good kernel before you boot into mine.

Enjoy.

I would be particularly grateful to hear from anyone who has managed to get sony memory sticks to work. Despite everything I have tried (all the modules load correctly) the bloody things still don’t work on my machine.