I have recently been off-line. And I am less than happy about the reasons.

My ISP recently informed me that it was changing its back-end provider from Entanet to Vispa. Like many small ISPs, my provider does not have any real infrastructure of its own; it simply repackages services provided by a wholesaler who does have the necessary infrastructure, in a process commonly called “whitelabelling”. This whitelabel approach is particularly common amongst providers of webspace and it normally works fine. Amongst the smaller ISPs there are many who are simply Entanet resellers. And until recently Entanet had a good name for pretty solid service. Well, not any more.

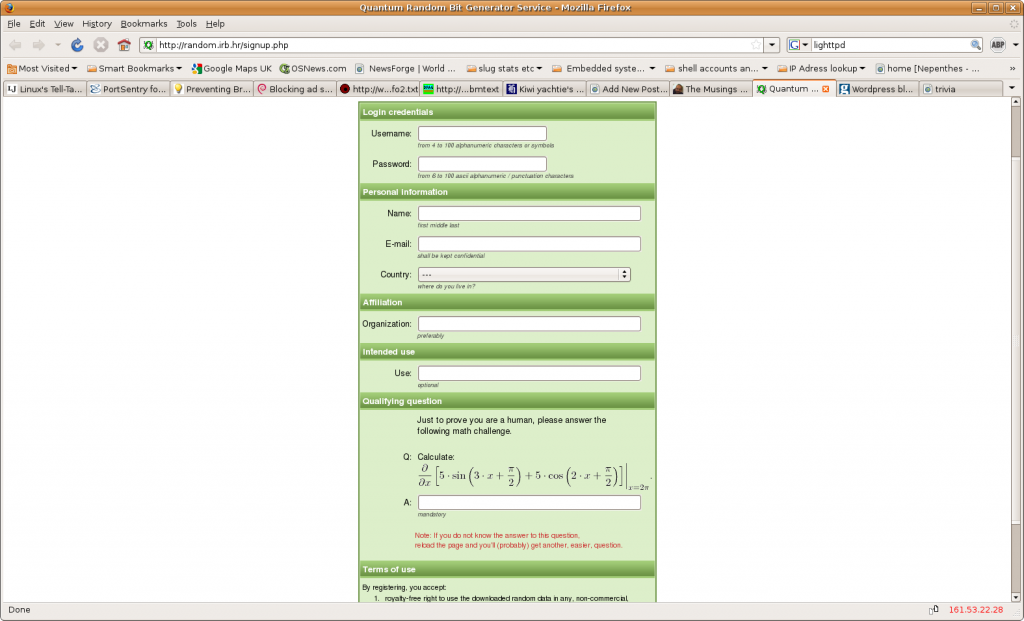

I had not noticed any particular problems and was slightly surprised to hear from my ISP that they were unhappy with the service they were getting from Entanet. Apparently there had been frequent network outages for many of their customers and so they had chosen a new provider and were notifying their customers of impending moves. Of course this would mean some local configuration changes, so customers were advised in advance of those changes and the dates for action. Apart from preparing to change the ADSL login details on my router, in my case I also had to ensure that my SSH and other login details on various external services I have or use were modified to accept the new fixed IP address assigned to my router. (I tend to lock down such services so that they only accept connections from my IP address. Not foolproof, I know, but it all helps.)

In the migration advice letter, my ISP advised its customers to set up new direct debit arrangements for Vispa and cancel the existing ones to Entanet. That letter advised that any over or under charge either way during transition would be sorted out between the providers. So I did as I was advised and waited for the big day (approximately 10 days away). Big mistake.

About a week before the date of transition I found my web traffic intercepted and blocked by Entanet with the message “Your account has been blocked. Please contact your internet service provider”. This blockage only occurred on web traffic (my email collection over POP3S and IMAPS continued to work, as did ICMP echo requests and ssh connections out). This action actually pissed me off even more than I would have been if Entanet had completely cut my connection. It also, incidentally, betrayed the fact that they were using a transparent web proxy on the connection – not something that makes me very happy. But simply blocking web traffic was obviously designed to annoy me and make me contact my ISP, and strongly suggests to me that Entanet were unsure of their legal right to cut me off completely. Further, in my view, intercepting my web traffic in this way may actually have been illegal.

Interestingly, even http traffic aimed inbound to my ADSL line (where I run a webcam on one of my slugs) was similarly intercepted as is evidenced by this link from changedetection.com. Obviously, the imposition of the message from Entanet was picked up by changedetection as an actual change to that web page.

So I emailed Entanet and my ISP, pointing out that my contract was with my ISP and not Entanet, and told them to sort it out between themselves. I, as a customer, did not expect to be penalised simply because my ISP had decided to change its wholesaler. Meanwhile, I decided to bypass Entanet’s pathetic and hugely irritating web block by tunnelling out to a proxy of my own. Of course I could have used my existing tor connection, but that is not always as fast as I would like, particularly at peak web usage hours, so I set up a new proxy on another of my VPSs using tinyproxy, listening on localhost port 8118 (the same as privoxy on my tor node). I then set up an ssh listener on my local machine, forwarding to that port on the VPS, and set firefox to use that listener as its proxy – again, much as I had for tor. Bingo. Stuff you Entanet.
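For anyone wanting to do something similar, here is a minimal sketch of the sort of ssh listener described above. It is not the exact command I ran: the host name `vps.example.org`, the user name and the helper function are all placeholders for illustration, and I am assuming the proxy on the VPS listens on 127.0.0.1 port 8118. The script only builds and prints the ssh command; it does not run it.

```python
import shlex


def build_tunnel_command(vps_host, local_port=8118, remote_port=8118, user=None):
    """Build an ssh command that forwards a local port to a proxy on the VPS.

    Hypothetical helper: host, user and ports are assumptions, not the
    author's real settings.
    """
    target = f"{user}@{vps_host}" if user else vps_host
    # -N: do not run a remote command, just forward ports.
    # -L: listen on 127.0.0.1:local_port here and forward each connection
    #     to 127.0.0.1:remote_port as seen from the VPS (where the proxy is).
    forward = f"127.0.0.1:{local_port}:127.0.0.1:{remote_port}"
    return ["ssh", "-N", "-L", forward, target]


if __name__ == "__main__":
    # Print the command so it can be inspected before being run by hand.
    print(shlex.join(build_tunnel_command("vps.example.org", user="me")))
```

With a tunnel like that running, the browser's HTTP proxy setting would point at 127.0.0.1 port 8118, so the web requests travel inside the ssh connection rather than as plain web traffic over the ADSL line.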

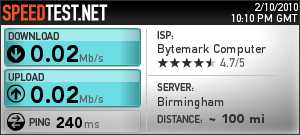

Unfortunately, it did not stop there. Entanet’s rather arrogant response to my email was to insist that I re-establish a direct debit with them for the few days remaining before the changeover (despite them having had my payment in advance for the month in question). No way, so I ignored this request only to find that Entanet then throttled my connection to 0.02 Mb/s – see the speedtest result below.

This sort of speed is just about usable for text-only email, but is absolutely useless for much else. Now I had originally been given two separate dates for the changeover by my ISP, so in a fit of over-enthusiastic optimism on my part, I tried to convince myself that the earlier (later corrected) date given was the correct one and so I reconfigured my router in the hope it would connect to Vispa. No deal. Worse, when I then tried to fall back to the (pitiful) Entanet connection, I found it blocked completely. I was thus without a connection for some four days (including a very long weekend).

So far my new connection looks good. But apart from my disgust with Entanet, I have not been overly impressed with the support I have received from my ISP during these problems. I’ll keep an eye on things – I may yet move of my own volition.

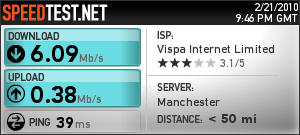

[Addendum] Just by way of comparison, the test result below is what I expect my connection speed to look like. Test run at around 21.45 on Sunday 21 February 2010.

That’s a bit better. Note however that this test was direct from Vispa’s network rather than through my ssh tunnel.