As I have mentioned in other posts here, I run my own mail server on one of my VMs. I do this for a variety of reasons, but the main one is that I like to control my own network destiny. Back in October last year I noticed an interesting change in my mail experience with my HTC mobile (actually my wife first noticed it and blamed me, assuming that I had “twiddled with something” as she put it). Heaven forfend.

My mail setup is postfix/dovecot with SASL authentication and TLS protecting the mail authentication exchange. My X509 certs are self generated (and so not signed by any CA). I pick up mail over IMAPS (when mobile) and POP3S (at home – for perverse reasons of history I like to actually download mail to my main desktop over POP3 and archive it to two separate NAS backups). I send via the standard SMTP port 25 but require authentication and protect the exchange with TLS.

My mail had been working fine ever since I set it up some years ago, but as I said, back in October my wife complained that she could no longer send email from her HTC mobile (we both use t-mobile as the network provider). She was at work at the time so away from my home network. Both our phones are setup to use use wifi for connectivity where it is available (as it is at home of course). When my wife complained I checked my phone and it could send and receive without problem. But when I switched wifi off, thus forcing the data connection though the mobile network, I got the same problem as my wife reported. On checking my mail server logs I read this:

postfix/smtpd[28089]: connect from unknown[149.254.186.120]

postfix/smtpd[28089]: warning: network_biopair_interop: error reading 11 bytes from the network: Connection reset by peer

postfix/smtpd[28089]: SSL_accept error from unknown[149.254.186.120]:-1

postfix/smtpd[28089]: lost connection after STARTTLS from unknown[149.254.186.120]

postfix/smtpd[28089]: disconnect from unknown[149.254.186.120]

(the ip address is one of t-mobile’s servers on their “TMUK-WBR-N2” network)

Everything I could find about that sort of message suggested that the client was tearing down the connection because there was something wrong with the TLS handshake and it was not trusted. Checking earlier logs, I found that t-mobile’s address had apparently changed (to the address above) recently. So I assumed that some recent network change following the Orange/T-mobile merger had been badly managed and all would be well again as soon as the problem was spotted. Wrong. It persisted. So I had to investigate further. As part of my investigation of the error, I tried moving mail from port 25 to 587 (submission) because that sometimes gets around the problem of ISPs blocking, or otherwise interfering, with outbound connections from their networks to port 25, No deal. In fact it looked as if t-mobile were blocking all connections to port 587 (I assumed a whitelisting policy block, or again, a cockup).

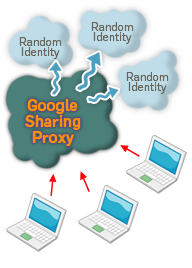

So, the scenario was: mail works when connecting over wifi and using my domestic ISP’s network, but doesn’t when using t-mobile’s 3G network. Symptoms point to a lack of trust in the TLS handshake. Tentative conclusion? There is an SSL/TLS proxy somewhere in the mobile operator’s chain. That proxy sucessfully negotiates with our phones, but when it gets my self certified X509 cert from the server. it can’t authenticate it and decides that the connection is untrusted so tears it down. My server sees this as the client (my phone) tearing down the connection. [As it turns out, this conclusion was completely wrong, but hey].

I said in an email at the time to a friend whose advice I was seeking, “I suspect cockup rather than outright conspiracy, but if my telco is dumb enough to stick a MITM ssl proxy in my mail chain, they really ought to have thought about handling self signed certs a little better. Otherwise it sort of gives the game away.”

In response, he very sensibly suggested that I should run a sniffer on the server and check what was going on. At that time, I was busy doing something else so I didn’t. And because the problem was intermittent (and my wife stopped complaining) I never got around to properly investigating further. (I should explain that I rarely send mail from my mobile nowadays. I just read mail there and wait until I get home to a decent keyboard and can reply to whatever needs handling from there. My wife just gave up bothering to try).

I should have persisted because of course I wasn’t the only one to experience this problem.

Back in November, a member of the t-mobile discussion forum called “dpg” posted a message complaining that he could not connect to port 587 over t-mobile’s 3G network. In response, a member of the t-mobile forum team suggested that dpg might reconfigure his email so that it was relayed via t-mobile’s own SMTP server. Not unreasonably, dpg didn’t think this was an acceptable response – not least because he would then have to send his email in clear. He then posted again saying that “the TLS handshake fails when the mail client receives a TCP packet with the reset (RST) flag set.” (This is a bad thing (TM). Further, he posted again saying that he had set up his own mail server and repeated earlier tests so that he could see both ends of the connection. At the client side he posted mail from his laptop tethered to his phone which was connected to the t-mobile 3G network. By running sniffers at both ends of the connection he was able to prove to his own satisfaction that something in the t-mobile network was sending a RST and tearing down any connection when a STARTTLS was seen. Again, in a later post in response to one from another poster who apparently manages several mail servers and had been looking at the same issue for a client, dpg says:

“I must say I’m not too pleased to discover that T-Mobile may be snooping all traffic to check for SMTP messages. I have demonstrated that they may be doing this by running a SMTP server on a non-standard port and finding that they still sent TCP reset packets during TLS negotiation – so they must be examining all packets and not just those destined for TCP ports 25 and 587.

I’m also not that keen on T-Mobile spoofing/forging TCP resets. This is the sort of tactic resorted to by the Great Firewall of China (https://www.lightbluetouchpaper.org/2006/06/27/ignoring-the-great-firewall-of-china/) and also by Comcast back in 2007 (https://www.eff.org/wp/packet-forgery-isps-report-comcast-affair) until the US FCC told them to stop (https://hraunfoss.fcc.gov/edocs_public/attachmatch/FCC-08-183A1.pdf).”

Then 9 days ago, dpg posted this message:

“I finally got to the bottom of this. I was contacted by T-Mobile technical support today and was told that they are now actively looking for and blocking any TLS-secured SMTP sessions. So, it is a deliberate policy after all, despite what the support staff have been saying on here, twitter and on 150. They told me it is something they have been rolling out over the last three months – which explains why it was intermittent and dependent on IP address and APN to begin with.

So, the only options for sending email over T-Mobile’s network are:

– unencrypted but authenticated SMTP (usually on port 25)

– SSL-encrypted SMTP (usually on port 465)

– unauthenticated and unencrypted email to smtp.t-email.co.uk

TLS-encrypted SMTP sessions are always blocked whether or not they are on the default port of 587.”

(As an aside, there is, of course, another alternative. You can ditch t-mobile as your provider and pick one which doesn’t use DPI to screw your connections. You pays your money….)

Following this, a new poster called “mickeyc” said this:

“I’ve been experiencing this exact same problem. I run my own mail server which has SSL on port 465 and also uses TLS on port 587. I used wireshark to confirm that the RST packets are being spoofed. This is the exact same technology used by “The Great Firewall of China”. I have two t-mobile sims. One is about a year old and doesn’t experience this problem (yet), one is a few weeks old and does.”

He went to say that he had also experienced problems with his OpenVPN connections and would be blogging about the problem (damned bloggers get everywhere) and sure enough, Mike Cardwell did so at grepular.com. That blog post is worth reading because it has an interesting set of comments and responses from Mike appended.

Mike’s post seems to have been picked up by a few others (El Reg has one, and as Mike himself has pointed out, boingboing.net has a particularly OTT post which seems to say that he is accusing t-mobile of something he clearly isn’t.

Finally, two days ago, dpg posted this:

“I’m pleased to report that T-Mobile is no longer blocking TLS-secured email on port 587. As a follow-up to an email exchange over the Christmas period I was contacted today to say that, contrary to what I had been told previously, it was never a deliberate policy to block TLS-secured outgoing email. There was a problem with some equipment after all, which was resolved yesterday.”

I tried again myself today. Initially, I got the same old symptoms (“lost connection after STARTTLS”) then I rebooted my ‘phone and lo and behold I could send email.

Like Mike, I tend to the cockup over conspiracy theory, it’s more likely for one thing. IANAL, but it seems to me that it would be in breach of RIPA part I, Unlawful Interception, for the telco to intercept my SMTP traffic in the way it seems to have been doing. That is not likely to be a deliberate act by a major UK mobile network provider.

But I’ll still keep an eye on things.