Apparently the latest release of the iPhone OS (v 3.1) has caused a few minor problems with WiFi and battery life. This has led El Reg to moan about the fact that you can’t downgrade the iPhone OS to an earlier version. I’m no great fan of Apple, but to be fair, this situation is not unique to them. Each time I update my PSP to the latest software release, I receive a warning that I cannot revert to the earlier version after upgrade. Not being an iPhone user, I don’t know whether you get a similar warning from Apple before the upgrade or not. But that aside, it does not strike me as unreasonable that Apple should prefer you to keep your OS as current as possible. Software upgrades are generally designed to fix bugs and/or introduce new features. If a particular upgrade has problems, then I would expect the supplier to fix those problems with a new release or a service pack. I would not expect them to recommend that you downgrade.

Permanent link to this article: https://baldric.net/2009/11/29/apple-antipathy-may-be-misplaced/

Nov 15 2009

system monitoring with munin

A while back a friend and colleague of mine introduced me to the server monitoring tool called munin which he had installed on one of the servers he maintains. It looked interesting enough for me to stick it on my “to do” list for my VPSs. Having a bunch of relevant stats presented in graphical form all in one place would be useful. So this weekend I decided to install it on both my mail and web VPS and my tor node.

Munin can be installed in a master/slave configuration where one server acts as the main monitoring station and periodically polls the others for updated stats. This is the setup I chose, and now this server (my web and mail host) acts as the master and my tor node is a slave. Each server in the cluster must be set to run the munin-node monitor (which listens by default on port 4949) to allow munin itself to connect and gather stats for display. The configuration file allows you to restrict connections to specific IP addresses. On the main node I limit this to local loopback whilst on the tor node I allow the master to connect in addition to local loopback. And just to be on the safe side, I reinforced this policy in my iptables rules.
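The access restriction described above can be sketched in a few lines of python. This is only an illustration: it assumes that munin-node.conf takes one "allow" directive per permitted address and treats the value as a regular expression, and 192.0.2.10 is a placeholder standing in for the master's address, not a real host of mine.

```python
import re


def allow_lines(addresses):
    """Return munin-node.conf 'allow' directives, one per IP address."""
    # Each 'allow' value is assumed to be a regular expression, so the dots
    # are escaped and the pattern is anchored at both ends.
    return ["allow ^" + re.escape(addr) + "$" for addr in addresses]


if __name__ == "__main__":
    # 127.0.0.1 for local loopback, plus a placeholder for the master.
    for line in allow_lines(["127.0.0.1", "192.0.2.10"]):
        print(line)
```

Anchoring the pattern matters because an unanchored expression would also match any longer address which merely contains the permitted one.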

The graphs are drawn using RRDtool, which can be a little heavy on CPU usage, certainly too heavy for the slugs which ruled out my installing the master locally rather than on one of the VPSs. But the impact on my bytemark host looks perfectly acceptable so far.

One of the neatest things about munin is its open architecture. Statistics are all collected via a series of plugins. These plugins can be written in practically any scripting language you care to name. In the plugins which came by default with the standard debian install of munin I found plugins mostly written as shell scripts with the occasional perl script. However, a couple of the additional scripts I installed were written in php and python. The standard set of plugins covers most of what you would expect to monitor on a linux server (cpu, memory, i/o, process stats, mail traffic etc.), but there were two omissions which were quite important to me. One was for lighttpd, the other for tor. I found suitable candidates on-line pretty quickly though. The tor monitor plugin can be found on the munin exchange site (a repository of third party plugins). I couldn’t find a lighttpd plugin there but eventually picked one up from here (thomas is clearly not a perl fan).
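To give a feel for how little a plugin has to do, here is a minimal sketch in python. It is not one of the plugins mentioned above; the graph title and the field name "load" are made up for illustration. It relies only on the basic plugin convention: when called with the argument "config" the plugin describes its graph, and when called with no argument it prints the current values.

```python
#!/usr/bin/env python3
import os
import sys


def render(args):
    """Return the lines munin-node expects for the given plugin arguments."""
    if args and args[0] == "config":
        # Called with "config" to describe the graph and its fields.
        return [
            "graph_title Load average (example)",
            "graph_vlabel load",
            "graph_category system",
            "load.label 1 minute load",
        ]
    # Called with no argument on each poll to report the current value.
    one_minute = os.getloadavg()[0]
    return ["load.value %.2f" % one_minute]


if __name__ == "__main__":
    print("\n".join(render(sys.argv[1:])))
```

Keeping the output in a function which returns lines, rather than printing directly, makes the plugin easy to check by hand before it is linked into the plugins directory.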

Most plugins (at least those supplied by default in the debian package) “just work”, but some do need a little extra customisation. For example the “ip_” plugin (which monitors network traffic on specified IP addresses) gets its stats from iptables and assumes that you have an entry of the form:

-A INPUT -d 192.168.1.1

-A OUTPUT -s 192.168.1.1

at the top of your iptables config file. You also need to ensure that the “ip_” plugin is correctly named with the suffix formed of the IP address to be monitored (e.g. “ip_” becomes “ip_192.168.1.1”). The simplest way to do this (and certainly the best way if you wish to monitor multiple addresses) is to ensure that the symlink from “/etc/munin/plugins/ip_” to “/usr/share/munin/plugins/ip_” is named correctly. Thus (in directory /etc/munin/plugins):

ln -s /usr/share/munin/plugins/ip_ ip_192.168.1.1
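If you have several addresses to monitor, the same step can be scripted. The following is a rough python sketch rather than anything munin provides; the function name is my own, and the two paths are simply those given above. It skips any link which already exists, so it can be run repeatedly.

```python
import os

PLUGIN_SOURCE = "/usr/share/munin/plugins/ip_"  # plugin path from the text
PLUGIN_DIR = "/etc/munin/plugins"               # link directory from the text


def link_ip_plugins(addresses, plugin_dir=PLUGIN_DIR, source=PLUGIN_SOURCE):
    """Create one ip_<address> symlink per address; return the links created."""
    created = []
    for addr in addresses:
        link = os.path.join(plugin_dir, "ip_" + addr)
        # Leave existing links alone so the function is safe to re-run.
        if not os.path.islink(link):
            os.symlink(source, link)
            created.append(link)
    return created
```

Calling link_ip_plugins(["192.168.1.1", "192.168.1.2"]) as root would create both links in one go. The node would then presumably need a restart to pick up the new plugins.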

The lighttpd plugin I found also needs a little bit of work before you can see any useful stats. The plugin connects to lighty’s “server status” URL to gather its information. So you need to ensure that you have loaded the mod_status module in your lighty config file and that you have specified the URL correctly (any name will do, it just has to be consistent in both the lighty config and the plugin). It is also worth restricting access to the URL to local loopback if you are not going to access the stats directly from a browser from elsewhere. This sort of entry in your config file should do:

server.modules += ( "mod_status" )

$HTTP["remoteip"] == "127.0.0.1" {

status.status-url = "/server-status"

}
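For the curious, the way a plugin might read that status URL can be sketched in python as below. This is not the plugin I installed. It assumes that lighty's status page offers a plain text form when "?auto" is appended to the URL, made up of "Key: value" lines, and the function names are hypothetical.

```python
from urllib.request import urlopen


def parse_status(text):
    """Turn 'Key: value' lines into a dictionary of strings."""
    stats = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # ignore any line which has no colon
            stats[key.strip()] = value.strip()
    return stats


def fetch_status(url="http://127.0.0.1/server-status?auto"):
    """Fetch and parse the status page (the URL must match the lighty config)."""
    with urlopen(url, timeout=5) as resp:
        return parse_status(resp.read().decode("ascii", "replace"))
```

Because the request comes from the server itself, it passes the local loopback restriction in the config entry above.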

The tor plugin connects to the tor control port (9051 by default) but this port is normally not configured because it poses a security risk if configured incorrectly. Unless you also specify one of “HashedControlPassword” or “CookieAuthentication”, in the tor config file, then setting this option will cause tor to allow any process on the local host to control it. This is a “bad thing” (TM). If you choose to use the tor plugin, then you should ensure that access to the control port is locked down. The tor plugin assumes that you will use “CookieAuthentication”, but the path to the cookie is set incorrectly for the standard debian install (which sets the tor data directory to /var/lib/tor rather than the standard /etc/tor).
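A minimal python sketch of cookie authentication against the control port follows. It is an illustration under stated assumptions, not the plugin's own code: I assume the cookie lives in a file called control_auth_cookie in the tor data directory (so /var/lib/tor on debian), that the control protocol expects it hex encoded after the AUTHENTICATE keyword, and that a reply beginning 250 means success. The function names are mine.

```python
import binascii
import socket


def authenticate_command(cookie):
    """Build the AUTHENTICATE line for a raw (binary) cookie."""
    return b"AUTHENTICATE " + binascii.hexlify(cookie) + b"\r\n"


def authenticate(cookie_path="/var/lib/tor/control_auth_cookie",
                 host="127.0.0.1", port=9051):
    """Return True if tor accepts the cookie on its control port."""
    with open(cookie_path, "rb") as fh:
        cookie = fh.read()
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(authenticate_command(cookie))
        reply = sock.recv(1024)
    # Replies starting with 250 are assumed to indicate success.
    return reply.startswith(b"250")
```

Whichever user runs the plugin needs read access to the cookie file, which is the practical reason the cookie path matters.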

So far it all looks good, but I may add further plugins (or remove less useful ones) as I experiment with munin over the next few weeks.

Permanent link to this article: https://baldric.net/2009/11/15/system-monitoring-with-munin/

Nov 11 2009

OSS shouldn’t frighten the horses

Since I first read that Nokia were adding much needed telephony capability to their N8x0 range of internet tablets I have been watching the development of the new Nokia N900 with much interest. It looks to be potentially the sort of device I would buy. Despite all the hype around the iPhone, I really dislike Apple’s proprietary approach to locking in its customers and I hate even more its use of DRM. So the emergence of a device which uses Linux based software such as Maemo and which is obviously targeted at the iPhone’s market looks to me to be very interesting. But some of the advertising is starting to look scary….

(I still want one though.)

Permanent link to this article: https://baldric.net/2009/11/11/oss-shouldnt-frighten-the-horses/

Nov 01 2009

a free (google) service is worth exactly what you pay for it

I note from a recent register posting that some gmail users are objecting to the fact that google’s mail service has failed yet again. El Reg even quotes one disgruntled user as saying:

“More than 30 hours without email…totally unacceptable. I’ll definitely have to reconsider my selection of gmail for my primary email account. It may be I have to pay for an account but hell will freeze over before I pay one penny to Google after this debacle.”

Umm, maybe it’s me, but I fail to understand how anyone can complain when a free service stops working. There is a good reason why people pay for services. Paying gives you the option of a contract with an SLA. If the service you are paying for includes storage of your data (as in the corporate data centre model) then your contract should include all the necessary clauses which will ensure that your data is stored securely, is reachable via resilient routes in case of telco failure, is backed up and/or mirrored to a separate site (to which service should fail over automatically in case of loss of the primary) etc. The contract should also ensure that your data remains yours if the hosting company fails, goes out of business or is taken over.

All of that costs money – lots of money in some cases.

Anyone who entrusts their email to a third party provider without ensuring that they have a decent contractual relationship with that provider (through a paid contract) is, in my view, asking for trouble. Most email users nowadays are heavily dependent upon that medium for communication. I know I would have real difficulty coping without it. Outside of my work environment, I pay for my personal email service. And I am happy to do so. In fact, on some domains I own, I even run my own mail servers (with backups). That costs time and money, but it ensures that my email is available when I expect it to be.

So, google users, stop whining and think again. A proper email service will only cost you a few pounds – and there are plenty of other reasons for not using google’s email service (not least the fact that your email is scanned by google to enable them to target you with their adverts).

Permanent link to this article: https://baldric.net/2009/11/01/a-free-service-is-worth-exactly-what-you-pay-for-it/

Oct 29 2009

call me by name, or call me by value

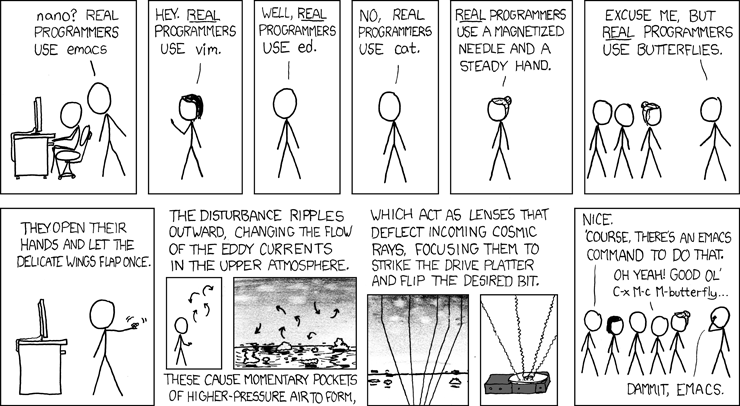

The old saw about “real” programmers versus the rest (known as “quiche eaters”) was originally summarised beautifully in the classic letter to the editor of Datamation in July 1983 entitled “real programmers don’t use pascal”. (See also this link.)

Similar religious (i.e. irrational, but deeply held) positions are taken around various other “lifestyle” choices, such as the equally classic emacs vs vi argument. (For the record I’m in the vi camp – you know it makes sense). So I was delighted to stumble across this from xkcd.

Again, my thanks to xkcd.

Permanent link to this article: https://baldric.net/2009/10/29/call-me-by-name-or-call-me-by-value/

Oct 28 2009

handbags

It would appear that I may have been unnecessarily concerned about the accuracy of the profiling data held on me by the commercial sites I use. In my inbox today I found the following email from Amazon:

“As a valued Amazon.co.uk customer, we thought you might be interested in visiting our website dedicated to shoes and handbags, Javari.co.uk.

Javari.co.uk offers Free One-Day Delivery, Free Returns, and a 100% Price Match Guarantee.

Welcome to Javari.co.uk”

I don’t know whether to feel reassured at Amazon’s failure to understand me or disappointed that the considerable resource they have at their disposal can get me so wrong.

Permanent link to this article: https://baldric.net/2009/10/28/handbags/

Oct 18 2009

logrotate weirdness in debian etch

I have two VPSs, both running debian. One runs lenny, the other runs etch. The older etch install runs fine, and is much as the supplier delivered it. Until now I have not had cause to consider the need to upgrade the etch install to lenny because it “just worked”. But today I noticed for the first time a very odd difference between the two machines. A difference which had me scratching my head, and reading too many man entries, for some long time before I found the answer.

For reasons I don’t need to go into, I log all policy drops in my iptables config to a file called “/var/log/firewall”. This file is (supposedly) rotated weekly. The logrotate and cron entries on both machines are identical. The entry in “/etc/logrotate.d/firewall” looks like this:

/var/log/firewall {

rotate 6

weekly

mail logger@baldric.net

compress

missingok

notifempty

}

The (standard) file “/etc/logrotate.conf” simply calls the firewall logrotate file out of the included directory “/etc/logrotate.d”. The “/etc/cron.daily/logrotate” file (which calls the logrotate script) is also standard and simply says:

#!/bin/sh

test -x /usr/sbin/logrotate || exit 0

/usr/sbin/logrotate /etc/logrotate.conf

and the (again standard) crontab file says:

# /etc/crontab: system-wide crontab

# Unlike any other crontab you don't have to run the `crontab'

# command to install the new version when you edit this file

# and files in /etc/cron.d. These files also have username fields,

# that none of the other crontabs do.

SHELL=/bin/sh

PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin

# m h dom mon dow user command

54 * * * * root cd / && run-parts --report /etc/cron.hourly

55 4 * * * root test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.daily )

36 5 * * 7 root test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.weekly )

3 3 5 * * root test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.monthly )

#

So far so simple, and you would expect the file “/var/log/firewall” to be rotated once a week at 04.55 on a sunday morning. Wouldn’t you?

Well, on lenny, you’d be right. But on the etch machine the file was rotated daily at a time completely unrelated to the crontab entry. It turns out that there is a bug in the way etch handles logrotation because syslog doesn’t use logrotate and overrides the logrotation entries run out of cron. I found this after much searching (and swearing).

See the bug report at bugs.debian.org and this entry which pointed me there.

I love standards.

Permanent link to this article: https://baldric.net/2009/10/18/logrotate-weirdness-in-debian-etch/

Oct 15 2009

where has my money gone

Like most ‘net users I know these days, I conduct most of my financial transactions on-line. But on-line banking is a high risk activity, particularly if you use the “default” OS and browser combination to be found on most PCs. I don’t, but that doesn’t make me invulnerable, just a slightly harder target. So attempts by the banks to make it harder for the bad guys to filch my money are welcome. Many banks seem to be taking the two factor authentication route by supplying their customers with a hardware token of some kind to be used in conjunction with the traditional UID/password.

I have just logged on to my bank to be greeted with a message that they will shortly be introducing a one-time password system. Apparently this system requires me to register my mobile phone number with the bank. Thereafter, for certain “high risk” transactions (such as setting up a new payment to an external account) in addition to requiring my normal UID/password, the bank will send a one-time password to my mobile which I will have to play back to the bank via the web site before the transaction will be authorised. Sounds reasonable? Maybe. Maybe not. I can see some flaws – not least the obvious one that I have to have a mobile phone (probably not an unreasonable assumption) and that I have to be prepared to register that with the bank (slightly less reasonable). But my biggest concern is that this approach fails to take account of the fact that “people do dumb things” (TM).
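I have no idea how my bank actually implements this, but the general shape of such a scheme can be sketched in python as follows. Everything here is an assumption made for illustration: the class name, the six digit code, the five minute lifetime and the idea of keying codes by a transaction id.

```python
import hmac
import secrets
import time


class OneTimePasswords:
    """Issue and check short-lived, single-use codes for transactions."""

    def __init__(self, lifetime_seconds=300):
        self.lifetime = lifetime_seconds
        self.pending = {}  # transaction id -> (code, expiry time)

    def issue(self, transaction_id, now=None):
        """Create a six digit code; the caller would send it to the phone."""
        now = time.time() if now is None else now
        code = "%06d" % secrets.randbelow(10 ** 6)
        self.pending[transaction_id] = (code, now + self.lifetime)
        return code

    def verify(self, transaction_id, submitted, now=None):
        """Accept the code at most once, and only before it expires."""
        now = time.time() if now is None else now
        # pop() removes the entry, so a second attempt always fails.
        entry = self.pending.pop(transaction_id, None)
        if entry is None:
            return False
        code, expiry = entry
        matches = hmac.compare_digest(code.encode(), submitted.encode())
        return now <= expiry and matches
```

Note what the sketch does not cover: nothing in it can tell whether the device which receives the code is also the device on which the transaction is being made.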

The bank’s FAQ about the new system says:

“We have decided to use your mobile phone for extra security so there is no need to carry around a card reader to use e-banking. This also provides extra security as it is unlikely a fraudster will be able to capture both your internet log on credentials as well as your mobile phone.”

I have a problem with that assumption. I know a lot of otherwise very smart people who use their “smart” phones as a central repository of a huge amount of difficult to remember personal information. These days it is very rare for anyone to actually even remember a friend’s ‘phone number. Why bother – just scroll down to “john at work” and press call. These same people store names, addresses, birthday reminders, and yes, passwords for the umpteen web services they use, on the same device. That ‘phone may even be used to log on to the website that requires the password. Indeed, it is entirely plausible that many people will use their ‘phone to log on to their bank when out and about simply to make exactly the kind of transaction my bank deems “high risk”, i.e. to transfer funds from one account to another so that they can make a cash withdrawal from an ATM without incurring charges.

How many mobiles are lost or stolen every day?

Permanent link to this article: https://baldric.net/2009/10/15/on-line-banking-security/

Oct 03 2009

debian on a DNS-313

I bought another new toy last week – a D-Link DNS 313 NAS.

Actually, this was a mistake because what I really wanted was the DNS-323. I just wasn’t careful enough at the time. Quite apart from having space for two 3.5″ SATA hard drives instead of just one, the 323 is a very different beast to its smaller (and much cheaper) sibling.

Martin Michlmayr has a nice guide to installing debian on a 323. Given that the 323 has a faster processor and more RAM than a slug and it can take two internal SATA disks rather than just the external USBs it looks like an attractive option for a new debian based server. Pity then that I bought the wrong one. My excuse is that I thought the only difference was in the disk capacity and I was prepared to settle for just 1TB of store. The (normally reliable) owner of the shop where I bought the beast was also adamant that the disk capacity was the only difference between the two devices. I should have known better than to succumb to what was essentially an impulse buy when I wasn’t really intending to buy a new NAS at the time (I was in the shop for something else and picked up the 313 because I recalled reading Martin’s pages recently).

Once I got the 313 home of course and checked the specs it looked as if I would be stuck with the D-Link supplied OS. However, a bit of searching turned up the DSM-G600, DNS-323 and TS-I300 Hack Forum which has a series of articles on installing debian on a 313 (despite the forum title). A forum contributor called “CharminBaer” has put together a nice tarball of debian lenny which allows the user to replace the D-Link OS without actually reflashing the device. This means that the original bootloader is retained but the device boots into the replacement system on disk. The nicest part of this installation method is that there is almost no risk of bricking the device because the installation simply entails copying the tarball onto the disk over a USB connection, extracting the files and then booting into a shiny new Lenny install. Result.

The tarball can be found here, and the installation instructions are here.

Many thanks to “CharminBaer”.

Permanent link to this article: https://baldric.net/2009/10/03/debian-on-a-dns-313/

Oct 03 2009

debonaras demise?

Sadly it seems that the debonaras wiki is no more. Rod Whitby had done some excellent work in pulling together a site which consolidated useful information about low cost network storage devices (alternatives to the slug) which could be made to run Debian. Unfortunately the site was a continual target for wiki spam bots and malcontents and it obviously became high maintenance. About a year ago I corresponded with Rod about the spam activity after he introduced password controlled access to some of the main target pages. I even assisted in moderation and maintenance of the site for a while when it became clear that Rod was getting fed up of the work load for no obvious benefit. It now looks as if he has decided to let the domain lapse after all.

Permanent link to this article: https://baldric.net/2009/10/03/292/

Sep 28 2009

abigail’s party

In today’s Guardian, Charlie Brooker ranted rather eloquently about how much he hated smug Apple fans. Or did he?

Actually he made full on broadside swipes at both Apple and Microsoft’s approach to product marketing. One side is too slick and irritating, the other is way too uncool and irritating. But most of his ranting was aimed straight at the Microsoft Windows 7 Launch Party ads on Youtube.

These ads are mind numbingly painful to watch. Take a look at this:

Now take a look at the Cabel remix and read the comments posted. Apparently there is a large contingent of people out there who seriously believe that Microsoft deliberately made the videos such that people would blog about them saying how bad they were.

No way. Couldn’t possibly happen.

Permanent link to this article: https://baldric.net/2009/09/28/abigails-party/

Sep 20 2009

wordpress security

At about the time I decided to move trivia to my own VPS, there was a lot of fuss about a new worm which was reportedly exploiting a vulnerability in all versions <= 2.8.3. Even the Grauniad carried some (rather inaccurate) breathless reporting about how the wordpress world was about to end and maybe we should all move to a rival product. Kevin Anderson said on the technology page of 9 September:

“.. the anxiety that this attack – one of a number in the past year against WordPress – has engendered may create enough concern for someone to spot the chance to create a rival product.”

Rubbish. Besides the fact that there are already several rivals to wordpress (blogger, typepad and livejournal in the hosted services domain alone, plus others such as textpattern if you wish to host your own), what Anderson apparently fails to realise is that all software contains bugs, and any software which is exposed to as hostile an environment as the internet is going to have problems. Live with it. Sure it would be good if we could find and fix all vulnerabilities before they are exploited, but as far as I am aware, that hasn’t happened for any other piece of code more complex than “printf("hello world\n");” (and even that could have problems). Why expect wordpress to be any different?

Amongst all the brouhaha I did find one site which offered some commentary and advice I could agree with. Take a look at David Coveney’s “common sense” post of 6 September.

Permanent link to this article: https://baldric.net/2009/09/20/wordpress-security/

Sep 12 2009

wordpress on lighttpd

I have commented in the past how I prefer lighttpd to apache, particularly on low powered machines such as the slug. I used to be a big apache fan, in fact I think I first used it at version 1.3.0 or maybe 1.3.1, having migrated from NCSA 1.5.1 (and before that Cern 3.0) back in the day when I ran web servers for a living. However, those days are long gone and my web server requirements are now limited to my home network and VPSs so I don’t need, nor do I want, the power of an industrial strength apache installation. In fact, my primary home web server platform (the slugs) struggles with a standard apache install. Lighttpd works very well on machines which are low on memory.

Having got used to lighttpd, it seemed a natural platform to use on my VPSs. And it performs very well on those machines for the kind of traffic I see. Moving trivia to my bytemark VPS meant that I had to take care of some minor configuration issues myself – most notably the form of permalinks I use. Most of the documentation about running your own wordpress blog assumes that you will be using apache (since that is the most popular web server software provided by shell account providers). For those of you who, like me, want to use lighttpd instead, the configuration details from my vhosts config file are below. Lighttpd is remarkably easy to configure for both virtual hosting in general, and for wordpress in particular. Note that I also choose to restrict access to wp-admin to my home IP address, which helps to keep the bad guys out.

Extract of “conf-enabled/10-simple-vhost.conf” file:

# redirect www. to domain (assumes that "mod_redirect" is set in server.modules in lighttpd.conf)

$HTTP["host"] =~ "www.baldric.net" {

url.redirect = ( ".*" => "..")

}

#

# config for the blog

#

$HTTP["host"] == "baldric.net" {

# turn off dir listing (you can do this globally of course, but I choose not to.)

server.dir-listing = "disable"

#

# do the rewrite for permalinks (it really is that simple)

#

server.error-handler-404 = "/index.php"

#

# reserve access to wp-admin directory and wp-login to our ip address

#

$HTTP["remoteip"] !~ "123.123.123.123" {

$HTTP["url"] =~ "^/wp-admin/" {

url.access-deny = ("")

}

$HTTP["url"] =~ "^/wp-login.php" {

url.access-deny = ("")

}

}

}

# end
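The access restriction in that extract can be modelled in a few lines of python, which is a handy way to check the logic before reloading the web server. This is only a model of my reading of the config, not anything lighttpd runs. It assumes that "=~" and "!~" are unanchored regular expression matches, and the function name is my own.

```python
import re

ADMIN_IP_PATTERN = r"123.123.123.123"  # placeholder address from the config
PROTECTED_URLS = (r"^/wp-admin/", r"^/wp-login.php")


def is_denied(remote_ip, url):
    """Return True if the config extract would refuse this request."""
    # The outer block only applies when the remote address does NOT match.
    if re.search(ADMIN_IP_PATTERN, remote_ip):
        return False
    # Inside it, any protected URL is denied.
    return any(re.search(pattern, url) for pattern in PROTECTED_URLS)
```

Since the dots in the address pattern are regular expression wildcards, a stricter form such as "^123\.123\.123\.123$" would tighten the match, assuming lighttpd accepts the usual anchors and escapes.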

Enjoy.

Permanent link to this article: https://baldric.net/2009/09/12/wordpress-on-lighttpd/

Sep 09 2009

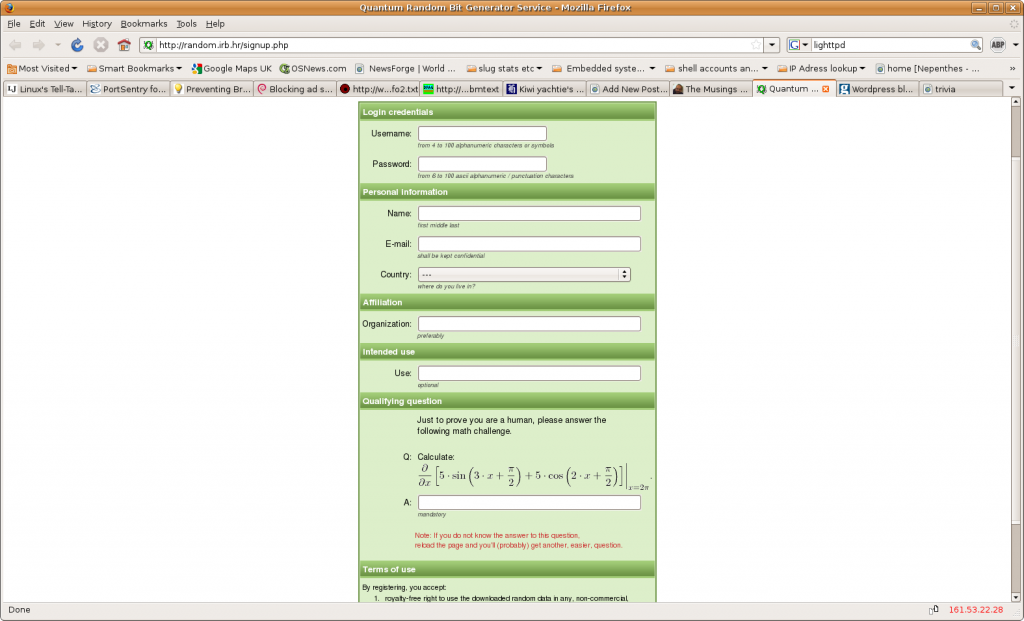

are you human enough

In the course of moving trivia to its new home, I necessarily reviewed and edited a bunch of links. This meant I revisited some old friends – including Chris Samuel’s blog where I discovered this gem.

As Chris says, you’ve got to love the captcha.

Permanent link to this article: https://baldric.net/2009/09/09/are-you-human-enough/

Sep 09 2009

we’ve moved

As I mentioned in the last post, I decided to move trivia from its old home on a shared hosting platform to my own VPS at bytemark. I also mentioned that this was proving trickier than it should – for no real good reason. However, the move is now complete and the blog is now completely under my control on my own VPS. So if anything goes wrong, I have only myself to blame.

So why did it take so long? Apart from the fact that I went on holiday immediately after the last post, the main reasons are twofold: firstly, the difference between the wordpress version on my old host (2.2.1) and the current release (2.8.4) was sufficiently great to make the upgrade process trickier than it need have been; secondly, and more importantly, my old provider’s DNS management process was less than helpful.

Before committing to the move, I naturally tested the installation and migration first on my new platform. This raised the problem of how I could install as “baldric.net” without clashing with the existing blog (I didn’t want to install under a different domain name for fairly obvious reasons). Changing my local DNS settings to point the domain name at my new IP address solved this problem (changing /etc/hosts would also have worked) but that meant that I could not have both old and new blogs on screen for comparison at the same time. Irritating, but not ultimately an insuperable problem. In moving to 2.8.4 I discovered that none of my (blogroll) links migrated properly and I had to recreate them all by hand. This took rather longer than I had anticipated, but it proved a useful exercise because I found some broken links in the process. They are currently still broken but at least I know that and I’ll fix them shortly. Because I use lighttpd and not the more usual apache I also had to address the problem of getting permalinks to work properly, but that didn’t prove too difficult – I’ll cover that in a separate post about wordpress on lighttpd.
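With hindsight, one way to look at old and new side by side would be to leave DNS alone and ask the new server for the site by name. A minimal python sketch of that idea follows. The function name is my own and the address in the usage note is a placeholder; the only trick is connecting to the IP address directly while sending the domain in the Host header.

```python
import http.client


def fetch_with_host(ip, host, path="/", port=80, timeout=10):
    """GET a path from a specific server address on behalf of a given host."""
    conn = http.client.HTTPConnection(ip, port, timeout=timeout)
    try:
        # Supplying our own Host header selects the virtual host on that
        # server, whatever the DNS currently says about the domain.
        conn.request("GET", path, headers={"Host": host})
        resp = conn.getresponse()
        return resp.status, resp.read()
    finally:
        conn.close()
```

So fetch_with_host("192.0.2.10", "baldric.net") would return the status and page as served by the machine at that placeholder address. It is no substitute for a browser, since links within the returned page still resolve through normal DNS.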

Having got the new installation up and running to my satisfaction, I now wanted to point my domain name at the new blog. This is where I ran into some oddities in the way 1and1 set up their blog hosting and domain management. Ordinarily it is pretty easy to switch the A record for a 1and1 hosted domain (I have several) from the default to a new address. Not so if you have a blog hosted on that domain – the domain becomes “unmodifiable”. Technical support were initially not particularly helpful since they didn’t seem to understand my problem (and there were worrying echoes of my experiences with BT “support”). But this simply reaffirmed my belief that I was better off controlling my own destiny in future.

Eventually I was told that the only way I could unlock the domain to allow me to point to a new A record was to a) move the blog to a new domain (tricky if you don’t have one, and a pretty dumb idea anyway) or b) delete the blog (an even dumber idea if, like me, you are cautious enough to want to test the transition before committing). In the end I decided to move the blog to a spare domain. I’ll delete it in the next week or so. Meanwhile, if you find an apparent duplicate of trivia on a completely different domain, you know why.

Permanent link to this article: https://baldric.net/2009/09/09/weve-moved/

Aug 26 2009

wordpress woes

As is common with many blogs, my public ramblings on this site are made possible through the ease of use and flexibility of the mysql/php based software known as wordpress. And again, as is common to much php/mysql based software, that package has vulnerabilities – sometimes serious, remotely exploitable vulnerabilities. When vulnerabilities are made public, and a patch to correct the problem becomes available, the correct response is to apply the patch, and quickly. In the world of mission critical software, or even in the world where your business or reputation depends upon correctly functional, dependable, and “secure” (for some arguable definition of secure) software it is absolutely essential that you patch to correct known faults. If you don’t, and as a result you get bitten, then your business or reputation, or both will suffer accordingly.

Yet again, as is common, I have to date used the services offered by a third party to host my blog rather than go to the trouble of managing my own installation. Many bloggers simply sign up to one of the services such as is offered by wordpress itself on the related wordpress.com site. Such sites tend to give you a blog presence of the form “yourblogname.wordpress.com” or “myblogname.blogger.com” etc. Other, usually paid for, service providers such as the one I use offer a blog with your own domain name. Whatever service you choose though, you are inevitably reliant on the service provider to ensure that the software used to host your site is patched and up to date. My own provider uses a template based approach to its blog service. This limits me (and others) to the functionality they choose to provide. In return, I expect them to ensure that the version of software they provide and support is as secure as is reasonably possible to expect for the sum I pay each month.

A couple of recent events have caused me to question this arrangement though and I am now in the process of moving this blog to one of my own servers. Firstly, wordpress itself has recently suffered from a particularly embarrassing remote exploit which allows an attacker to reset the admin password, and secondly, as I discussed at zf05 below, the servers belonging to some supposedly security conscious individuals were compromised largely because poor patch management practices (amongst other things) left them exposed.

Time to rethink my posture.

I currently have three separate VPSs with different providers and I figure it is time I took responsibility for my own configuration management rather than relying on my current provider (which, incidentally, hasn’t updated its wordpress version for some long time despite both this current and many earlier security updates being released). However, for a variety of “interesting” and ultimately annoying reasons, this is proving to be trickier than it should be.

I’ll post an update when I have made the transition. Meanwhile, I hope not to see any break in service – unlike the self-inflicted cock-up in transfer of one of my domains.

Watch this space.

Permanent link to this article: https://baldric.net/2009/08/26/wordpress-woes/

Aug 02 2009

zf05

I really missed the old phrack magazine. Some of the “loopback” entries in particular are superb examples of technical nous, complete irreverence and deadpan humour. One of my favourites (from phrack 55) appears in my blogroll under “network (in)security”. I am particularly fond of the observation that details of how to exploit old vulnerabilities are “[ As useless as 1950’s porn. ] “. As I said, sorely missed (but now, with issue 66, back in action over a year after the previous release).

It would seem, however, that I have been missing a new kid on the block who follows in phrack’s footsteps. A group called zf0 (zero for owned) appears to publish a ‘zine in the mold of the phrack of old. And their latest release, zf05.txt has been causing something of a stir because it relates details of the compromise of systems owned and/or managed by some high profile and well known personages such as Dan Kaminsky and Kevin Mitnick.

The ‘zine bears reading. The style is unmistakably “underground” and “down with the kids” and it is (unnecessarily in my view) filled with unix-geek listings of bash history files and such like, but its authors still manage to make the sort of pertinent comments that I so loved in phrack.

“It’s the simple stuff that works now, and will continue to work years into the future. Not only is it way easier to dev for simple mistakes, but they are easier to find and are more plentiful.”

How well patched are you?

Permanent link to this article: https://baldric.net/2009/08/02/zf05/

Aug 02 2009

dns failure – a cautionary tale

I recently moved one of my domains between two registrars. It seemed like a good idea at the time, but on reflection it was both foolish and unnecessary. Unnecessary because my main requirement for moving it (greater control of my DNS records for that domain) could have been met simply by my redelegating the NS records from my old registrar’s servers to the nameservers run by my new provider; foolish because it lost me control over, and usage of, that domain for eleven (yes eleven) days. This particular domain happens to host the mailserver (and MX record) for a bunch of my other domains. So the loss of that domain meant that I also lost email functionality on a bunch of other domains as well as the primary domain in question. Not good. Had I been running a business webserver on that domain, or been completely reliant on the mail from that smtp host I could have been in deep trouble. As it was, I was simply hugely inconvenienced (neither of my two main domains were affected because I kept the mail for those domains pointed at a different mailserver).

So what happened?

My new provider offers greater granularity of control over DNS records than my main registrar. Moving my DNS to them would give me complete control rather than being limited to creation of a restricted number of subdomains and new MX records. I like control. What I didn’t think through carefully enough was whether I (a) really needed that additional control and (b) really needed to actually change registrar to gain that control. As it turns out, the answer to both those questions is no – but hey, we all make mistakes.

Anyway, having convinced myself that I actually did need to move my domain to the new registrar, the following series of events lost me the domain for those eleven days.

Firstly I tried to use my new registrar’s control panel to initiate the transfer. This failed – for some technical reason which the registrar identified and fixed later. This alone should have forewarned me of impending difficulty, but no, I pressed ahead when the tech support team offered to initiate the transfer manually. I accepted.

Secondly, I created the necessary new DNS records on the new registrar’s DNS servers ready for the transfer. Naively, I believed that once the old registrar surrendered control, my new registrar’s servers would be shown as authoritative and I would have control. I also believed (again naively and incorrectly as it happens) that my old registrar would maintain its view of my domain until the delegation had switched.

Thirdly, I used my old registrar’s control panel to initiate cancellation of registration at their end and transfer to my new registrar. This is where things started to go seriously wrong. As soon as my old registrar had confirmed cancellation at their end, they effectively switched off the DNS for that domain. Presumably this is because they were no longer contractually responsible for its maintenance. But the whois records continued to show that their nameservers were authoritative for my domain for the next six days whilst the transfer was taking place. I confess to being completely bemused as to why it should take so long for this to happen, but I put that in the same category of mystery as to what happens to my money in the time I transfer sums electronically between two bank accounts – slow electrons I guess.

So now the old registrar is shown as authoritative but doesn’t answer. The new registrar has the correct records but can’t answer because it is not authoritative.

Eventually my new registrar is shown in the whois record as the correct sponsor, but the NS records of my old registrar are still shown as authoritative. Here it gets worse. The control panel for my new registrar is still broken and I have no way of changing the NS records to point to the correct servers. So I email support. And email support. And email support. Eventually I get a (deeply apologetic) response from support which says that they were so busy fixing the problem highlighted by the failure uncovered in their automatic process that they “forgot” to keep me (the customer) informed.

Now, whilst neither company concerned covered themselves in glory during this process, on reflection I am reluctant to beat them up too much because I have come to the conclusion that, technical failure aside, much of the trouble could have been avoided if I had thought carefully about what it was I was trying to achieve, and had read and carefully considered the documentation on both companies’ sites before starting the transfer. Documentation about registrant transfer is fairly clear in its warning that the process can take about five or six days. It is also not unreasonable that a company losing contracted responsibility for DNS maintenance should cease to answer queries about that domain (after all, they could be wrong…). OK – the new registrar failed big time in its customer care, but they did apologise profusely and (so far) they haven’t actually charged me anything for the transfer.

What I should have done before starting the transfer was to redelegate authority for the domain from the old registrar’s nameservers to my new registrar’s servers. That way I would not have had the long break in service. In fact, if I had thought about it carefully, I could have simply left it at that and not started the transfer of registrar at all. After all, once authority was redelegated, I would have complete control on my new servers.
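A check along the following lines, run before touching the registration, would show whether a redelegation has actually taken effect. This is only a sketch: the function names are made up for illustration, example.com and the nameserver names are placeholders, and it assumes dig is installed.

```shell
#!/bin/sh
# Sketch: compare the nameservers you expect to be authoritative with
# the set actually returned for the domain. All names are placeholders.

normalise_ns() {
    # one name per line, lower case, no trailing dot, sorted
    printf '%s\n' "$1" | tr ' ' '\n' | tr 'A-Z' 'a-z' \
        | sed -e 's/\.$//' -e '/^$/d' | sort
}

delegation_matches() {
    # $1 = expected nameservers, $2 = observed nameservers
    [ "$(normalise_ns "$1")" = "$(normalise_ns "$2")" ]
}

# Example (needs network access and dig):
#   delegation_matches "ns1.newprovider.example ns2.newprovider.example" \
#       "$(dig +short NS example.com)" && echo "delegation has moved"
```

Bear in mind that your resolver may hand back cached NS records for a while, so a mismatch just after a change is not necessarily a failure.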

Lesson? Once again, read the documentation. And think. I really ought to know better at my age.

Permanent link to this article: https://baldric.net/2009/08/02/dns-failure-a-cautionary-tale/

Jul 12 2009

zebu update

Well google was clearly the fastest at indexing my blog. It only took a day for my nonsense phrase to appear as number one on their index. Since then, the phrase has appeared in ask, bing, clusty and yahoo, but still not in cuil, despite being visited by their robot on 29 June.

Obvious (and very obvious) conclusion – use google if you want to find absolute rubbish very, very quickly.

Permanent link to this article: https://baldric.net/2009/07/12/zebu-update/

Jul 05 2009

aspire one bios updates

This post is partly for my own benefit. It records some of the most useful references to bios updates for the AAO. My own AAO is actually running a fairly early bios (3114) and deliberately so. I upgraded to 3309 in (yet another futile) attempt to get sony memory sticks to work but found that screen brightness suffered for no perceptible gain in any other functionality. So I reverted. That aside, the references here may be useful to others.

Firstly, there is considerable discussion of the relative merits of various bios versions on the Aspire One User Forum. That site also has a lot of other useful (and sometimes not so useful) discussion points. Broadly, though, I have found that forum a good starting point for searching for AAO related information.

Next up is the very useful blog site run by Macles. Macles gave a very good set of instructions on flashing the bios in this post, which has been added to, and improved with the addition of lots of step by step pictures, by “flung” at netbooktech in this posting. Note however that the link to the acerpanam site supposedly hosting the bios images is broken. The images, along with many other driver downloads can currently be found by following links on the gd.panam.acer.com pages. Alternatively, I have found the images on the Acer Europe ftp site a good source (though it has yet to show the 3310 release).

No – my sony memory sticks still don’t work.

Permanent link to this article: https://baldric.net/2009/07/05/aspire-one-bios-updates/

Jul 05 2009

tor on a vps

I value my privacy – and I dislike the increasing tendency of every commercial website under the sun to attempt to track and/or profile me. Yes, I know all the arguments in favour of advertising, and well targeted advertising at that, but I get tired of the Amazon style approach which assumes that just because I once bought a book about subject X, I would also like another book about almost the same subject. I don’t much like commercial organisations profiling me (and, incidentally, I find it highly ironic that we in the UK seem to make a much bigger fuss about potential “big brother” Government than we do about commercial data aggregation, but hey).

Sure, I routinely bin cookies, block adware and irritating pop up scripts, and use all the, now almost essential, firefox privacy plugins, but even there we still have a problem. I don’t know who wrote those plugins, I just have to trust them. That worries me. Some of the best known search engines are even more scary if you think carefully about the aggregate information they have about you.

Sometimes I care about the footprint I leave, sometimes I don’t, but the point is that I should be in control of that footprint. Increasingly that is becoming difficult. Besides being tracked by sites I visit, last year’s controversy about BT’s use of phorm is also worrying. If my ISP can track everything I do, then I face another level of difficulty in protecting my fast vanishing privacy.

Besides using a locked down browser, DNS filtering which blocks adware, cutting cookies and all the other tedious precautions I now feel are necessary to make me feel comfortable, I often use anonymous proxies when I don’t want the end site to know where I came from. But even that now looks problematic. If you use a single anonymising proxy, all you are doing is shifting the knowledge about your browsing from the end site to an intermediary. That intermediary may (indeed should) have a very strict security policy. Ideally, it should log absolutely nothing about transit traffic. But if that intermediary does log traffic data and then sells that data to a third party, you may be in an even worse position than if you had not attempted to become anonymous. Back in January of this year, Hal Roberts of Harvard University posted a blog item about GIFC selling user data. If sites such as Dynaweb are prepared to sell user data, then the future for true anonymity looks problematic. As Doc Searle said in this blog posting,

We live in a time when personalized advertising is legitimized on the supply side. (It has no demand side, other than the media who get paid to place it.) Worse, there’s a kind of gold rush going on. Even in a crapped economy, a torrent of money is flowing into online advertising of all kinds, including the “personalized” sort. No surprise that companies in the business of fighting great evils rationalize the committing of lesser ones. I’m sure they do it it the usual way: It’s just advertsing! And it’s personalized, so it’s good for you!

No, as Searle well knows, it is not good for you.

What to do? Enter tor and privoxy.

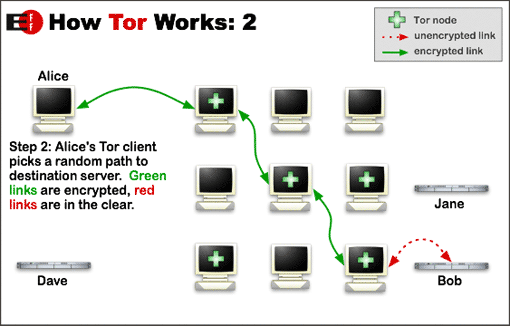

I first used tor some years ago in its earlier incarnation as “the onion router” (hence its name) and until recently had used it only sporadically since. The main drawback of the early tor network was its speed, or lack of it. Tor gets its strength (anonymity) from the way it routes traffic.

Tor traffic passes through a series of nodes before exiting at a node which cannot be linked back to the original source. So tor performance depends on a large number of both fast intermediate relays and a large number of exit nodes. Since not all tor users are prepared to run relays, let alone an exit node (it can be bandwidth expensive and in the case of an exit node can lead to your system being mistaken for a hostile, or compromised, site) tor can be slow, at times painfully slow. But recently tor has been getting faster as more relay and exit nodes are added. It is now at a state which is probably usable most of the time, so long as you are prepared to wait a little longer than is customary for some web pages to load (and you don’t use youtube…..).

When using tor recently I have tended to follow the well trodden path of local installation alongside privoxy. Because I believe in giving something back to the community if I am gaining benefit, I also set my local configuration to run as a relay. But that caused some difficulty. If we assume that my tor usage was fairly representative of the majority of tor users out there, then the fact that my relay was only operational when my client system was up and running meant that the relay would be seen by the tor network as unstable and probably slow. Indeed, the fact that I had to throttle tor usage to the minimum to stop the network from impacting unduly on my ADSL bandwidth meant that I was not entirely happy with the setup. So I stopped relaying. But that leaves me feeling that I am taking advantage of a free good when I could be contributing to that good.

Some while back I bought myself a VPS from Bytemark (an excellent, technically savvy, UK based hosting company) to run a couple of webs and an MTA. I use it now largely as a mail server (running postfix and dovecot) and the traffic is relatively low volume. That VPS is pretty small (though actually way better specced than some real servers I have run in the past) but I reasoned that I could easily run a tor relay on that machine and then connect to it remotely from my client system. I did, and it worked fine. But I soon found that the tor network seems to have a voracious appetite for bandwidth. Even with a fairly strict exit policy (no torrents allowed!) and some tight bandwidth shaping, I still found that I was using about 2 Gig of traffic per day (vnstat is useful here). Any more than that would start to encroach on my bandwidth allowance for my VPS and possibly impact on the main business use of that server. Monthly rates for VPSs are now less than I pay for my mobile phone contract (and arguably more useful than a phone contract too) so I decided to specialise and buy another VPS just for tor. I now run an exit node on a VPS with 384 MB of RAM and 150 Gig monthly traffic allowance. That server is currently throttled to about 2 Gig of traffic per day, but I will double that very shortly.
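The arithmetic behind that doubling is easy enough to check. A small sketch, assuming a 31 day month and whole gigabytes; the function names are illustrative only.

```shell
#!/bin/sh
# Sketch: does a daily traffic cap fit inside a monthly allowance?
# Assumes whole gigabytes and, by default, a 31 day month.

monthly_gb() {
    # $1 = gigabytes per day, $2 = days in the month (default 31)
    per_day=$1
    days=${2:-31}
    echo $(( per_day * days ))
}

fits_allowance() {
    # $1 = gigabytes per day, $2 = monthly allowance in gigabytes
    per_day=$1
    allowance=$2
    [ "$(monthly_gb "$per_day")" -le "$allowance" ]
}

# Example:
#   fits_allowance 4 150 && echo "4 Gig a day fits a 150 Gig allowance"
```

At 2 Gig a day a 31 day month comes to 62 Gig, and at 4 Gig a day to 124 Gig, so both sit inside the 150 Gig allowance.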

Now one of the nicest things about running a tor relay is the fact that your own tor usage is masked and you may get better anonymity. I therefore run privoxy on my tor relay and proxy through from my client to that proxy which in turn chains to tor internally on my relay. However, if you simply configure your local client to proxy through to your relay in clear you are allowing your ISP (and anyone else who cares to look) to see your tor requests – not smart. So I tunnel my requests to the tor relay through ssh. My local client has an ssh listener which tunnels tor requests through to the relay and connects to privoxy on port 8118 bound to localhost on the relay. I also have a separate browser on my desktop which has as its proxy the ssh listener on my client system. For a good description of how to do this see tyranix’s howto on the tor wiki site. Now whenever I want to use tor myself I simply switch browser (and that browser is particularly dumb and stripped, and has no plugins or toolbars which could leak information). Of course, should I get really paranoid, I could always run the local browser in a VM on my desktop and reload the VM after each session.
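For anyone who wants the gist without reading the howto, the tunnel can be sketched roughly as below. The relay hostname and login are placeholders, and the local port (8118 again) is simply an assumption; the only detail taken from my setup is that privoxy listens on port 8118 bound to localhost on the relay.

```shell
#!/bin/sh
# Sketch: build the ssh command for a local listener that forwards to
# privoxy on the relay. Host, user and local port are placeholders.

RELAY_HOST=${RELAY_HOST:-relay.example.net}
RELAY_USER=${RELAY_USER:-user}
LOCAL_PORT=${LOCAL_PORT:-8118}

tunnel_command() {
    # -N: run no remote command, just forward ports
    # -L: listen on the client's loopback and forward to the relay's
    #     own loopback, where privoxy is bound on port 8118
    printf 'ssh -N -L 127.0.0.1:%s:127.0.0.1:8118 %s@%s\n' \
        "$LOCAL_PORT" "$RELAY_USER" "$RELAY_HOST"
}

# To open the tunnel for real:
#   eval "$(tunnel_command)"
# and then point the dedicated browser's HTTP proxy at 127.0.0.1:$LOCAL_PORT
```

Binding the listener to 127.0.0.1 rather than all interfaces keeps other machines on the local network from using the tunnel.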

But I’m not that paranoid.

Permanent link to this article: https://baldric.net/2009/07/05/tor-on-a-vps/

Jun 28 2009

wild xenomanic yiddish zebu

This post is an experiment. I have noticed from my logs that several different search engines index this blog. Ironically, the indexing has sometimes given me a little trouble when I have been searching for topics of interest to me (such as fixing the sony memory stick problem on my AAO) and my search returns my own blog as one of the answers.

So I thought I’d try to find out how quickly I could get a uniquely high rank in a range of search engines. Hence the meaningless phrase above. I shall try searching for that exact string across a range of engines over the next few weeks. At the time of posting I cannot find any page containing this phrase.

Permanent link to this article: https://baldric.net/2009/06/28/wild-xenomanic-yiddish-zebu/

May 12 2009

jaunty netbook remix DVD iso

My daughter saw my netbook the other day and decided that she wanted UNR on her Tosh laptop to replace the 8.04 hardy I had installed for her (no-one in my family is allowed a proprietary OS – this occasionally causes some friction).

Anyway, the old Tosh she uses (which has seen various distros during its life) initially presented me with something of a challenge when she asked for UNR – it cannot boot from a USB stick. I couldn’t find an iso image of jaunty-unr so I decided to see if I could build one myself. It turned out to be quite easy. The USB stick image contains all you need to make an iso using mkisofs.

Here’s how:

– mount the USB image;

– copy the entire image structure to a new directory (call it /home/user/temp or whatever, just be sure to copy the entire structure including the hidden .disk directory);

– cd to the new directory and rename the file “syslinux/syslinux.cfg” to “syslinux/isolinux.cfg”;

– rename the directory “syslinux” to “isolinux” (so that the config file ends up as “isolinux/isolinux.cfg”);

Now build an iso image with mkisofs thusly:

mkisofs -J -joliet-long -r -b isolinux/isolinux.bin -c isolinux/boot.cat -no-emul-boot -boot-load-size 4 -boot-info-table -o /home/user/outputdirectory/jaunty-unr.iso .

(where /home/user/outputdirectory is your chosen directory for building the new iso called jaunty-unr.iso)

Now simply burn the iso to DVD.
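The copy and rename steps above can be sketched as a small shell function. This is an illustration rather than a tested recipe: the function name, the mount point and the image filename in the comments are all hypothetical, and it assumes a cp that understands -a (as GNU cp does).

```shell
#!/bin/sh
# Sketch: copy a mounted USB image tree and rename syslinux to isolinux
# ready for mkisofs. Paths in the usage comments are placeholders.

prepare_iso_tree() {
    src=$1     # where the USB image is mounted
    dest=$2    # new working directory for the iso tree
    mkdir -p "$dest" || return 1
    # "$src/." copies everything, including hidden entries such as .disk
    cp -a "$src/." "$dest/" || return 1
    # rename the config file first, then the directory that holds it
    mv "$dest/syslinux/syslinux.cfg" "$dest/syslinux/isolinux.cfg" || return 1
    mv "$dest/syslinux" "$dest/isolinux"
}

# Example (as root; the image name is hypothetical):
#   mount -o loop jaunty-unr.img /mnt/usbimage
#   prepare_iso_tree /mnt/usbimage /home/user/temp
#   cd /home/user/temp   # then run the mkisofs command given above
```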

Permanent link to this article: https://baldric.net/2009/05/12/jaunty-netbook-remix-dvd-iso/

Apr 12 2009

acer aspire one – a netbook experience

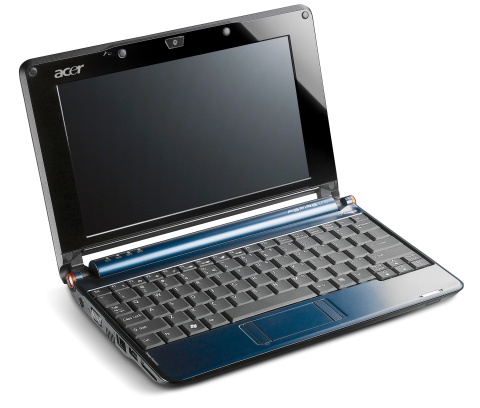

I mentioned in an earlier post that I had recently acquired an Acer Aspire One (AAO) netbook. I chose the AAO in preference to any other of the netbooks on the market for two reasons – firstly it looks a lot cooler than most of the competition (particularly in blue – see below), but secondly, and most importantly, the price was excellent. Apparently many people are buying the AAO with Linpus installed only to find that the machine is not compatible with Microsoft software – either that, or they are just not comfortable enough with an unfamiliar user interface to persevere. This is a shame. Linpus seems to have gained a reputation as “Fisher Price Computing” or “My First Computer”. In my view this is unfair. It does exactly what it says on the tin. The default Linpus install boots quickly, connects with both wired and wireless networks with ease and provides all that most users could want from a netbook – i.e. web browsing, email, chat and word processing.

Whatever the reasons for the returns, this means that there are a fair number of perfectly good AAOs coming back to Acer. Acer in turn is punting those machines back on to the market through various resellers as “refurbished”. Whilst I may be disappointed at the lack of engagement of the buying public with a perfectly usable linux based netbook, from a purely selfish viewpoint this means that I got hold of an excellent machine at well below the original market price. My machine is the AOA 150-Ab ZG5 model. This has the 1.6 GHz N270 atom processor, 1 GB DDR2 RAM and a 120 GB fixed disk. Not so very long ago a machine with that sort of specification (processor notwithstanding) would have been priced at close to £500. I got mine for under £190 including delivery. An astounding bargain.

To be frank, I didn’t really need a netbook, but I’m a gadget freak and I couldn’t resist the added functionality offered by the AAO over my Nokia N800 internet tablet. The addition of a useable keyboard, 120 Gig of storage, and a decent screen in a package weighing just over a kilo means that I can carry the AAO in circumstances where I wouldn’t bother with a conventional laptop. And whilst the N800 is really useful for casual browsing, the screen is too small for comfort, and ironically, I still prefer my PSP for watching movies on the move. So the N800 hasn’t had the usage I expected.

There are plenty of reviews of the AAO out there already, so I won’t add much here. This post is mainly about my experience in changing the default linux install for one I find more useful. As I said above, Linpus is perfectly usable, but the configuration built for the AAO is aimed at the casual user with no previous linux experience. Most linux users dump Linpus and install their preferred distro. Indeed, there is a very active community out there discussing (in typical reserved fashion) the pros and cons of a wide variety of distros. It is relatively simple to “unlock” the default Linpus installation to gain access to the full functionality offered by that distribution, but Linpus is based on a (fairly old) version of Fedora and I much prefer the debian way of doing things. So for me, it was simply a choice between debian itself, or one of the ubuntu based distros.

I run debian on my servers (and slugs) but ubuntu on my desktops and laptops so ubuntu seemed to be the obvious way to go. Some quick research led me to the debian AAO wiki which gives some excellent advice which is applicable to all debian (and hence ubuntu) based installations. Whilst this wiki is typically thorough in the debian way, it does make installation look difficult and the sections on memory stick usage, audio and the touchpad are not encouraging for the faint hearted. I was particularly disappointed at the advice to blacklist the memory stick modules because I actually want to use sony memory sticks (remember my PSP….).

The best resource I found, and the one that I eventually relied upon for a couple of different installations was the ubuntu community page. This page is being actively updated and now offers probably the best set of advice for anyone wishing to install an ubuntu derivative on any of the AAO models.

So, having played with Linpus for all of about two days, I dumped it in favour of xubuntu 8.10. I chose that distro because it uses the xfce window manager which is satisfactorily lightweight and fast on small machines (and because the default theme is blue and looks really cool on a blue AAO – see my screenshot below).

By following the advice on the ubuntu community help page for 8.10 installs, I managed to get a reasonably fast, functional and attractive desktop which made the most of the (admittedly cramped) AAO screen layout. I had some trouble with the default ath_pci wireless module (as is documented) so I opted for the madwifi drivers which worked perfectly. The only functions I failed to get working successfully remained the sony memory sticks in the RHS card reader (SD cards worked fine) and the internal microphone.

Further searching led me to the kuki linux site which gives as its objective “a fully working, out of the box replacement for Linpus on the AAO”. I like the objective, but not the distro. However, that distro uses a kernel compiled by “sickboy” which promised to offer full functionality. I tried that kernel with my xubuntu installation (with madwifi wireless drivers) and indeed everything worked – except the sony memory sticks. So I decided to see what else I could do.

By now, I had been using the xubuntu installation for about three or four weeks. However, a fresh visit to the ubuntu community site led me to consider testing jaunty in a “netbook remix” form. I had earlier dismissed this option at intrepid (8.10) because it looked too flakey and seemed like an afterthought rather than a well considered desktop build. I was pleasantly surprised at the look of the “live” installation of the beta of jaunty-nr so decided to give that a go for real. Jaunty comes with kernel 2.6.28.11 which is pretty much up to date and I guessed that playing with that might give me the complete distro I wanted. I was also quite taken with the desktop itself which makes the most of the limited AAO screen real estate by dispensing with traditional gnome panels and virtual desktops and offering a “tabbed” application layout akin to that used in maemo on the Nokia N800. So, following the instructions on the UNR wiki, I downloaded the latest daily snapshot from the cd-image site, and made a new USB install stick. (Note that it is worth using decent branded USB sticks here, I had no problem with a 4 Gig Kingston stick, but an unbranded freebie I tried was useless both here and in my earlier installations.) My newly installed desktop looked as below.

(Not blue, so not quite so cool, but hey.)

Note that the top panel only shows some limited information (battery and wireless connection status, time/date etc) whilst the left side of the screen shows menu options which would traditionally be given as drop down options from a toolbar and the right hand side of the screen is taken up by what would normally be called “places” (directories etc) on a standard ubuntu desktop. The centre of the screen gives the icons for the applications in the currently highlighted left side menu option. The overall effect is quite attractive and very easy to read. Selecting any application opens that application in full screen mode. Opening several applications leaves you with the latest application at the front and the others available as icons on the top panel. See my desktop below with firefox open as an example.

The completed installation with the default kernel does not allow for pci hotplugging and the RHS card reader doesn’t work. This is a retrograde step, but conversely, wireless worked properly with the atheros ath5k module (not the ath_pci module) in my snapshot. Earlier snapshots included a kernel in which the acer_wmi module had to be blacklisted because it conflicted with the ath5k wireless module. The fix for the pci hotplug problem involved passing “pciehp.pciehp_force=1” as an option to the kernel at boot time. Whilst this fixed the RHS card reader failure, I still couldn’t get the damned reader to recognise my memory sticks.

So having found a distro I really like and can probably live with long term on my AAO, I need to address the remaining problems. Given the range of problems I was facing whichever distro I chose, I decided to bite the bullet and compile my own kernel. It was clear to me that the main problems with wireless were conflicting modules so it seemed obviously better to build a kernel with only the modules required rather than include modules which only had to be blacklisted. Similarly, the requirement to pass boot time parameters to the kernel meant that pci hotplug support and related code wasn’t modularised properly. It is pretty difficult to load and unload kernel modules which don’t exist.

It is some time since I last built a linux kernel (I think it was round about 1999 or 2000 on a redhat box) so I had to spend a fun few hours getting reacquainted with the necessary tools. Unfortunately there is a lot of old and conflicting advice around on the net about how best to do this nowadays – certainly the old “make”, “make modules_install”, “make install” routine doesn’t work these days. And mkinitrd seems long gone….. Unusually, the debian wiki site wasn’t as helpful as I expected but the ubuntu community came good again and the kernel compile advice is pretty good and reasonably up to date. Even here though, there are a couple of mistakes which gave me cause to stop and think. So, as an aide-memoire (largely for myself) I documented the steps I followed to get a kernel build for .deb installation images.

For my kernel build I took the latest available stable kernel from kernel.org (2.6.29.1) and used as a starting point the kernel config from jaunty-nr (i.e. the config for 2.6.28-11 as shipped by canonical). The standard ubuntu kernel is highly generic (and as I have found, hugely and unnecessarily bloated with unused options, debug code and unnecessary modules. I may now rebuild the kernels on my standard desktops too.) To some extent this bloat is inevitable in a kernel which is aimed at a wide range of target architectures. Canonical have also done an excellent job of ensuring that practically any kernel module you could ask for is available should you plug in that weird USB device or install a PCI card giving some obscure capability that only 5% of users will ever need. But I want a kernel which works for a particular piece of hardware, and works well on that hardware. In particular I want my sony memory sticks to be available!

In configuring my kernel I took out all the obviously unnecessary code – stuff like support for specific hardware not in the AAO (AMD or VIA chips, parallel ports, Dell and Toshiba laptops etc. etc.), old and unnecessary networking code (apple, decnet, IPX etc. etc.) or network devices (token ring (!), ISDN etc. etc.). Sheesh, there is some cruft in there. More importantly I made sure that I built pci hotplug as a module (pciehp) and that the memory stick modules (mspro_block, jmb38x_ms and memstick) were built correctly. I also checked my config against sickboy’s (to whom I am indebted for some pointers when I was unsure of what to leave out). Sickboy has been quite brutal in excluding support for some devices I feel might be useful (largely in attached USB systems) so we differ. I feel that my kernel is still too large and could do with some pruning. But I’ve been conservative where I’m not sure of the impact of stripping out code.
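For orientation, a build of this kind can be sketched as follows. Treat it as an assumption-laden outline and not a record of the exact steps: it assumes the debian kernel-package route (make-kpkg with fakeroot), that the running kernel’s config lives in /boot, and that the function names and paths are purely illustrative. The helper at the end simply reproduces the package naming pattern that make-kpkg uses by default.

```shell
#!/bin/sh
# Sketch: build .deb kernel packages from a kernel.org source tree.
# Assumes kernel-package, fakeroot and the ncurses headers are installed.

build_kernel_debs() {
    src=$1       # unpacked kernel source, e.g. ~/src/linux-2.6.29.1
    append=$2    # local version suffix, e.g. -baldric-0.7
    (
        cd "$src" || exit 1
        # start from the config of the kernel currently running
        cp "/boot/config-$(uname -r)" .config || exit 1
        make oldconfig || exit 1     # answer prompts for new options
        make menuconfig || exit 1    # prune the modules you do not need
        fakeroot make-kpkg --initrd --append-to-version="$append" \
            kernel_image kernel_headers
    )
}

expected_image_deb() {
    # $1 = kernel version, $2 = appended suffix, $3 = architecture
    printf 'linux-image-%s%s_%s%s-10.00.Custom_%s.deb\n' \
        "$1" "$2" "$1" "$2" "$3"
}

# Example:
#   build_kernel_debs "$HOME/src/linux-2.6.29.1" -baldric-0.7
#   expected_image_deb 2.6.29.1 -baldric-0.7 i386
```

The resulting packages land in the directory above the source tree.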

My kernel now works and supports all the hardware in the AAO (well, my AAO as specified above). The only modifications required to get pci hotplug working are:

– add “pciehp” (without the quotes) to the end of /etc/modules to get the module to load.

– create a new file in /etc/modprobe.d (I called mine acer-aspire.conf) to include the line “options pciehp pciehp_force=1” (again without the quotes).
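Those two changes can be scripted if you prefer. A minimal sketch, run as root; the function name and the optional prefix argument (handy for a dry run in a scratch directory) are my own additions for illustration.

```shell
#!/bin/sh
# Sketch: load pciehp at boot and force it on.
# With no argument it edits the real /etc; pass a directory to test safely.

enable_pciehp() {
    root=${1:-}
    mkdir -p "$root/etc/modprobe.d" || return 1
    touch "$root/etc/modules" || return 1
    # append the module name only if it is not already listed
    grep -qx 'pciehp' "$root/etc/modules" \
        || echo 'pciehp' >> "$root/etc/modules"
    echo 'options pciehp pciehp_force=1' \
        > "$root/etc/modprobe.d/acer-aspire.conf"
}

# Example:
#   enable_pciehp            # real system, needs root
#   enable_pciehp /tmp/try   # dry run under /tmp/try/etc
```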

This means that both card readers work and are hot pluggable as are all the USB ports. Wireless works fine, and it restarts after hibernate/suspend. Audio and the webcam also both work fine (though the internal microphone is still crackly, I get better results from the external mike). In general I’m fairly happy with the kernel build. I have posted copies of the .deb kernel image and headers here along with my config if anyone wants to try it out. I’d be grateful for any and all feedback on how it works with various other AAO configs. You can email me about this at “acer[at]baldric.net”. I think the kernel should work on any AAO running debian lenny or an ubuntu distro of 8.04 or later vintage (but obviously I haven’t tested that fully). Potential users should note that both my kernel and sickboy’s omit support for IPv6 amongst other things. If you find you have a particular problem (say a missing kernel module when you plug in a USB gadget), please check the config file first. It is almost certain that I will have omitted module support for your okey-cokey 2000 USB widget.

To install the downloaded kernel package, either open it with the gdebi package installer or, from the command line, type:

dpkg -i /path/to/download/linux-image-2.6.29.1-baldric-0.7_2.6.29.1-baldric-0.7-10.00.Custom_i386.deb

(where /path/to/download/ obviously is the path to the directory containing the kernel package).

The package installer will modify your /boot/grub/menu.lst appropriately to allow you to select the new kernel. By default, your machine will boot into the new kernel so you may wish to modify the grub menu to allow you to choose which kernel to boot. I suggest you ensure that “##hiddenmenu” is actually commented out and that you set timeout to a minimum of 3 seconds to give you a chance to choose the appropriate kernel.
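If you would rather not edit the menu by hand, something like the following sketch does the job. It assumes a grub legacy menu.lst in which “hiddenmenu” and “timeout” appear as plain directives at the start of a line; the function name is illustrative. Note that it sets the timeout to exactly 3 seconds, so adjust it if you already use a longer one.

```shell
#!/bin/sh
# Sketch: make the grub menu visible and give yourself 3 seconds to choose.
# Keeps a backup copy alongside the original file.

show_grub_menu() {
    menu=${1:-/boot/grub/menu.lst}
    cp "$menu" "$menu.bak" || return 1
    # comment out an active hiddenmenu line and rewrite the timeout line;
    # lines that are already comments ("## timeout sec") are left alone
    sed -e 's/^hiddenmenu/#hiddenmenu/' \
        -e 's/^timeout[[:space:]].*/timeout 3/' \
        "$menu.bak" > "$menu"
}

# Example (as root):
#   show_grub_menu
```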

I have made every effort to ensure that the kernel works as described, but as always, caveat emptor. If it breaks, you get to keep the pieces. So you really need to ensure that you can boot back into a known good kernel before you boot into mine.

Enjoy.

I would be particularly grateful to hear from anyone who has managed to get sony memory sticks to work. Despite everything I have tried (all the modules load correctly) the bloody things still don’t work on my machine.

Permanent link to this article: https://baldric.net/2009/04/12/acer-aspire-one-a-netbook-experience/