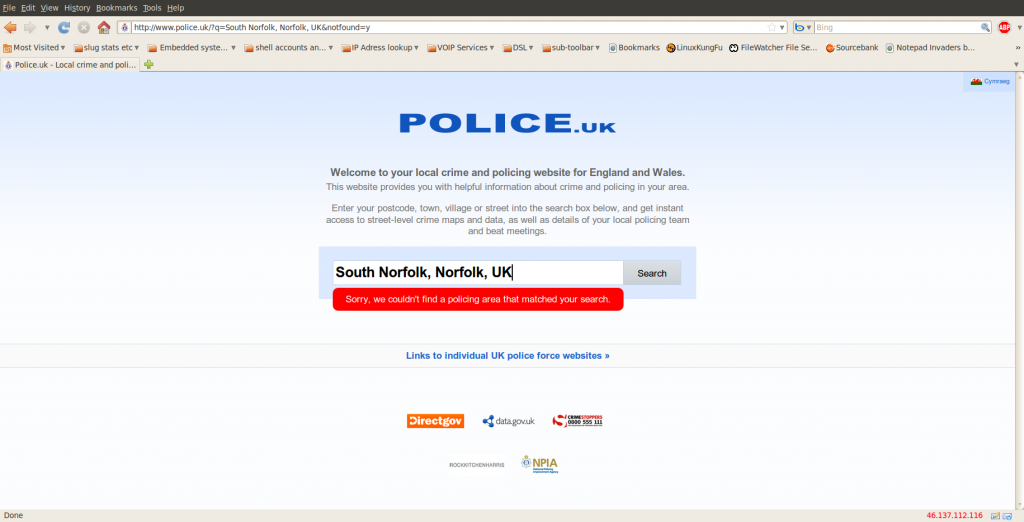

The UK Home Office launched a new crime statistics website today at www.police.uk. The site is supposed to show “Local crime and policing information for England and Wales”.

I’m not entirely convinced of the merit of the site in the first place (and can see all sorts of potential objections arising in some of the more rabid tabloid newspapers), but I thought I would try it out before making any form of judgement of my own. Unfortunately I’m not impressed.

The opening page of the new service invites the user to “Enter your postcode, town, village or street into the search box below, and get instant access to street-level crime maps and data, as well as details of your local policing team and beat meetings.”

I have tried various combinations of the suggestions, scaling outwards and upwards from my precise postcode to the whole of that part of the County in which I live. I was not reassured to get the following message:

Discussion elsewhere on the ‘net suggests that this result is not unusual. It appears to be a badly worded (or badly coded) response to an error condition resulting from system overload following the launch. At least I sincerely hope that is the case and we are not really completely devoid of policing services in the whole of South Norfolk.

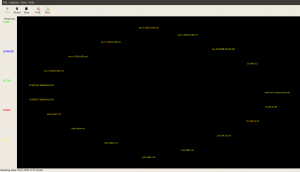

Examination of the HTML source for the generated webpage suggests that the service is running on Amazon Web Services. Certainly some of the pages are retrieved from S3 servers, and the site’s hostname is a CNAME for an Amazon Elastic Load Balancer in the eu-west-1 region, whose IP address lies in Amazon’s EC2 EU address range (see the dig and whois results below *). If the site is, as it appears to be, cloud-based, then either the supplier (Rock Kitchen Harris, Leicester) or the Home Office has seriously undersized the requirement. Regardless of who is at fault here, there is an evident need to pull in some more resources pretty quickly. This should be a good test of the much-vaunted flexibility of cloud-based services such as Amazon’s EC2. I expect the service to be running quickly and cleanly by this time tomorrow.

* dig www.police.uk returns:

```
; <<>> DiG 9.6-ESV-R3 <<>> www.police.uk
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 10557
;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:
;www.police.uk.                 IN      A

;; ANSWER SECTION:
www.police.uk.          1251    IN      CNAME   policeuk-167782603.eu-west-1.elb.amazonaws.com.
policeuk-167782603.eu-west-1.elb.amazonaws.com. 60 IN A 46.137.113.146

;; Query time: 268 msec
;; SERVER: 80.68.80.24#53(80.68.80.24)
;; WHEN: Tue Feb  1 14:16:23 2011
;; MSG SIZE  rcvd: 107
```

and a whois lookup of 46.137.113.146 returns:

```
% Information related to '46.137.0.0 - 46.137.127.255'

inetnum:        46.137.0.0 - 46.137.127.255
netname:        AMAZON-EU-AWS
descr:          Amazon Web Services, Elastic Compute Cloud, EC2, EU
```
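For anyone wanting to double-check the range arithmetic, here is a minimal sketch using Python’s standard `ipaddress` module. It performs no network lookups of its own: the addresses are simply copied from the dig and whois output above, and the constant and function names are my own, chosen for illustration. It collapses the whois range into CIDR notation and confirms that the address the load balancer resolved to falls inside Amazon’s EU block.

```python
import ipaddress

# Inclusive range reported by the whois lookup (netname AMAZON-EU-AWS).
RANGE_START = ipaddress.IPv4Address("46.137.0.0")
RANGE_END = ipaddress.IPv4Address("46.137.127.255")

# Address returned for the ELB hostname that www.police.uk is a CNAME for.
SITE_IP = ipaddress.IPv4Address("46.137.113.146")


def range_as_networks(start, end):
    """Collapse an inclusive start-end address range into CIDR networks."""
    return list(ipaddress.summarize_address_range(start, end))


def in_range(ip, start, end):
    """Return True if ip lies within the inclusive range [start, end]."""
    return start <= ip <= end


networks = range_as_networks(RANGE_START, RANGE_END)
print(networks)  # [IPv4Network('46.137.0.0/17')]
print(in_range(SITE_IP, RANGE_START, RANGE_END))  # True
print(any(SITE_IP in net for net in networks))  # True
```

The whois range turns out to be a single /17 (32,768 addresses), and 46.137.113.146 sits comfortably within it, which is consistent with the site being hosted on EC2 in Amazon’s EU region.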