The revelations of the past week or so have been interesting to me more for what they haven’t said than for what they have. There are a few points arising from Snowden’s story which puzzle me and which don’t seem to have been addressed by the mainstream media – at least not the ones I read.

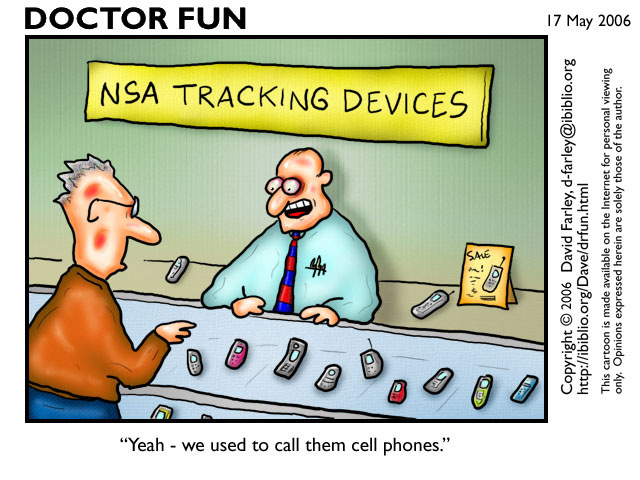

And there is more than a whiff of Captain Renault in the reaction in some quarters to the story. “I’m shocked, shocked, to find that the NSA is spying on the internet.” No shit Sherlock. What do you think they do? That’s their job. Go and read their publicly avowed Mission Statement. Their vision is “Global Cryptologic Dominance through Responsive Presence and Network Advantage.” Key words – “Global” “Network Advantage”. Nor should anyone be surprised to learn that the NSA and GCHQ share intelligence. The two Agencies are extremely close and have been ever since the UKUSA agreements initially forged in 1943, 1946 and 1948. Richard J Aldrich devotes a whole chapter of his book on GCHQ to the UKUSA agreements. That chapter quotes Admiral Andrew Cunningham (Chief of the Naval Staff in 1945) as saying “Much discussion about 100% cooperation with the USA about SIGINT. Decided that less than 100% cooperation was not worth having.” David Omand said in his Guardian article of 11 June that he was “delighted at this evidence that our transatlantic co-operation extends in this hi-tech way into the 21st century, when so much communication is carried on the internet.” (Though he couldn’t resist the opportunity to plug a greater UK intercept capability as proposed by the draft Communications Data Bill, saying “It would be good, nevertheless, if the UK security authorities were able to identify directly themselves more of the traffic of terrorists and serious criminals that threaten us, and I hope amended interception legislation will be presented to parliament soon.”)

And again, nor should anyone be surprised that the NSA has close links with major internet-focused companies such as Google, Microsoft and Facebook. Think about it. Facebook alone is a spook’s wet dream. Google has vast amounts of information about its users. If you use an Android smartphone, that information also includes real-time geo-location information.

Of course NSA are going to seek access to that resource – the only question is how they will do that.

All the companies named in the initial Guardian articles denied that they allowed access in the way claimed by Snowden. But here again, don’t be surprised by such denial. It seems highly probable to me that any US company asked such questions would be likely to deny that they had done anything illegal. And bear in mind that any company served with a “National Security Letter” (as is used primarily by the FBI seeking information on individuals from banks, internet and telecommunication companies etc.) is prohibited by law from telling anyone about it. Inevitably then those companies are going to be a little reticent when quizzed by UK journalists about their relationships with any intelligence agency. Furthermore, as Duncan Campbell says in his Register article, it is hardly surprising that all nine companies deny they have ever heard of PRISM. Why should they have heard of it if that is simply the internal classified name for the program?

Snowden strikes me as intelligent, rational, thoughtful, resourceful, and, within the framework of his apparent view of the world, probably well meaning. Certainly he has managed to achieve one of his proclaimed objectives in that he has provoked discussion of how Intelligence Agencies should act in today’s networked world. Whether you think he is traitor or hero will depend on your own personal take on what a mature democracy should be prepared to do in the name of protecting its citizens. Personally I welcome the debate but regret the way it has been initiated.

As I said above, some aspects of this story puzzle me. Snowden is, or rather was, reportedly a sysadmin working for Booz Allen Hamilton on contract to NSA. (He calls himself an “Infrastructure Analyst” but lists a series of roles which seem to be focused on network and systems management.) Moreover, he had only been working for Booz Allen for a short time, having previously been employed by Dell (though still working at the Agency). He obviously had TS clearance, and as a sysadmin would have had extensive access to NSA infrastructure and assets. Now I’ve been a sysadmin and the view such an administrator has of IT systems is very different to the view provided to users of those systems. The sysadmin’s view will be dominated by access to logs, audit systems, monitoring and control systems, backup and recovery systems, file and device management systems, security controls and so on. The sysadmin’s tools will not normally provide a view of the data held on the systems in quite the same way as that provided to the system’s users. A system user on the other hand will have a desktop providing access to the applications he or she needs to do his or her job. Those applications will give a view of the data which makes sense in the context of that person’s job.

In an organisation like the NSA, I would expect strict role-based access control systems to be in place. I would also expect there to be extensive auditing and monitoring systems enforcing those controls. At a trivial level, any user without authority to use a particular system (or see the data it holds) would not be provided with the desktop tools that would allow them even to know the system exists. Where they do have access to a particular system, that access should be granular with a strict need-to-know ruleset applied. Furthermore, any attempted access to systems by persons without the privilege granted by the need to know should set off all sorts of alarms and should result in that person having an interesting discussion with either their line management or internal security. The same principle should apply to those staff with the most privileged access – the system administrators. Whilst a sysadmin on a TS system may need to have fairly extensive low-level access to the operating system and file structure, there is no need for that admin to have the same kind of view of the data on that system as is provided to, say, an intelligence analyst. So whilst the admin may be able to copy, move, delete, backup, recover files etc, he or she has no need to see the contents of those files in the way the system user does. Snowden says in his interview that as a sysadmin he saw so much, and so much more than a normal user would over the course of his or her career, that he felt compelled to expose what the NSA was doing. I find that odd.
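The sort of role-based, need-to-know check I have in mind can be sketched in a few lines of Python. This is purely illustrative: the role names, the "imagery" system, the users and the `AccessController` class are all my own inventions for the sake of the example, and I make no claim that any real Agency system looks like this.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping. Each permission is a
# (system, action) pair; anything not listed is denied by default.
ROLE_PERMISSIONS = {
    "analyst": {("imagery", "view")},
    "sysadmin": {("imagery", "backup"), ("imagery", "restore")},
}


@dataclass
class AccessController:
    """Deny-by-default access check that records every refusal as an alert."""

    alerts: list = field(default_factory=list)

    def check(self, user, role, system, action):
        allowed = (system, action) in ROLE_PERMISSIONS.get(role, set())
        if not allowed:
            # A refused attempt is exactly the event that should prompt
            # a conversation with line management or internal security.
            self.alerts.append(
                {"user": user, "role": role, "system": system, "action": action}
            )
        return allowed


controller = AccessController()
analyst_can_view = controller.check("alice", "analyst", "imagery", "view")
admin_can_backup = controller.check("bob", "sysadmin", "imagery", "backup")
admin_can_view = controller.check("bob", "sysadmin", "imagery", "view")
```

In this sketch the admin can back up the imagery store but cannot view its contents, and the refused attempt is recorded rather than silently dropped.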

To give an overly simplistic example, an analyst may use a system which allows him or her to compare high resolution photographic images over time. The sysadmin managing that system may not need routine access to the application which renders those images on screen in quite the same way. So any attempt by the admin to look at the data in the same way as the analyst does should be an auditable event which should be logged. And any and all attempts to copy such data should similarly be an auditable event. Moreover, the staff monitoring the audit controls should be completely separate from the administration team. On a highly secured system it should not be possible for a sysadmin to scan through files and copy them without someone somewhere being alerted to that fact and then asking questions. Furthermore, the systems available to the sysadmin should not even provide the capability for off-line copies to be made. Snowden reportedly copied the files he has released to a USB memory stick. I assume that USB memory sticks (or any other portable removable media) are not permitted on NSA premises, but since Bradley Manning seemed to use a similar device to remove files from US Defence systems I think we can assume that the policy is a little lax. I confess to being surprised that the systems in use even provide USB access. But since it seems that they do, then I would expect that any and all USB device insertions would be auditable events with a high alerting level. It seems they weren’t – or at least no-one followed them up.
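Again, a rough Python sketch may make the point about auditable events clearer. Everything here is hypothetical: the event type names, the `AuditLog` class and the example user are mine, and the assumption that the log is owned by a team separate from the administrators is stated in a comment rather than enforced by the code.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical event types that should always raise a high-priority alert.
HIGH_ALERT_EVENTS = {"usb_insert", "bulk_copy", "admin_content_view"}


@dataclass(frozen=True)
class AuditEvent:
    timestamp: str
    user: str
    event_type: str
    detail: str
    severity: str


class AuditLog:
    """Append-only event log.

    Assumed to be owned and reviewed by staff who are separate from the
    administration team; that separation is organisational, not shown here.
    """

    def __init__(self):
        self._events = []

    def record(self, user, event_type, detail):
        severity = "high" if event_type in HIGH_ALERT_EVENTS else "info"
        event = AuditEvent(
            datetime.now(timezone.utc).isoformat(),
            user,
            event_type,
            detail,
            severity,
        )
        self._events.append(event)
        return event

    def pending_alerts(self):
        # Events a reviewer should follow up, in the order they occurred.
        return [e for e in self._events if e.severity == "high"]


log = AuditLog()
log.record("bob", "backup", "nightly backup of imagery store")
log.record("bob", "usb_insert", "removable device attached to admin workstation")
log.record("bob", "bulk_copy", "many files copied to removable device")
alerts = log.pending_alerts()
```

Routine work such as the nightly backup is logged quietly, while the USB insertion and the bulk copy both land in the list that someone outside the admin team is supposed to act on.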

This is made even more puzzling in my view by the fact that Snowden professed in his interview to have complained openly in the past about his view that what he was seeing constituted “abuses” of power and “wrongdoing”. He even said “the more you talk about this, the more you are ignored”. So we have here an individual who cares deeply about democratic accountability, who is openly critical of what he calls abuses of power and who has TS clearance and system level access to highly classified systems which do not seem to prevent off-line copying and which furthermore do not seem to have any meaningful auditing in place. That says something interesting about the NSA’s security policies and vetting procedures. Given that he has only recently been recruited by Booz Allen Hamilton and that in the process he seems to have retained his TS clearance, that also says something about the NSA’s attitude towards its outside partners’ processes.

What I also find puzzling is that Snowden apparently told his management that he was taking some leave (for treatment for recently diagnosed epilepsy) and then simply boarded a plane for Hong Kong where he met the journalist he had previously contacted over a “secure route” and gave his interview.

That series of events – poor vetting, lax security policies, poor audit and control, and failure to spot a nascent whistleblower’s contact with journalists – sits oddly with the assertion that the NSA is all seeing and all powerful, and must make some people feel very uncomfortable. On the other hand, it may make some others feel very much more relaxed.

I look forward to the story developing further.