Well, I have cast my vote. Let’s hope we get the result we need.

Permanent link to this article: https://baldric.net/2010/05/06/this-is-a-politics-free-zone/

May 03 2010

email address images

Adding valid email addresses to web sites is almost always a bad idea these days. Automated ‘bots routinely scan web servers and harvest email addresses for sale to spammers and scammers. And in some cases, email addresses harvested from commercial web sites can be used in targeted social engineering attacks. So, posting your email address to a website in a way which is useful to a human being, but not to a ‘bot, has to be a “good thing” (TM). One way of doing so is to use an image of an address rather than the text itself. Of course this has the disadvantage that the address will not be immediately usable by client email software (unless, of course, you defeat the object of the exercise by adding an html “mailto” tag to the image) but it should be no big deal for someone who wants to contact you to write the address down.

There are a number of web sites which offer a (free) service which allows you to plug in an email address and then download an image generated from that address. However, I can’t get over the suspicion that this would be an ideal way to actually harvest valid email addresses, moreover addresses which you could be pretty certain the users did not want exposed to spammers. Call me paranoid, but I prefer to control my own privacy.

There are also a number of web sites (and blog entries) describing how to use netpbm tools to create an image from text – one of the better ones (despite its idiosyncratic look) is at robsworld. But in fact it is pretty easy to do this in gimp. Take a look at the address below:

This was created as follows:

open gimp and create a new file with a 640×480 template (actually any template will do);

select the text tool and choose a suitable font size, colour etc;

enter the text of the address in the new file;

select image -> autocrop image;

select layer -> Transparency -> Colour to Alpha;

select from white (the background colour) to alpha;

select save-as and use the file extension .png – you will be prompted to export as png.

Now add the image to your web site.
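For anyone who would rather script this than click through gimp, here is a minimal sketch using ImageMagick. Note that this is a different tool from the ones mentioned above (gimp and netpbm). It assumes the “convert” command is installed and can find a default font; the function name, the example address and the output file name are all made up for illustration.

```shell
# Render an email address as a PNG with a transparent background.
# Assumes ImageMagick's "convert" is on the PATH; this is a substitute
# for the gimp steps, not the method used in the post.
make_address_image() {
    address="$1"   # text to render, e.g. someone@example.org
    outfile="$2"   # output file name, should end in .png
    # "label:" sizes the canvas to fit the text, so no separate crop
    # step is needed; "-background none" gives the transparency.
    convert -background none -fill black -pointsize 18 \
        "label:$address" "$outfile"
}
```

Something like “make_address_image someone@example.org address.png” should then produce a file you can add to the site in the same way as the gimp version.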

Permanent link to this article: https://baldric.net/2010/05/03/email-address-images/

May 02 2010

ubuntu 10.04 – minor, and some not so minor, irritations

If and when the teething problems in 10.04 are fixed and the distro looks stable enough to supplant my current preferred version, I will be faced with one or two usability issues. In this version, canonical have taken some design decisions which seem to have some of the fanbois frothing at the mouth. The most obvious change in the new “light” theme applied is the move of the window control buttons from the top right to the top left (a la Mac OSX). Personally I don’t find this a problem, but it seems to have started all sorts of religious wars and has apparently even resulted in Mark Shuttleworth being branded as a despot because he had the temerity to suggest that the ubuntu community was not a democracy. Design decisions are taken by the build team, not by polling the views of the great unwashed. In my view that is how it should be. The great beauty of the free software movement is the flexibility and freedom it gives its users to change anything they don’t like. Hell, you can even build your own linux distro if you don’t like any of the (multiple) offerings available. Complaining about a design decision in one distro simply means that the complainant hasn’t understood the design process, and further, probably doesn’t understand that if he or she doesn’t like it, then they are perfectly free to change that decision on their own implementation.

In fact, it is pretty easy to change the button layout. To do so, simply run “gconf-editor” then select apps -> metacity -> general from the left hand menu. Now highlight the button_layout attribute and change the entry as follows:

change

close,minimize,maximize:

to

:minimize,maximize,close

i.e. move the colon from the right hand end of the line to the left and relocate the close button to the outside. Bingo, your buttons are now back where god ordained they should be and all is right in the universe.
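The same change can be made from a terminal with gconftool-2, the command line companion to gconf-editor. The sketch below uses the key and value from the steps above; the two function names are made up for illustration.

```shell
# GConf key and value taken from the gconf-editor steps above.
KEY=/apps/metacity/general/button_layout
LAYOUT=':minimize,maximize,close'

# Put the window buttons back on the right hand side.
set_button_layout() {
    gconftool-2 --type string --set "$KEY" "$LAYOUT"
}

# Print the current setting, to check the change took effect.
show_button_layout() {
    gconftool-2 --get "$KEY"
}
```

Run “set_button_layout” as the desktop user (not root), since the setting is stored per user.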

Presentation issues aside, there are some more fundamental design issues which are indicative of a worrying trend. As I noted in the post below, it is now pretty easy to install restricted codecs as and when they are needed. Rhythmbox will happily pull in the codecs needed to play MP3 encoded music with only a minor acknowledgement that the codecs have been deliberately omitted from the shipped distribution for a reason – the format is closed and patent encumbered. Most users won’t care about the implications here, but I think it is only right that they should know the implications of using a closed format before accepting it. It is also worth bearing in mind that some software (including that necessary to watch commercial DVDs) is deliberately not shipped because the legal implications of doing so are problematic in many countries.

So, whilst from a usability perspective, I may applaud the decisions which have made it easy for the less technically savvy users to get their multimedia installations up and running with minimal difficulty, I find myself more than a little unhappy with the implications.

But it gets worse. Enter ubuntu one.

Ubuntu one attempts to do for ubuntu what iTunes does for Apple (but without the DRM one hopes….). The new service is integrated with rhythmbox and allows users to search for and then pay for music on-line. The big problem here is that the music is all encoded in MP3 format when ubuntu, as a champion of free software, could have chosen the (technically superior) patent free ogg vorbis format. The choice smacks of business “realpolitik” in a way that I find disappointing from a company like Canonical. Compare and contrast this approach with the strictly free and open stance taken by Debian and you have to wonder where Canonical is going.

Watch this space. If they introduce DRM in any form there will be an unholy row.

Permanent link to this article: https://baldric.net/2010/05/02/ubuntu-10-04-minor-and-some-not-so-minor-irritations/

May 02 2010

ubuntu 10.04 problems

The latest LTS version of ubuntu (10.04, or lucid lynx according to your naming preferences) was released to an eagerly waiting public on 29 April. Long term support (LTS) versions are supported for three years on the desktop and five years on the server instead of the usual 18 months for the normal releases. My current desktop of choice is 8.04 (the previous LTS version) and I will probably move to 10.04 eventually. But not yet.

A wet and windy bank holiday weekend (as this is) meant that my plans to go fishing were put on hold so I downloaded the 10.04 .isos to play with. I grabbed three versions, the 32 and 64 bit desktops and the netbook-remix version. Given that this was a mere day after the release date, I expected a slow response from the mirrors, but I was pleasantly surprised by the download speeds I obtained. Canonical must have put a lot of effort into getting a good range of fast mirrors. The longest download took just over 22 minutes and the fastest came down in just 14 minutes.

I copied the netbook-remix .iso to a USB stick using unetbootin on my 8.04 desktop (later versions of ubuntu ship with a usb startup disk creator) and installed to my AAO netbook with no hitches whatever. The new theme ditches the bright orange (or worse, brown) colour scheme used in earlier versions of ubuntu and looks attractive and professional.

I spent a short while adding some of my preferred tools and applications and configuring the new installation to handle my multimedia requirements, but all this is now remarkably easy. Even playback of restricted formats (MP3 or AAC audio for example) is eased by the fact that totem (or rhythmbox) will fetch the required codecs for you when first you attempt to play a file which needs them. So, pleasant and easy to use. But I /still/ can’t get sony memory sticks to work.

But the netbook is simply a (mobile) toy. I do not rely upon it as I do my desktop. Any data on the netbook is ephemeral and (usually) a copy of the same data held elsewhere, either on a server in the case of email, or my main desktop. It would not matter if my installation had trashed the netbook, but my desktop is far more important. It has taken me a long time to get that environment working exactly the way I want it, and there is no way I will update it without a lot of testing first.

I am lucky enough to have plenty of spare kit around to play with though and I normally test any distro I like the look of in a virtual machine on an old 3.4 GHz dual core pentium 4 I have. Until this weekend, that box was running a 64 bit installation of ubuntu 9.04 with virtualbox installed for testing purposes. Running a new distro in a virtual machine is normally good enough to give me a feel for whether I would be happy using that distro long term – but it does have some limitations and I really wanted to test 10.04 with full access to the underlying hardware so I decided to wipe the test box and install the 64 bit download. If it worked I could then re-install virtualbox and use the new base system as my test rig in future. If it failed, then all I have lost is some time on a wet weekend. It failed.

To be fair, the installation actually worked pretty well. My problems arose when I started testing my multimedia requirements. I installed all the necessary codecs and libraries (along with libdecss, mencoder, vlc, flash plugins etc, etc) to allow me to waste time watching youtube, MP4 videos and DVDs only to discover that neither of the DVD/CD devices in my test box were recognised. I could not mount any optical medium. This is a big problem for me because I encode my DVDs to MP4 format so that I can watch them on my PSP on the train. Thinking that there might be a problem with the automounter, I tried manually mounting the devices – no go, mount failed consistently because it could not find any media. I could not find any useful messages in any of the logs so I checked the ubuntu forums to see if others were having any similar problems. Yep – I’m not alone. This is a common problem. But it seems that I’m pretty lucky not to have seen a lot more problems (black, or purple, screen of death seems to be a major complaint). I think I’ll wait a month or so before trying again.

Meanwhile, I guess I can always ask for my money back.

Permanent link to this article: https://baldric.net/2010/05/02/ubuntu-10-04-problems/

Apr 18 2010

where are you

I have added a new widget to trivia – a map of the world from clustrmaps which gives a small graphic depicting where in the world the IP addresses associated with readers are supposedly located. Geo location of IP addresses is not a perfect art, but the map given corresponds roughly with what I expect from my logs.

I’ve checked the clustrmaps privacy statement and am reasonably content that neither my, nor (more importantly) your, privacy is compromised by this addition any more than it would be if I linked to any other site. Clustrmaps logs no more information about your visit to trivia than do I.

Besides, the map is pretty.

Permanent link to this article: https://baldric.net/2010/04/18/where-are-you/

Permanent link to this article: https://baldric.net/2010/04/02/there-are-10-kinds-of-people-in-the-world/

Mar 31 2010

webDAV in lighttpd on debian

I back up all my critical files to one of my slugs using rsync over ssh (and just because I am really cautious I back that slug up to another NAS). Most of the files I care about are the obvious photos of friends and family. I guess that most people these days will have large collections of jpeg files on their hard disks whereas previous generations (including myself I might add) would have old shoe boxes filled with photographs.

The old shoe box approach has much to recommend it. Not least the fact that anyone can open that box and browse the photo collection without having to think about user ids or passwords, or having to search for some way of reading the medium holding the photograph. I sometimes worry about how future generations’ lives will be subtly impoverished by the loss of the serendipity of discovery of said old shoe box in the attic. Somehow the idea of the discovery of a box of old DVDs doesn’t feel as if it will give the discoverer the immediate sense of delight which can follow from opening a long forgotten photo album. Old photographs feel “real” to me in a way that digital images will never do. In fact the same problem affects other media these days. I am old enough (and sentimental enough) to have a stash of letters from friends and family past. These days I communicate almost exclusively via email and SMS. And I feel that I have lost some part of me when I lose some old messages in the transfer from one ‘phone to another or from one computing environment to another.

In order to preserve some of the more important photographs in my collection I print them and store them in old fashioned albums. But that still leaves me with a huge number of (often similar) photographs in digital form on my PC’s disk. As I said, I back those up regularly, but my wife pointed out to me that only I really know where those photos are, and moreover, they are on media which are password protected. What happens if I fall under a bus tomorrow? She can’t open the shoebox.

Now given that all the photos are on a networked device, it is trivially easy to give her access to those photos from her PC. But I don’t like samba, and NFS feels like overkill when all she wants is read-only access to a directory full of jpegs. The slug is already running a web server and her gnome desktop conveniently offers the capability to connect to a remote server over a variety of different protocols, including the rather simple option of WebDAV. So all I had to do was configure lighty on the slug to give her that access.

Here’s how:-

If not already installed, then run aptitude to install “lighttpd-mod-webdav”. If you want to use basic authenticated access then it may also be useful to install “apache2-utils” which will give you the apache htpasswd utility. The htpasswd authentication function uses unix crypt and is pretty weak, but we are really only concerned here with limiting browsing of the directory to local users on the local network, and if someone has access to my htpasswd file then I’ve got bigger problems than just worrying about them browsing my photos. There are other authentication mechanisms we can use in lighty if we really care – although I would argue that if you really want to protect access to a network resource you shouldn’t be providing web access in the first place.

To enable the webdav and auth modules, you can simply run “lighty-enable-mod webdav” and “lighty-enable-mod auth” (which actually just create symlinks from the relevant files in /etc/lighttpd/conf-available to /etc/lighttpd/conf-enabled directories) or you can activate the modules directly in /etc/lighttpd/lighttpd.conf or the appropriate virtual-host configuration file with the directives:

server.modules += ( "mod_auth" )

server.modules += ( "mod_webdav" )

lighty is pretty flexible and doesn’t really care where you activate the modules. The advantage of using the “lighty-enable-mod” approach however, is that it allows you to quickly change by running “lighty-disable-mod whatever” at a future date. The symlink will then be removed and so long as you remember to restart lighty, the module activation will cease.

Now to enable the webdav access to the directory in question we need to configure the virtual host along the following lines:

$HTTP["host"] == "slug" {

# turn off directory listing (assuming that it is on by default elsewhere)

server.dir-listing = "disable"

# turn on webdav on the directory we wish to share

$HTTP["url"] =~ "^/photos($|/)" {

webdav.activate = "enable"

webdav.is-readonly = "enable"

webdav.sqlite-db-name = "/var/run/lighttpd/lighttpd.webdav_lock.db"

auth.backend = "htpasswd"

auth.backend.htpasswd.userfile = "/etc/lighttpd/htpasswd"

auth.require = ( "" => ( "method" => "basic",

"realm" => "photos",

"require" => "valid-user" ) )

}

}

Note that the above configuration will allow any named user listed in the file /etc/lighttpd/htpasswd read only access to the “/photos” directory on the virtual host called “slug” if they can successfully authenticate. Note also, however, that because directory listing is turned off, it will not be possible for that user to access this directory with a web browser (which would be possible if listing were allowed). Happily however, the gnome desktop (“Connect to server” mechanism) will still permit authenticated access and once connected will provide full read only access to all files and subdirectories of “/photos” in the file browser (which is what we want). The “auth.backend” directive tells lighty that we are using the apache htpasswd authentication mechanism and the file can be found at the location specified (make sure this is outside webspace). The “auth.require” directives specify the authentication method (bear in mind that “basic” is clear text, albeit base64 encoded, so authentication credentials can be trivially sniffed off the network); the “realm” is a string which will be displayed in the dialogue box presented to the user (though it has additional functions if digest authentication is used); “require” specifies which authenticated users are allowed access. This can be useful if you have a single htpasswd file for multiple virtual hosts (or directories) and you wish to limit access to certain users.

Passing authentication credentials in clear over a hostile network is not smart, so I would not expose this sort of configuration to the wider internet. However, the webDAV protocol supports ssl encryption so it would be easy to secure the above configuration by changing the setup to use lighty configured for ssl. I choose not to here because of the overhead that would impose on the slug when passing large photographic images – and I trust my home network……
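To see just how thin the base64 “protection” is, here is a small sketch that builds the token a client sends in its “Authorization: Basic” header and then reverses it. The user name and password are made-up values, and the function names are mine; it assumes the coreutils base64 command is available.

```shell
# Show how little protection base64 gives a basic-auth credential.
# "user" and "secret" are made-up values for illustration.
encode_basic() {
    # Builds the token a client sends after "Authorization: Basic ".
    printf '%s:%s' "$1" "$2" | base64
}

decode_basic() {
    # What anyone sniffing the header can do with that token.
    printf '%s' "$1" | base64 -d
}

token=$(encode_basic user secret)
echo "Authorization: Basic $token"        # Authorization: Basic dXNlcjpzZWNyZXQ=
echo "Decoded: $(decode_basic "$token")"  # Decoded: user:secret
```

No key is involved at any point, which is why basic authentication should only be used over a network you trust or inside ssl.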

Now given that we have specified a password file called “htpasswd” we need to create that file in the “/etc/lighttpd” configuration directory thusly:

htpasswd -cm /etc/lighttpd/htpasswd user

The -c switch to htpasswd creates the file if it does not already exist. When adding any subsequent users you should omit this switch or the file will be recreated (and thus overwritten). The “user” name adds the named user to the file. You will be prompted for the password for that user after running the command. As I mentioned above, by default the password will only be encrypted by crypt, the -m switch used forces htpasswd to use (the stronger) MD5 hash. Note that htpasswd also gives the option of SHA (-s switch) but this is not supported by lighty in htpasswd authentication. Make sure that the passwd file is owned by root and is not writeable by non root users (“chown root:root htpasswd; chmod 644 htpasswd” if necessary).
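Because forgetting to drop the -c switch wipes out the existing users, it can be worth wrapping the command. The following is only a sketch: the function names are made up, the default path is the one used in the configuration above, and it assumes htpasswd from apache2-utils is installed and that you run it as root.

```shell
# Add a user to the htpasswd file, passing -c only when the file does
# not exist yet, so an existing file is never overwritten by accident.
HTPASSWD_FILE="${HTPASSWD_FILE:-/etc/lighttpd/htpasswd}"

htpasswd_flags() {
    if [ -e "$1" ]; then
        echo "-m"      # file exists: add or update a user, MD5 hash
    else
        echo "-cm"     # first user: create the file, MD5 hash
    fi
}

add_photo_user() {
    user="$1"
    # htpasswd prompts for the password interactively.
    htpasswd "$(htpasswd_flags "$HTPASSWD_FILE")" "$HTPASSWD_FILE" "$user"
    # Ownership and mode as suggested in the text above.
    chown root:root "$HTPASSWD_FILE"
    chmod 644 "$HTPASSWD_FILE"
}
```

Calling “add_photo_user wife” twice is then safe: the first call creates the file and the second merely updates the entry.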

The above configuration also assumes that the files you wish to share are actually in the directory called “photos” in the web root of the virtual host called “slug”. In my case this is not true because I have all my backup files outside the webspace on the slug (for fairly obvious reasons). In order to share the files we simply need to provide a symlink to the relevant directory outside webspace.
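As a sketch of that last step, the function below creates the link. Both paths are placeholders which you will need to replace with your own backup directory and the document root of the virtual host; the function name is made up. It relies on lighty following symlinks, which as far as I know it does unless “server.follow-symlink” has been disabled.

```shell
# Link a directory held outside webspace into the web root under the
# name "photos". Both arguments are placeholders, not the real layout.
link_photos() {
    source_dir="$1"   # e.g. the backup directory holding the jpegs
    web_root="$2"     # document root of the "slug" virtual host
    ln -s "$source_dir" "$web_root/photos"
}
```

The web server user needs read access to the target directory (and execute access to every directory above it) for the link to be of any use.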

Now whenever the backup is updated, my wife has access to the latest copy of all the photos in the shoebox. But let’s hope I don’t fall under a bus just yet.

Permanent link to this article: https://baldric.net/2010/03/31/webdav-in-lighttpd-on-debian/

Mar 30 2010

what a user agent says about you

I get lots of odd connections to my servers – particularly to my tor relay. Mostly my firewalls bin the rubbish but my web server logs still show all sorts of junk. Occasionally I get interested (or possibly bored) enough to do more than just scan the logs and I follow up the connection traces which look really unusual. I may get around to posting an analysis of all my logs one day.

One of the interesting traces in web logs is the user agent string. Mostly this just shows the client’s browser details (or maybe proxy details) but often I find that the UA string is the signature of a known ‘bot (e.g. Yandex/1.01.001). A good site for keeping tabs on ‘bot signatures is www.botsvsbrowsers.com. But I also find user-agent-string.info useful as a quick reference. If you have never checked before, it can be instructive to learn just how much information you leave about yourself on websites you visit. Just click on “Analyze my UA” (apologies for the spelling, they are probably american) for a full breakdown of your client system.
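If you want a quick look at who is visiting your own server, something along these lines will list the most common UA strings in an access log. It is a rough sketch which assumes the usual “combined” style log layout, where the user agent is the third double-quoted field on each line; the function name is made up, and the log location will depend on your server (on debian, lighty normally writes to /var/log/lighttpd/access.log).

```shell
# Count the most frequent user agent strings in an access log.
# Assumes the "combined" layout: request, referer and user agent are
# the three double-quoted fields, so the UA is field 6 when each line
# is split on the double-quote character.
top_user_agents() {
    logfile="$1"
    awk -F'"' '{ print $6 }' "$logfile" | sort | uniq -c | sort -rn | head -n 20
}
```

Anything you don’t recognise in the output can then be looked up on one of the sites above.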

Permanent link to this article: https://baldric.net/2010/03/30/what-a-user-agent-says-about-you/

Mar 30 2010

unplugged

My earlier problems with the sheevaplug all seem to have stemmed from the fact that I had installed Lenny to SDHC cards. As I mentioned in my post of 7 March, I burned through two cards before eventually giving up and trying a new installation to USB disk. This seems to have fixed the problem and my plug is now stable. I had a series of problems with the SD cards I used (class 4 SDHC 8 GB cards) which may have been related to the quality of the cards I used. Firstly the root filesystem would often appear as readonly and the USB drive holding my apt-mirror (mounted as /home2) would similarly appear to be mounted read-only. This seemed to occur about every other day and suggested to me that the plug had seen a problem of some kind and rebooted. But of course since the filesystem was not writeable, there were no logs available to help my investigations.

I persevered for around two weeks during which time I completely rebuilt both the original SD card and another with Martin’s tarball, reflashed uboot with the latest from his site, and reset the uboot environment to the factory defaults before trying again. I also changed /etc/fstab to take out the “errors=remount-ro” entry against the root filesystem, and reduced the number of writes to the card by adding “noatime, commit=180” in the hope that I could a) gain stability, and b) find out what was going wrong. No joy. I still came home to a plug with a /home2 that was either unmounted or completely unreadable or mounted RO. The disk checked out fine on another machine and I could find nothing obvious in the logs to suggest why the damned thing was failing in the first place. Martin’s site says that “USB support in u-boot is quite flaky”. My view is somewhat stronger than that, particularly when the plug boots from another device and then attaches a USB disk.

But I don’t give up easily. After getting nowhere with the SDHC card installation from Martin’s tarball, I reset the uboot environment on the plug to the factory default (again) and then ran a network installation of squeeze to a 1TB USB disk (following Martin’s howto). It took me two attempts (I hit the bug in the partitioner on the first installation) but I now have a stable plug running squeeze. It is worth noting here that I had to modify the uboot “bootcmd” environment variable to include a reset (as Martin suggests may be necessary) so that the plug will continue to retry after a boot failure until it eventually loads. The relevant line should read:

setenv bootcmd 'setenv bootargs $(bootargs_console); run bootcmd_usb; bootm 0x00800000 0x01100000; reset'

The plug now boots successfully every second or third attempt. So far it has been up just over ten days now without any of the earlier problems recurring.

My experience appears not to be all that unusual. There has been some considerable discussion on the debian-arm list of late about problems with installation to SDHC cards. Most commentators conclude that wear levelling on the cards (particularly cheap ones) may not be very good. SD cards are sold formatted as FAT or FAT32 (depending on the capacity of the card). Modern journalling filesystems such as ext3 on linux result in much higher read/write rates and the quality of the cards becomes a much greater concern. Perhaps my cards just weren’t good enough.

Permanent link to this article: https://baldric.net/2010/03/30/unplugged/

Mar 21 2010

psp video revisited

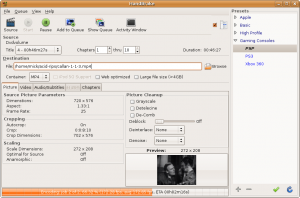

I last posted about ripping DVDs to PSP format back in November 2007. Since then I have used a variety of different mechanisms to transcode my DVDs to the MP4 format preferred by my PSP. A couple of years ago I experimented with both winff and a command line front end to ffmpeg called handbrake. Neither were really as successful as I would have liked (though winff has improved over the past few years) so I usually fell back to the mencoder script that works for 95% of all the DVDs I buy.

I have continually upgraded the firmware on my PSP since 2007 so that I am now running version 6.20 (the latest as at today’s date). Somewhere between version 3.72 and now, sony decided to stop being so bloody minded about the format of video they were prepared to allow to run on the PSP. We are still effectively limited to mpeg-4/h.264 video with AAC audio in an mp4 container, but the range of encoding bitrates and video resolutions is no longer as strictly limited as it was back in late 2007. So when going about converting all the DVDs I received for christmas and my last birthday and considering whether I should move my viewing habits to take advantage of the power of my N900, I recently revisited my transcoding options.

Despite the attractiveness of the N900’s media player I concluded that it still makes sense to use the PSP for several reasons:- it works; the battery lasts for around 7 hours between charges; I have a huge investment in videos encoded to run on it; and most importantly, not using the N900 as intensively as I use the PSP means that I know that my ‘phone will be charged enough to use as a ‘phone should I need it.

But whilst revisiting my options I discovered that the latest version of handbrake (0.9.4) now has a rather nice GUI and it will rip and encode to formats usable by both the PSP and a variety of other hand-held devices (notably apple’s iphone and ipod thingies) quite quickly and efficiently. Unfortunately for me, the latest version is only available as a .deb for ubuntu 9.10 and I am still using 8.04 LTS (because it suits me). A quick search for alternative builds led me to the ppa site for handbrake which gives builds up to version 0.9.3 for my version of ubuntu. See below:

This version works so well on my system that I no longer have to use my mencoder script.

Permanent link to this article: https://baldric.net/2010/03/21/psp-video-revisited/

Mar 15 2010

16 is so much safer than 10

I am indebted to a colleague of mine (thank you David) for drawing this to my attention.

The Symantec Norton SystemWorks 2002 Professional Edition manual says:

“About hexadecimal values – Wipe Info uses hexadecimal values to wipe files. This provides more security than wiping with decimal values.”

I suppose octal or (worse) binary, just won’t cut the mustard.

Permanent link to this article: https://baldric.net/2010/03/15/16-is-so-much-safer-than-10/

Mar 07 2010

plug instability

I’m still having a variety of problems with my sheevaplug. Not least of which is the fact that SDHC cards don’t seem to be the best choice of boot medium. I have had failures with two cards now and some searching of the various on-line fora suggests that I am not alone here. In particular, SD cards seem to suffer badly under the read/write load that is routine for an OS writing log files – let alone one running a file or web server. I have also had several failures with my external USB drive. It seems that the plug boots too quickly for the USB subsystem to initialise properly. This means that there is not enough time for the relevant device file (/dev/sda1 in my case) to appear before /etc/fstab is read to mount the drive. A posting on the plugcomputer.org forum suggested a useful workaround (essentially introducing a wait), but even that was only partially successful. Sometimes it worked, sometimes it didn’t. In fact, the USB drive still often fails after a random (and short) time and then remounts read-only. Attempts to then remount the drive manually (after a umount) result in failure with the error message “mount: special device /dev/sda1 does not exist”.
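For illustration, the general idea of “introducing a wait” looks something like the sketch below. This is not the forum’s actual workaround, just my own minimal version of the technique: poll for the device node and give up after a timeout. The function name and the 30 second default are assumptions.

```shell
# Wait up to a given number of seconds for a device node to appear.
# Returns 0 as soon as the path exists, 1 if the timeout expires.
wait_for_device() {
    dev="$1"
    timeout="${2:-30}"
    waited=0
    while [ ! -e "$dev" ]; do
        if [ "$waited" -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 0
}
```

Called early in the boot sequence as, say, “wait_for_device /dev/sda1 30 && mount -a”, this at least ensures that the mount is only attempted once the device file exists. It does nothing, of course, for a drive which drops off the bus later.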

In my attempts to cure both the booting problems and the USB connection failures I have installed the latest uboot (3.4.27 with pingtoo patches linked to from Martin’s site) and updated my lenny kernel to Martin’s 2.6.32-2-kirkwood in the (vain as it turns out) hope that the latest software would help. Here I also discovered another annoying problem – installing the latest kernel does not result in a new kernel image, the plug still boots into the old kernel until you run “flash-kernel”. Fortunately this is reasonably well known and is covered in Martin’s troubleshooting page.

I will persevere for perhaps another week with the current plug configuration. If I can’t get a stable system though I will try installing to USB drive (perverse as that may seem) and changing the uboot to boot from that rather than the flaky SD card. Most on-line advice suggests that USB support in uboot is rather “immature”, but it can’t be any worse than the current setup. My thinking is that if I can introduce a delay in the boot process by uboot so that I can successfully boot from an external HDD, the drive connection might then be stable enough to be usable.

Of course I could be completely wrong.

Permanent link to this article: https://baldric.net/2010/03/07/plug-instability/

Feb 28 2010

from slug to plug

Well this took rather longer than expected. I intended to write about my latest toy much earlier than this, but several things got in the way – more of which later.

About three or four weeks ago I bought myself a new sheevaplug.

The plug has been on sale in the US for some time, but UK shipping costs added significantly to $99 US retail price. Recently however, a UK supplier (Newit) has started stocking and selling the plugs over here – and at very good prices too. My plug arrived within three days of order and I can thoroughly recommend Newit. The owner, one Jason King no less (fans of 1970’s TV will recognise the name), kept me informed of progress from the time I placed the order to the time it was shipped. He even took the trouble to email me after shipping to check that I had received it OK. Nice touch, even if it was automated.

Looking much like a standard “wall wart” power supply typically attached to an external disk, the plug is actually quite chunky, but it will still fit comfortably in the palm of your hand. Inside that little box though there is enough computing power to make a slug owner more than happy. The processor is a 1.2 GHz Marvell Kirkwood ARM-compatible device and it is coupled with 512MB SDRAM and 512MB Flash memory. Compare that to the poor old slug’s 266 MHz processor and 32 MB of flash and you can see why I’d be interested – particularly since the plug can run debian (and Martin Michlmayr has again provided a tarball and instructions to help you out).

The plugs come in a variety of flavours, but all offer at least one USB 2.0 port, a mini usb serial port, gigabit ethernet and an SDHC slot. This means that debian (or another debian based OS such as Ubuntu) can be installed either to the internal flash or to one of the external storage media available. Newit ship the plugs in various configurations and will happily sell you a device fully prepared with debian (either Lenny or Squeeze according to your taste) on SD card to go with the standard Ubuntu 9.04 in flash. Personally I chose to install debian myself, so I bought the base model. (No, I’m not a cheapskate, I just prefer to play. Where’s the fun in buying stuff that “just works”?)

Given that Martin’s instructions suggest that installing to USB disk can be problematic, and that I have debian lenny on my slugs (and had a spare 4 Gig SDHC card lying around) I chose to use his tarball to install lenny to my SDHC card. Firstly I formatted the card (via a USB mounted card reader) as below:

/dev/sdb1 512 Meg bootable

/dev/sdb2 2.25 Gig

/dev/sdb3 1024 Meg swap

(Note that the plug will see these devices as “/dev/mmcblk0pX” when the card is loaded. The “/dev/sdbX” layout simply reflects the fact that I was using a USB mounted card reader on my PC.)

I then downloaded and installed Martin’s lenny tarball to the newly formatted card and as instructed edited the /etc/fstab to match my installation. Martin’s fstab file is below:

# /etc/fstab: static file system information.

#

#proc /proc proc defaults 0 0

# Boot from USB:

/dev/sda2 / ext2 errors=remount-ro 0 1

/dev/sda1 /boot ext2 defaults 0 1

/dev/sda3 none swap sw 0 0

# Boot from SD/MMC:

#/dev/mmcblk0p2 / ext2 errors=remount-ro 0 1

#/dev/mmcblk0p1 /boot ext2 defaults 0 1

#/dev/mmcblk0p3 none swap sw 0 0

As you can see it defaults to assuming a USB attached device. You need to comment out the USB boot entries and uncomment the SD/MMC entries if, like me, you are intending to boot from SD card. At this stage I also edited “/etc/network/interfaces” to change the eth0 interface from dhcp to static (to suit my network) and I changed “/etc/resolv.conf” because the default includes references to cyrius.com and a local IP address for DNS.
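The fstab change described above is easy to get wrong by hand, so here is a minimal Python sketch of the same edit. It is not part of Martin's instructions. The function name `switch_fstab_to_sd` is made up for illustration, and it assumes the file uses exactly the “/dev/sdX” and “#/dev/mmcblk0pX” line prefixes shown in the listing above.

```python
def switch_fstab_to_sd(fstab_text: str) -> str:
    """Comment out USB (/dev/sdX) entries and uncomment SD/MMC entries.

    Assumes the layout of the fstab shown in the post: active USB lines
    start with "/dev/sd" and disabled SD lines start with "#/dev/mmcblk".
    All other lines (headers, proc, blanks) are passed through untouched.
    """
    out = []
    for line in fstab_text.splitlines():
        if line.startswith("/dev/sd"):
            out.append("#" + line)      # disable the USB boot entry
        elif line.startswith("#/dev/mmcblk"):
            out.append(line[1:])        # enable the SD/MMC boot entry
        else:
            out.append(line)
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    sample = (
        "# Boot from USB:\n"
        "/dev/sda2 / ext2 errors=remount-ro 0 1\n"
        "/dev/sda3 none swap sw 0 0\n"
        "# Boot from SD/MMC:\n"
        "#/dev/mmcblk0p2 / ext2 errors=remount-ro 0 1\n"
        "#/dev/mmcblk0p3 none swap sw 0 0\n"
    )
    print(switch_fstab_to_sd(sample), end="")
```

In practice you would read the fstab from the mounted card, write the result back, and check it by eye before unmounting. A two-line edit in vi is just as good, of course.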

Before we can boot from the SD card, we have to make a few changes to the uboot boot loader configuration to stop it using the default OS on internal flash (where the factory installed Ubuntu resides). Again, Martin’s instructions are helpful here but he points to the openplug.org wiki for instructions on setting up the necessary serial connection to the plug. On my PC (running Ubuntu 8.04 LTS) I got no ttyUSB devices by default and “modprobe usbserial” did not work but “modprobe ftdi_sio vendor=0x9e88 product=0x9e8f” did work for me.

Now open a TTY session using cu thusly “cu -s 115200 -l /dev/ttyUSB1” – don’t use putty on linux, it doesn’t allow cut and paste which can be very useful if you are following on-line instructions (of course it helps if you cut and paste the right instructions). I found that booting is too fast if you have to switch on the plug and then return to a keyboard so I recommend simply leaving the terminal session open and resetting the plug with a pin or paper clip. Hit any key to interrupt the boot session, then follow Martin’s instructions for editing the uboot environment.

My plug was running v 3.4.16 of uboot, so at first I used version 3.4.27 (downloaded from plugcomputer.org) and loaded that via tftp as described by Martin. But this turned out to be a mistake because my plug failed to boot thereafter. I got the following error message via the serial console:

## Booting image at 00400000 …

Image Name: Debian kernel

Created: 2009-11-23 17:25:02 UTC

Image Type: ARM Linux Kernel Image (uncompressed)

Data Size: 1820320 Bytes = 1.7 MB

Load Address: 00008000

Entry Point: 00008000

Verifying Checksum … Bad Data CRC

Some searching suggested that the uboot image was probably the problem and that reverting to v3.4.19 would solve this. So I downloaded 3.4.19 from “vioan’s” post “#6 on: November 16, 2009, 03:21:34 PM” at the plugcomputer.org forum and reflashed the plug with that image. Success – my plug now booted into debian lenny. Tidy up, update the OS and add a normal user as recommended and we’re ready to go.
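For anyone who hits the same “Bad Data CRC” message: uboot is comparing a CRC32 stored in the kernel image header with one it computes over the data it has loaded. The sketch below repeats that check on a PC, assuming the kernel is in the legacy uImage format (a 64 byte big-endian header followed by the payload). The function name `check_uimage` is my own invention. If the file checks out here, the fault is more likely in how the plug loaded it than in the download, which fits my experience with the uboot version.

```python
import struct
import zlib

UIMAGE_MAGIC = 0x27051956
# Legacy uImage header: magic, header CRC, timestamp, data size, load
# address, entry point, data CRC, then os/arch/type/compression bytes
# and a 32 byte NUL-padded name. 64 bytes in total, big-endian.
HEADER = struct.Struct(">7I4B32s")


def check_uimage(blob: bytes) -> dict:
    """Verify the header and data CRCs of a legacy uImage held in memory."""
    if len(blob) < HEADER.size:
        raise ValueError("too short to contain a uImage header")
    (magic, hcrc, _time, size, load, entry, dcrc,
     _os, _arch, _type, _comp, name) = HEADER.unpack_from(blob)
    if magic != UIMAGE_MAGIC:
        raise ValueError("not a legacy uImage (bad magic number)")

    # The header CRC is calculated with its own field set to zero.
    zeroed = blob[:4] + b"\x00" * 4 + blob[8:HEADER.size]
    data = blob[HEADER.size:HEADER.size + size]

    return {
        "name": name.rstrip(b"\x00").decode("ascii", "replace"),
        "size": size,
        "load": load,
        "entry": entry,
        "header_ok": (zlib.crc32(zeroed) & 0xFFFFFFFF) == hcrc,
        "data_ok": len(data) == size
                   and (zlib.crc32(data) & 0xFFFFFFFF) == dcrc,
    }
```

To use it, read the image file in binary mode and pass the bytes to `check_uimage`; a `data_ok` of `False` corresponds to the “Bad Data CRC” complaint.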

My plug was intended to replace the slug I was using as my local apt-mirror. That mirror is now fairly large because I have a mix of 32 and 64 bit ubuntus (of varying vintages) and 386 and ARM versions of debian. I therefore recycled an unused 500 gig lacie USB disk and mounted that as /home2 (originally as /home, but I soon changed that when I wanted to unmount it frequently and then lost my home directory….) Copying the apt-mirror (175 Gig) over the network from my old slug was clearly going to take forever – high speed networking is not the slug’s forte, so I mounted both the slug and the plug’s disks locally on my PC and copied the files over USB – much faster. It was here that I discovered why the old lacie disk (a “designed by porsche” aluminium coated beast) was lying idle. I’d forgotten that it sounded like a harrier jump jet on take off when in use. I put up with that for a week – just long enough to get me to a free weekend when I could rebuild the old slug (now used as just an NTP server and the webcam) to boot from a 4 gig USB stick so that I could recycle its disk onto the plug. I’ve just finished doing that.

One other problem I found with the plug which caused me much head scratching (and delayed my writing this as I noted above) was that it consistently failed to boot back into my debian install after a “reboot” or “shutdown -r” – I had to power cycle the device to get it to boot properly. I spent some time this weekend with the serial port connected before I noticed (using “printenv” at the uboot prompt) that I had mixed up the uboot environment variables printed on Martin’s site. I had actually copied part of the instructions for the USB boot variant instead of the correct ones for the SD card boot. Sometimes “cut and paste” can be a mistake.

Permanent link to this article: https://baldric.net/2010/02/28/from-slug-to-plug/

Feb 26 2010

homeopathy

I can’t recall how I got there, but this made me laugh enough to want to share it.

Permanent link to this article: https://baldric.net/2010/02/26/homeopathy/

Feb 20 2010

isp shenanigans

I have recently been off-line. And I am less than happy about the reasons.

My ISP recently informed me that it was changing its back end provider from Entanet to Vispa. Like many small ISPs, my provider does not have any real infrastructure of its own, it simply repackages services provided by a wholesaler who does have the necessary infrastructure in a process commonly called “whitelabelling”. This whitelabel approach is particularly common amongst providers of webspace and it normally works fine. Amongst the smaller ISPs there are many who are simply Entanet resellers. And until recently Entanet had a good name for pretty solid service. Well not any more.

I had not noticed any particular problems and was slightly surprised to hear from my ISP that they were unhappy with the service they were getting from Entanet. Apparently there had been frequent network outages for many of their customers and so they had chosen a new provider and were notifying their customers of impending moves. Of course this would mean some local configuration changes so customers were advised in advance of those changes and the dates for action. Apart from preparing to change the ADSL login details on my router, in my case I also had to ensure that my SSH and other login details on various external services I have or use were modified to accept the new fixed IP address assigned to my router (I tend to lock down such services so that they only accept connections from my IP address, not foolproof I know, but it all helps).

In the migration advice letter, my ISP advised its customers to set up new direct debit arrangements for Vispa and cancel the existing ones to Entanet. That letter advised that any over or under charge either way during transition would be sorted out between the providers. So I did as I was advised and waited for the big day (approximately 10 days away). Big mistake.

About a week before the date of transition I found my web traffic intercepted and blocked by Entanet with the message “Your account has been blocked. Please contact your internet service provider”. This blockage only occurred on web traffic (my email collection over POP3S and IMAPS continued to work, as did ICMP echo requests and ssh connections out). This action actually pissed me off even more than I would have been if Entanet had completely cut my connection. It also, incidentally, betrayed the fact that they were using a transparent web proxy on the connection – not something that makes me very happy. But simply blocking web traffic was obviously designed to annoy me and make me contact my ISP and strongly suggests to me that Entanet were unsure of their legal right to cut me off completely. Further, in my view, intercepting my web traffic in this way may actually have been illegal.

Interestingly, even http traffic aimed inbound to my ADSL line (where I run a webcam on one of my slugs) was similarly intercepted as is evidenced by this link from changedetection.com. Obviously, the imposition of the message from Entanet was picked up by changedetection as an actual change to that web page.

So I emailed Entanet and my ISP, pointing out that my contract was with them and not Entanet and told them to sort it out between themselves. I, as a customer, did not expect to be penalised simply because my ISP had decided to change its wholesaler. Meanwhile, I decided to bypass Entanet’s pathetic and hugely irritating web block by tunneling out to a proxy of my own. Of course I could have used my existing tor connection, but that is not always as fast as I would like, particularly at peak web usage hours, so I set up a new proxy on another of my VPSs using tinyproxy, listening on localhost 8118 (the same as privoxy on my tor node). I then set up an ssh listener on my local machine and set firefox to use that listener as its proxy – again, much as I had for tor. Bingo. Stuff you Entanet.
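The ssh listener described above amounts to a single local port forward. Below is a minimal Python sketch that builds the command. The host name `vps.example.org`, the user name `me` and the choice of 8118 as the local port are placeholders of mine; only the remote tinyproxy port of 8118 comes from the setup described here.

```python
import shlex


def tunnel_command(remote_host, local_port=8118, proxy_port=8118, user=None):
    """Build an ssh command forwarding a local port to a proxy on the VPS.

    -N asks ssh not to run a remote command (we only want the forward);
    -L binds local_port on the loopback interface and forwards it to
    proxy_port on the remote machine's own loopback interface, where
    tinyproxy is assumed to be listening.
    """
    target = f"{user}@{remote_host}" if user else remote_host
    forward = f"127.0.0.1:{local_port}:127.0.0.1:{proxy_port}"
    return ["ssh", "-N", "-L", forward, target]


if __name__ == "__main__":
    # Hypothetical host and user, for illustration only.
    print(shlex.join(tunnel_command("vps.example.org", user="me")))
```

Run the printed command in a terminal (or pass the list to `subprocess.run`), then point the browser's HTTP proxy setting at 127.0.0.1 and the local port. Because both ends bind to loopback only, the proxy is never exposed to the wider internet.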

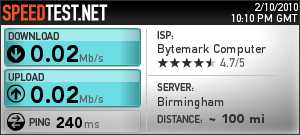

Unfortunately, it did not stop there. Entanet’s rather arrogant response to my email was to insist that I re-establish a direct debit with them for the few days remaining before the changeover (despite them having had my payment in advance for the month in question). No way, so I ignored this request only to find that Entanet then throttled my connection to 0.02 Mb/s – see the speedtest result below.

This sort of speed is just about usable for text only email, but is absolutely useless for much else. Now I had originally been given two separate dates for the changeover by my ISP, so in a fit of over enthusiastic optimism on my part, I tried to convince myself that the earlier (later corrected) date given was the correct one and so I reconfigured my router in the hope it would connect to Vispa. No deal. Worse, when I then tried to fall back to the (pitiful) Entanet connection, I found it blocked completely. I was thus without a connection for some four days (including a very long weekend).

So far my new connection looks good. But apart from my disgust with Entanet, I have not been overly impressed with the support I have received from my ISP during these problems. I’ll keep an eye on things – I may yet move of my own volition.

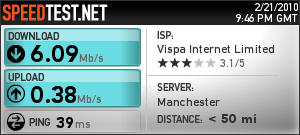

[Addendum] just by way of comparison, the test result below is what I expect my connection speed to look like. Test run at around 21.45 on Sunday 21 February 2010.

That’s a bit better. Note however that this test was direct from Vispa’s network rather than through my ssh tunnel.

Permanent link to this article: https://baldric.net/2010/02/20/isp-shenanigans/

Jan 23 2010

life is too short to use horde

I own a bunch of different domains and run a mail service on all of them. In the past I have used a variety of different ways of providing mail, from simple pop/imap using dovecot and postfix, through to using the database driven mail service in egroupware.

Recently I have consolidated mail for several of my domains onto one of my VPSs. I don’t have a lot of mail users so at first I stuck with the simple approach available to all dovecot/postfix installations, i.e. – using dovecot as the local delivery mechanism and simply telling postfix to hand off incoming mail to dovecot. Dovecot then has to figure out where to deliver mail. I also used a simple password file for the dovecot password mechanism. This mechanism worked fine for a small number of users, but it rapidly becomes a pain if you have multiple users across multiple domains and you wish to allow those users to change their passwords remotely. The solution is to move user management to a MySQL backend and change the postfix and dovecot configurations to use that backend database.

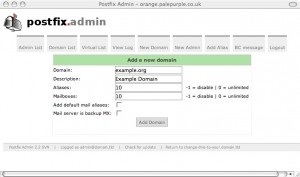

Now to allow (virtual) users to change their mail passwords, most on-line documentation points to the sork password module for horde. But have you /seen/ horde? Sheesh, what a dog’s breakfast of overengineered complexity. I flatter myself that I can find my way around most sysadmin problems, but after most of a day one weekend trying to install and configure the entire horde suite just so that I could use the remote password changing facility I gave up in disgust and went searching for an easier mechanism. Sure enough I found just what I wanted in the shape of postfixadmin. This is a php application which provides a web based interface for managing mailboxes, virtual domains and aliases on a postfix mail server.

Postfixadmin is easy to install and has few dependencies (beyond the obvious php/postfix/mysql). There are even ubuntu/debian packages available for users of those distributions. I also found an excellent installation howto at rimuhosting which I can recommend.

I can now manage all my virtual domains, user mailboxes and aliases from one single point – and the users can manage their passwords and vacation messages from a simple web interface.

Whilst I currently only provide pop3s/imaps mail access through dovecot, postfixadmin offers a squirrelmail plugin to integrate webmail should I wish to do that in future.

Simple, elegant and above all, usable. And it didn’t take all day to install either.

Permanent link to this article: https://baldric.net/2010/01/23/life-is-too-short-to-use-horde/

Jan 22 2010

tor server compromise

According to this post by Roger Dingledine, two tor directory servers were compromised recently. In that post Dingledine said:

In early January we discovered that two of the seven directory authorities were compromised (moria1 and gabelmoo), along with metrics.torproject.org, a new server we’d recently set up to serve metrics data and graphs. The three servers have since been reinstalled with service migrated to other servers.

Whilst the directory servers apparently also hosted the tor project’s svn and git source code repositories, Dingledine is confident that the source code has not been tampered with – and nor has there been any possible compromise of user anonymity. Nevertheless, the project recommends that tor users and operators upgrade to the latest version. Good advice I’d say – I’ve just upgraded mine.

Permanent link to this article: https://baldric.net/2010/01/22/tor-server-compromise/

Jan 10 2010

are you /really/ sure you want that mobile phone

The launch of the google nexus one “iPhone killer” reminds me just how prescient Dr Fun’s cartoon of 16 January 2006 (see third cartoon down from the top on the right) really was.

I just love the way the google employee in the video says at the end that Verizon and Vodafone have “agreed to join our program”.

Oh yes indeed.

Permanent link to this article: https://baldric.net/2010/01/10/are-you-really-sure-you-want-that-mobile-phone/

Jan 02 2010

using scroogle

For completeness, my post below should have pointed to the scroogle search engine which purportedly allows you to search google without google being able to profile you. Neat idea if you must use google (why?) but it still fails the Hal Roberts test of what to do if the intermediate search engine is prepared to sell your data. I actually quite like the scroogle proxy though, particularly in its ssl version because anything that upsets google profiling has to be a good thing. Besides, the really paranoid can simply connect to scroogle via tor.

(Odd that google seem not to have tried to grab the scroogle domain name. If they do, let’s just hope that they get the groovle answer.)

Permanent link to this article: https://baldric.net/2010/01/02/using-scroogle/

Jan 02 2010

scroogled

One of the more annoying aspects of the web follows directly from one of its strengths. The web is actually designed to make it easy for authors to cross refer to the work of others – hyperlinking is intended to make linking between documents anywhere in web space seamless and transparent. Unfortunately, this cross linking ability leads to many posts (this one included) quoting directly from the source when referring to material elsewhere. In the academic world, quoting from source material is encouraged. When the work is properly attributed to the original author, then this is known as research. Without such attribution it is known as plagiarism.

So whenever I post or write here, I try hard to refer to original source material if I am quoting from elsewhere or I am referring to a particular tool or technique I have found useful. If I am writing about something commented on elsewhere (as for example, Hal Roberts’ discussion of GIFC selling user data in my posting about anonymous surfing), then I will try to link directly to the original material rather than to another article discussing that original. There are fairly good (and obvious) reasons for doing this, not least of which is that the original author deserves to be read directly and not through the (possibly distorting) lens of someone else’s words.

Writing for the web is a very different art to writing for print publication. Any web posting can easily become lazy as the author cross refers to other web posts. Many of those posts may be inaccurate or not primary source material. This can lead to the sort of problem commonly seen in web forums where umpteen people quote someone who said something about someone else’s commentary on topic X or Y. In such circumstances, finding the original, definitive, authoritative, source can be difficult.

Like most people, when faced with this sort of problem I resort to using one or more of the main search engines. But what to search for? Plugging in a simple quote from the original article can often bring up references to unrelated material which happens to include that same (or very similar) phrase. Worse, for reasons outlined above, the search can simply return multiple instances of postings in web fora about the article rather than the article itself. Most irritatingly these days I find that a search will lead to a wikipedia posting – and I just don’t trust the “wisdom of the crowds” enough to trust wikipedia. I’m old fashioned, I like my “facts” to be peer reviewed, authoritative, and preferably written in a form not subject to arbitrary post publication edits. Actually I still prefer dead trees as a trusted source of both factual material and fiction – which is one reason I have lost count of the number of books I have. I also like the reassuring way I can go to my bookshelf and know that my copy of 1984 will be where I left it and in a form in which I remember it.

So when I was researching older articles about Google recently and I wanted to find a copy of Cory Doctorow’s original short fiction piece about Google called “Scroogled” I expected to find umpteen thousand quotes as well as pointers to the original. I was wrong. I originally searched for the phrase “Want to tell me about June 1998?” on the grounds that that would be likely to give me a tighter set of results than simply looking for “scroogled”. This actually gave me fewer than sixty hits on clusty (the search engine I used at the time). I was initially reassured that most of the results were simple extracts of the full story with pointers to the original article on radaronline. Even Doctorow’s own blog points to radaronline without giving a local copy of the story. But then I discovered that radaronline no longer lists that article at that URL. Worse, a search of the site gives no results for “scroogled”. So Cory Doctorow’s creative commons licenced short has vanished from the original location and all I can find are copies. This worries me. Perhaps I’m wrong to rely on pointing to original material. What if the original is ephemeral? Or gets pulled for some reason? And if I point to copies, how can I be sure those copies are faithful to the original?

I actually fell foul of this same problem myself a couple of years ago when I was discussing my experiences with BT’s awful home hub router. I wrote in that post a reference to a contribution I made on another forum about my experiments with the FTP daemon on the hub whilst I was figuring out how to get a root shell. That article no longer exists, because the site no longer exists, and I have no copy.

So the web is both vast and surprisingly small and fragile in places.

Oh, just to be on the safe side, I have posted here a local (PDF) copy of scroogled obtained from feedbooks. You never know.

Permanent link to this article: https://baldric.net/2010/01/02/scroogled/

Dec 30 2009

shiny!

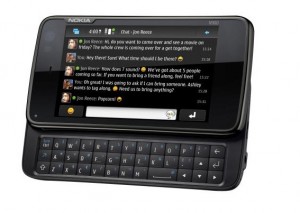

Well I finally cracked and ordered an N900 on-line just before Christmas. Nokia had been promising since about August of this year that the device “might” ship in the UK around October. Since then, the release date has slipped, and slipped, and slipped (much to the amusement of an iPhone using friend of mine who predicted exactly that back in August). Every time I read about a new impending release date I checked with the major independent retailers only to be told “no, not yet, maybe next month”.

Many review sites are now saying that Vodafone and T-Mobile will both be shipping the N900 on contract in January. Well, not according to the local retail outlets for those networks they won’t. And besides, I had no intention of locking myself in to a two year contract at around £35-£40 pcm, particularly if the network provider chose to mess about with the device in order to “customise” it. So, as I say, I cracked and ordered one on-line, unlocked and SIM free on 21 December. It arrived yesterday, which is pretty good considering the Christmas holiday period intervened.

So what is it like?

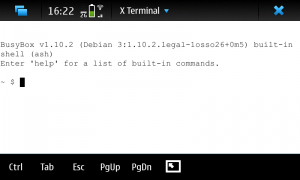

Well, there is a pretty good (if somewhat biased) technical description on the Nokia Maemo site itself, and that site also has a pretty good gallery of images of the beast so I recommend interested readers start there. There are also a number of (sometimes breathless) reviews scattered around the net, use your search engine of choice to find some. I won’t attempt to add much to that canon here. Suffice to say that I am a gadget freak and a fan of all things linux and open source. This device is a powerful, hand held ARM computer with telephony capability – and it runs a Debian derivative of linux. What more could you ask for?

Tap the screen to open the x-terminal and you drop in to a busybox shell.

Oh the joy!

So – first things first. Add the “Maemo Extras” catalogue to the application manager menu, then install openSSH, add a root password and also install “sudo gainroot”. Stuff you Apple, I’ve got a proper smartphone (and, moreover, one which is unlikely to be hit by an SSH bot because a) I have added my own root password, and b) I have moved the SSH daemon to a non-standard port – just because I can). Now I can connect to my N900 from my desktop, but more importantly from my N900 to my other systems. Next on the agenda is the addition of OpenVPN so that I can connect back to my home network from outside. Having the power and portability of the N900 means that even my netbook is looking redundant as a mobile remote access device.

(Oh, and it’s a pretty good ‘phone too, if a little bulky).

[ update posted 16 March 2010 – This review at engadget.com is in my view well balanced and accurate. I have now had around three months usage from my N900 and I love its power and internet connectivity, but I have found myself carrying my old 6500 slide for use as a phone. I agree with engadget that the N900 is a work in progress. If I were designing a successor (N910?) personally I’d drop the keyboard (which I hardly ever use in practice) and save weight and thickness. ]

Permanent link to this article: https://baldric.net/2009/12/30/shiny/

Dec 12 2009

comment spam

I block comment spam aimed at this blog, and I insist that commenters leave some form of identification before I will allow a comment to be posted. Further, I use a captcha mechanism to keep the volume of spam down. Nevertheless, like most blogs, trivia attracts its fair share of attempted viagra ads, porn links and related rubbish. Most appears to come from Russia for some reason.

Periodically I review my spam log and clear it out – it can make for interesting, if ultimately depressing reading (when I can actually understand it). But one post today plucked at my heart strings. The poster, again from a Russian domain, said “Dear Author baldric.net ! I am final, I am sorry, but it does not approach me. There are other variants?”

I guess it lost something in the translation.

Permanent link to this article: https://baldric.net/2009/12/12/comment-spam/

Dec 12 2009

colossally boneheaded

David Adams over at OS News has posted an interesting commentary on Eric Schmidt’s recent outburst. Referring to Schmidt’s statement which I commented on below, Adams says:

I think the portion of that statement that’s sparked the most outrage is the “If you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place” part. That’s a colossally boneheaded thing to say, and I’ll bet Schmidt lives to regret being so glib, if he didn’t regret it within minutes of it leaving his mouth. As many people have pointed out, there are a lot of things you could be doing or thinking about that you don’t want other people to be watching or to know about, and that are not the least bit inappropriate for you to be doing, such as using the toilet, trying to figure out how to cure your hemorrhoids, or singing Miley Cyrus songs in the shower.

The post is worth reading in its entirety.

Permanent link to this article: https://baldric.net/2009/12/12/colossally-boneheaded/

Dec 07 2009

privacy is just for criminals

I’ve mentioned before that I value my privacy. I use tor, coupled with a range of other necessary but tedious approaches (such as refusing cookies, blocking ad servers, scrubbing my browser) to provide me with the degree of anonymity I consider my right in an increasingly public world. It is nobody’s business but mine if I choose to research the symptoms of bowel cancer or investigate the available statistics on crime clear up rates in Alabama. But according to Google’s CEO Eric Schmidt, my choosing to do so anonymously makes me at best suspect, and at worst possibly criminal. In an interview with CNBC, Schmidt reportedly said “If you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place.”

I have been getting increasingly worried about Google’s activities for a while now, but the breathtaking chutzpah of Schmidt’s statement is beyond belief. Lots of perfectly ordinary, law abiding, private citizens from a wide range of backgrounds and interests will use Google’s search capabilities in the mistaken belief that in so doing they are relatively anonymous. This has not been so for some long time now, but the vast majority of people just don’t know that. For the CEO of the company providing those services to suggest that a desire for privacy implies criminality is frankly completely unacceptable.

Just don’t use Google. For anything. Ever.

Permanent link to this article: https://baldric.net/2009/12/07/privacy-is-just-for-criminals/